LLMs don’t do formal reasoning - and that is a HUGE problem

A superb new article on LLMs from six AI researchers at Apple who were brave enough to challenge the dominant paradigm has just come out.

Everyone actively working with AI should read it, or at least this terrific X thread by senior author Mehrdad Farajtabar, which summarizes what they observed. One key passage:

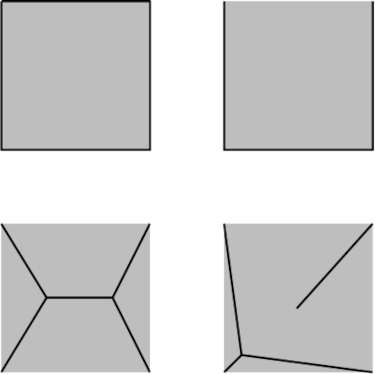

“we found no evidence of formal reasoning in language models …. Their behavior is better explained by sophisticated pattern matching—so fragile, in fact, that changing names can alter results by ~10%!”

This kind of flaw, in which reasoning fails in light of distracting material, is not new. Robin Jia and Percy Liang of Stanford ran a similar study, with similar results, back in 2017 (which Ernest Davis and I quoted in Rebooting AI, in 2019).

**There is just no way you can build reliable agents on this foundation**, where changing a word or two in irrelevant ways or adding a few bits of irrelevant info can give you a different answer.
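To make the worry concrete, here is a minimal sketch of the kind of perturbation check described above: pose the same arithmetic problem several times, varying only details that should not matter (the name, an irrelevant extra sentence), and see how often the answer survives. Everything in it is an assumption for illustration: the template, the names, the distractor sentence, and the two toy "solvers" are hypothetical stand-ins, not the setup the Apple researchers used, and in practice `solver` would wrap a call to whatever model you are testing.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical template: only the name and the distractor vary between variants.
TEMPLATE = (
    "{name} picks {a} apples on Monday and {b} apples on Tuesday. "
    "{distractor}How many apples does {name} have in total?"
)
NAMES = ["Sophie", "Liam", "Amara", "Kenji"]
# The second entry adds a number that is irrelevant to the question.
DISTRACTORS = ["", "5 of the apples are slightly smaller than average. "]


def make_variants(a: int, b: int) -> List[Tuple[str, int]]:
    """Return (prompt, expected_answer) pairs that differ only in irrelevant details."""
    return [
        (TEMPLATE.format(name=name, a=a, b=b, distractor=d), a + b)
        for name in NAMES
        for d in DISTRACTORS
    ]


def accuracy(solver: Callable[[str], int], variants: List[Tuple[str, int]]) -> float:
    """Fraction of variants on which the solver returns the expected answer."""
    correct = sum(1 for prompt, expected in variants if solver(prompt) == expected)
    return correct / len(variants)


def naive_solver(prompt: str) -> int:
    """Toy stand-in for surface pattern matching: add up every number in the text."""
    return sum(int(n) for n in re.findall(r"\d+", prompt))


def careful_solver(prompt: str) -> int:
    """Toy stand-in that only counts the quantities that were actually picked."""
    return sum(int(n) for n in re.findall(r"(\d+) apples on", prompt))


if __name__ == "__main__":
    variants = make_variants(3, 4)
    print("naive:  ", accuracy(naive_solver, variants))
    print("careful:", accuracy(careful_solver, variants))
```

A system that actually reasons about the problem should score the same on every variant; the gap between the clean and perturbed prompts is the number to watch.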