SimonRennotte / Data-Efficient-Reinforcement-Learning-with-Probabilistic-Model-Predictive-Control

Unofficial PyTorch and GPyTorch implementation of the paper Data-Efficient Reinforcement Learning with Probabilistic Model Predictive Control.

Trial-and-error-based reinforcement learning (RL) has seen rapid advancements in recent years, especially with the advent of deep neural networks. However, most autonomous RL algorithms rely either on engineered features or on a large number of interactions with the environment. Such a large number of interactions may be impractical in many real-world applications. For example, robots are subject to wear and tear, so millions of interactions may change or damage the system. Moreover, practical systems have limitations, such as the maximum torque that can be safely applied. To reduce the number of system interactions while naturally handling constraints, we propose a model-based RL framework based on Model Predictive Control (MPC). In particular, we propose to learn a probabilistic transition model using Gaussian Processes (GPs) to incorporate model uncertainties into long-term predictions, thereby reducing the impact of model errors. We then use MPC to find a control sequence that minimises the expected long-term cost. We provide theoretical guarantees for first-order optimality in the GP-based transition models with deterministic approximate inference for long-term planning. The proposed framework demonstrates superior data efficiency and learning rates compared to the current state of the art.
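To make the idea of minimising an *expected* long-term cost concrete, here is a minimal sketch, assuming a hypothetical 1-D system. The function `predict_delta` is a stand-in for a learned GP posterior (it returns a mean and variance for the state change), and the constants `A`, `B`, `U_MAX` and `CONTROL_WEIGHT` are made up for illustration. The optimizer is random shooting, a simple substitute chosen for brevity, not the optimization method used in the paper or in this repository. The point it shows is how model uncertainty enters the objective, via E[(x - c)^2] = (mu - c)^2 + var, and how the torque limit is respected by only sampling bounded actions.

```python
import random

# Hypothetical constants for a 1-D toy system (not taken from the repository).
A, B = 0.1, 0.5        # stand-in model: mean state change = A * x + B * u
U_MAX = 1.0            # actuator limit, e.g. the maximum safe torque
CONTROL_WEIGHT = 0.1   # penalty on control effort


def predict_delta(x, u):
    """Hypothetical stand-in for a GP posterior over the state change.

    Returns (mean, variance). The variance is assumed to grow with |u|,
    mimicking higher model uncertainty away from the training data.
    """
    return A * x + B * u, 0.01 + 0.05 * u * u


def expected_cost(x0, controls, target=0.0):
    """Sum of E[(x_t - target)^2] + CONTROL_WEIGHT * u_t^2 over the horizon.

    The state belief is Gaussian (mu, var). Because the stand-in mean is
    affine in x and its variance depends only on u, propagation is exact:
    x' = (1 + A) * x + B * u + eps, with eps ~ N(0, v(u)).
    """
    mu, var = x0, 0.0
    total = 0.0
    for u in controls:
        d_mean, d_var = predict_delta(mu, u)
        mu = mu + d_mean
        var = (1.0 + A) ** 2 * var + d_var
        # Model uncertainty is penalised through the variance term.
        total += (mu - target) ** 2 + var + CONTROL_WEIGHT * u * u
    return total


def plan(x0, horizon=10, n_samples=500, seed=0):
    """Random-shooting MPC: sample bounded control sequences and keep the one
    with the lowest expected cost. The all-zero sequence is the baseline."""
    rng = random.Random(seed)
    best_seq = [0.0] * horizon
    best_cost = expected_cost(x0, best_seq)
    for _ in range(n_samples):
        # Sampling within [-U_MAX, U_MAX] enforces the actuator constraint.
        seq = [rng.uniform(-U_MAX, U_MAX) for _ in range(horizon)]
        cost = expected_cost(x0, seq)
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return best_seq, best_cost


if __name__ == "__main__":
    # Receding horizon: apply only the first action, then re-plan at the next step.
    seq, cost = plan(x0=1.0)
    print("first action:", round(seq[0], 3), "expected cost:", round(cost, 3))
```

In a full MPC loop, only `seq[0]` would be sent to the system; after observing the next state, the model would be updated and `plan` called again.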

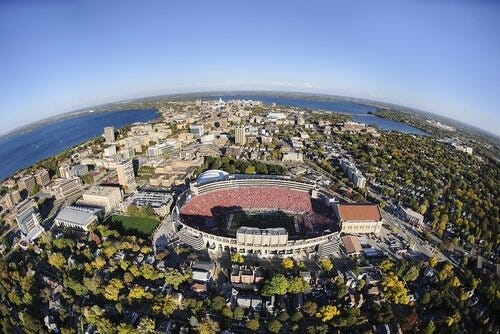

3D plots showing the Gaussian process models and the points in memory. In the top row, each graph shows the change in state at the next step as a function of the current states and actions. The indices in the x- and y-axis labels refer to either states or actions. Action indices come after the state indices; for example, for the pendulum, the input with index 3 is the action. Since not every input of the GP can be shown on a 3D graph, the x and y axes of each graph represent the two inputs (states or actions) with the smallest lengthscales, so the x-y axes may differ from graph to graph. The graphs in the bottom row show the predicted uncertainty, and the points are the prediction errors. Points stored in the memory of the Gaussian process model are shown in green; points that were not stored because they were too similar to points already in memory are shown in black.
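The input indexing, axis selection and memory filtering described in the caption can be sketched as follows. This is a minimal illustration under stated assumptions: `N_STATES = 3` is inferred from the caption saying input 3 is the pendulum action, the helper names are hypothetical, and the memory rule (reject a point whose lengthscale-scaled distance to every stored point is below `min_distance`) is an assumed criterion for "too similar", not necessarily the one this repository uses.

```python
import math

# Assumed sizes: states occupy indices 0..2 and the single action is index 3.
N_STATES = 3
N_ACTIONS = 1


def input_label(index):
    """GP inputs are ordered states first, then actions."""
    if index < N_STATES:
        return f"state {index}"
    return f"action {index - N_STATES}"


def plot_axes(lengthscales):
    """Return the indices of the two inputs with the smallest lengthscales.

    With an ARD kernel, a small lengthscale means the prediction changes
    quickly along that input, so these are the inputs the model is most
    sensitive to and the most useful to show on the x-y axes.
    """
    order = sorted(range(len(lengthscales)), key=lambda i: lengthscales[i])
    return order[0], order[1]


def scaled_distance(a, b, lengthscales):
    """Euclidean distance after dividing each input by its lengthscale."""
    return math.sqrt(sum(((x - y) / l) ** 2 for x, y, l in zip(a, b, lengthscales)))


def filter_memory(points, lengthscales, min_distance):
    """Hypothetical memory rule: keep a point only if it is at least
    min_distance (in lengthscale units) away from every point already kept."""
    memory, rejected = [], []
    for p in points:
        if all(scaled_distance(p, q, lengthscales) >= min_distance for q in memory):
            memory.append(p)   # would be drawn in green
        else:
            rejected.append(p)  # would be drawn in black
    return memory, rejected


if __name__ == "__main__":
    lengthscales = [0.8, 2.5, 0.3, 1.2]  # hypothetical fitted values, one per input
    i, j = plot_axes(lengthscales)
    print(input_label(i), "|", input_label(j))  # state 2 | state 0
    points = [(0, 0, 0, 0), (0.01, 0, 0, 0), (1, 1, 1, 1)]
    kept, dropped = filter_memory(points, lengthscales, min_distance=0.5)
    print(len(kept), "kept,", len(dropped), "rejected")  # 2 kept, 1 rejected
```

Scaling by the lengthscales makes "similar" mean "close relative to how fast the GP prediction changes", which is one reasonable way to decide that a new point adds little information.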
