Search code, repositories, users, issues, pull requests...

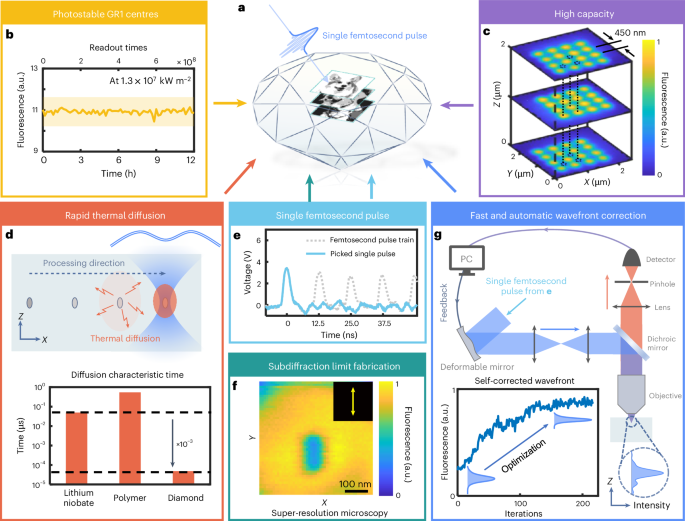

Unlike traditional frameworks which rely on predefined static selectors, this API uses AI vision to interpret the screen. This makes it more resilient to UI changes and able to automate interfaces that traditional tools can't handle.

George uses Molmo, a vision-based LLM, to identify UI elements by converting natural language descriptions into screen coordinates which are then used to execute computer interactions.

Alternatively, you can run Molmo on bare metal, which can reduce the GPU memory consumption down to ~18GB or even ~12GB by leveraging bitsandbytes. Here are some example projects:

This is George. Most of the time he does what he's supposed to, but sometimes he doesn't do the right thing at all. He's a living embodiment of current AI expectations.