Open Source and OpenAI’s Return - GizVault

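OpenAI just dropped GPT‑OSS, its first open‑weight model family since GPT‑2: a 120B variant and a 20B variant that users can run locally. According to OpenAI, the 20B model fits on devices with 16 GB of memory, such as a well-equipped laptop or desktop, while the 120B model needs a single 80 GB GPU. Both are released under Apache 2.0. OpenAI reports that the 120B model comes close to its proprietary o4‑mini on reasoning benchmarks, and that the 20B model performs similarly to o3‑mini.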
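Whether a model "runs locally" comes down largely to whether its weights fit in memory. The sketch below is a rough back-of-the-envelope estimate, not a statement of how GPT‑OSS is actually stored: it takes the nominal sizes from the model names (120B and 20B), and the two precisions (16-bit and 4-bit) are illustrative assumptions. It also ignores activations, the KV cache, and runtime overhead, so real requirements will be higher.

```python
"""Rough estimate of the memory needed just to hold a model's weights."""


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    if num_params < 0 or bits_per_weight <= 0:
        raise ValueError("num_params must be >= 0 and bits_per_weight > 0")
    return num_params * bits_per_weight / 8 / 1e9


# Nominal sizes read off the model names; exact parameter counts may differ.
MODELS = {"120B": 120e9, "20B": 20e9}

# Assumed storage precisions, chosen only for illustration.
PRECISIONS = {"16-bit": 16, "4-bit": 4}

if __name__ == "__main__":
    for model_name, params in MODELS.items():
        for precision_name, bits in PRECISIONS.items():
            gb = weight_memory_gb(params, bits)
            print(f"{model_name} at {precision_name}: ~{gb:.0f} GB of weights")
```

Under these assumptions, the 20B model shrinks from about 40 GB at 16 bits to about 10 GB at 4 bits, and the 120B model from about 240 GB to about 60 GB. That gap is why low-precision weights matter so much for running models of this size on consumer hardware.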

This isn't a marketing stunt; it's a significant shift. It marks OpenAI’s return to releasing weights that anyone can inspect, modify, and self-host. That return came only after competitors like DeepSeek and Meta released open‑source or open‑weight models of their own. DeepSeek’s R1 model, launched in January 2025, was openly released and cost‑efficient, quickly rivaling proprietary systems on reasoning benchmarks and grabbing global attention. OpenAI’s move followed that disruption.

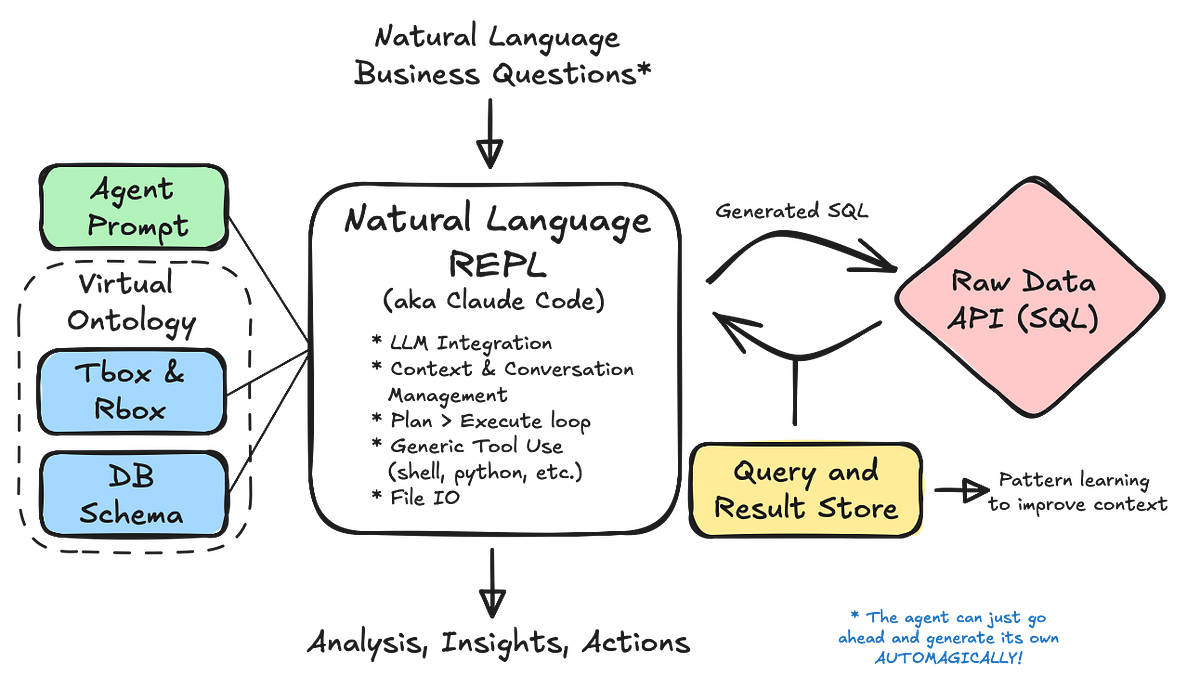

Open‑weight and open‑source aren’t the same: OpenAI’s GPT‑OSS ships without its training datasets or training code. But access to the model's internals and usable weights still opens the door for developers and hackers to experiment offline, inspect biases, and build custom apps without depending on APIs.