Hung Truong: The Blog!

It’s been… one month since I purchased a Claude Pro subscription so I could try Claude Code instead of freeloading off of Google Gemini’s CLI. I thought I would go over the things I learned while vibe coding a few projects, plus some other uses I found for Claude Code besides coding. If you missed it, be sure to check out my initial post about vibe coding, and the follow-up about how Claude Code was definitely better than Gemini (for now)!

So there’s a lot of talk about how prompt engineering is dead, and “context engineering” is the new hotness. That makes a lot of sense to me, as I constantly ran into problems managing context while using Claude Code, and to a lesser degree, with Gemini CLI. To understand context, let me first give you some… context.

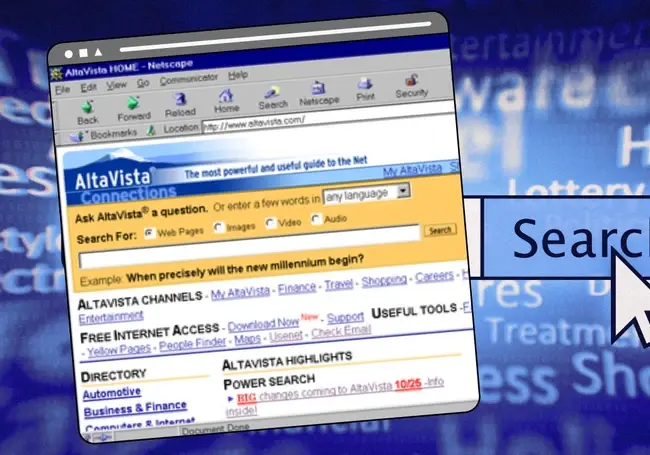

LLMs start out as a pre-trained model with weights (the ‘P’ in ‘GPT’ stands for pre-trained). At their core, most of these models are next-token predictors. So if I input: “Welcome to McDonald’s, how may I help”, the next token would probably be “you”. Before there was ChatGPT, there was the text completion API (does anyone remember using davinci-002?).
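To make "next token predictor" a bit more concrete, here is a toy sketch in Python. It is not how a real LLM works under the hood: a real model uses a neural network with learned weights over subword tokens, while this one just counts which word follows which in a tiny made-up corpus. The corpus and the function names (`train_bigram_model`, `predict_next_token`) are invented for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data"; a real LLM is pre-trained on vastly more text.
corpus = [
    "welcome to mcdonald's , how may i help you",
    "hello , how may i help you today",
    "how may i help you find something",
]

def train_bigram_model(sentences):
    """Count which token follows each token in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts

def predict_next_token(model, prompt):
    """Return the most frequent follower of the prompt's last token, or None."""
    last_token = prompt.lower().split()[-1]
    followers = model.get(last_token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigram_model(corpus)
print(predict_next_token(model, "Welcome to McDonald's, how may I help"))
```

This toy only looks at the last word of the prompt. A real model conditions on everything in its context window, which is why what you put into that context matters so much.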