D r a g - a n d - D r o p L L M s : Zero-Shot Prompt-to-Weights

Despite strong zero-shot competence endowed by pre-training, Large Language Models (LLMs) still require task-specific customization for real-world applications. Parameter-Efficient Fine-Tuning (PEFT), such as LoRA, addresses this by introducing a small set of trainable parameters while keeping original weights frozen. However, it can only alleviate but not erase the cost of per-task-tuning, creating a major bottleneck for large-scale deployment.

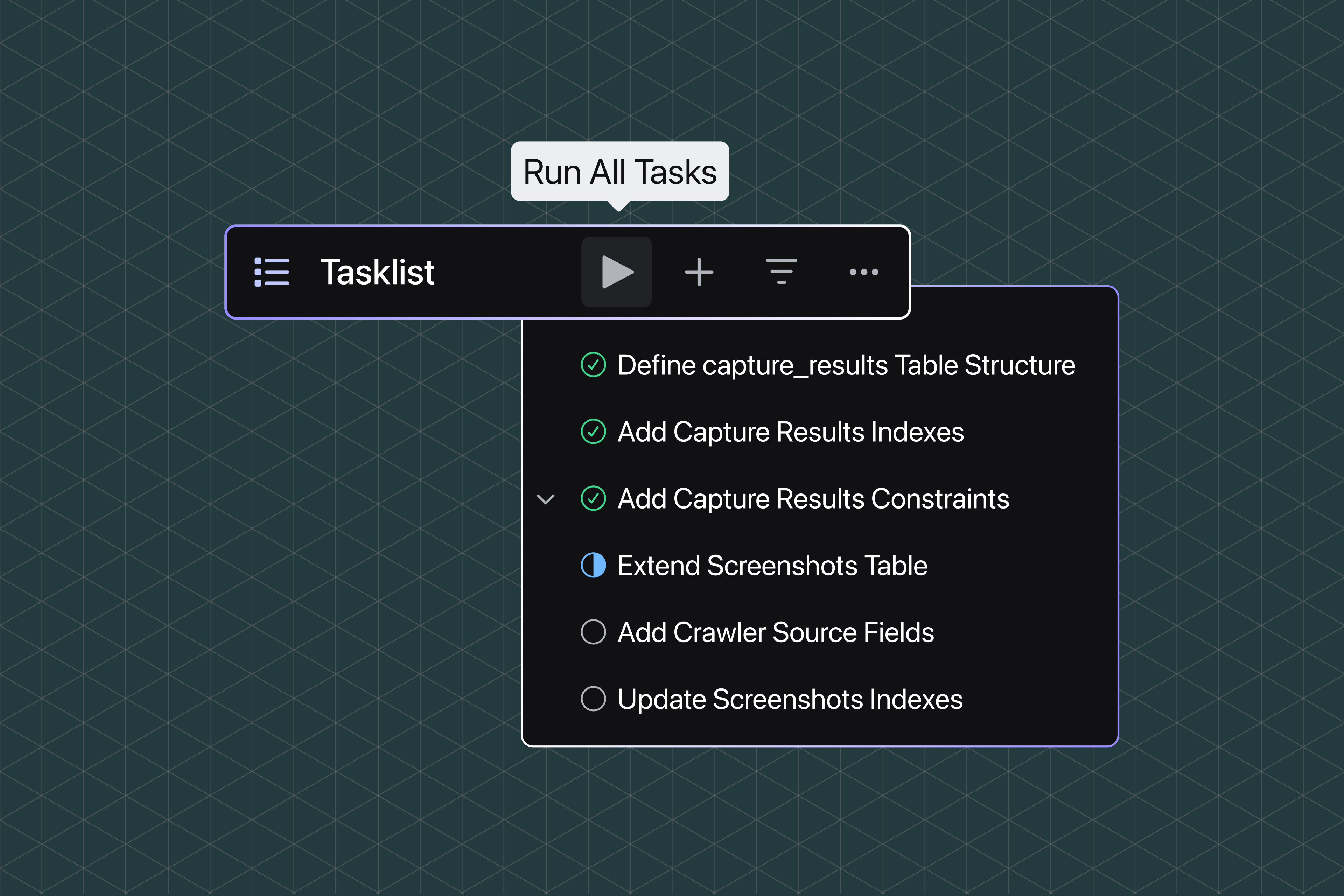

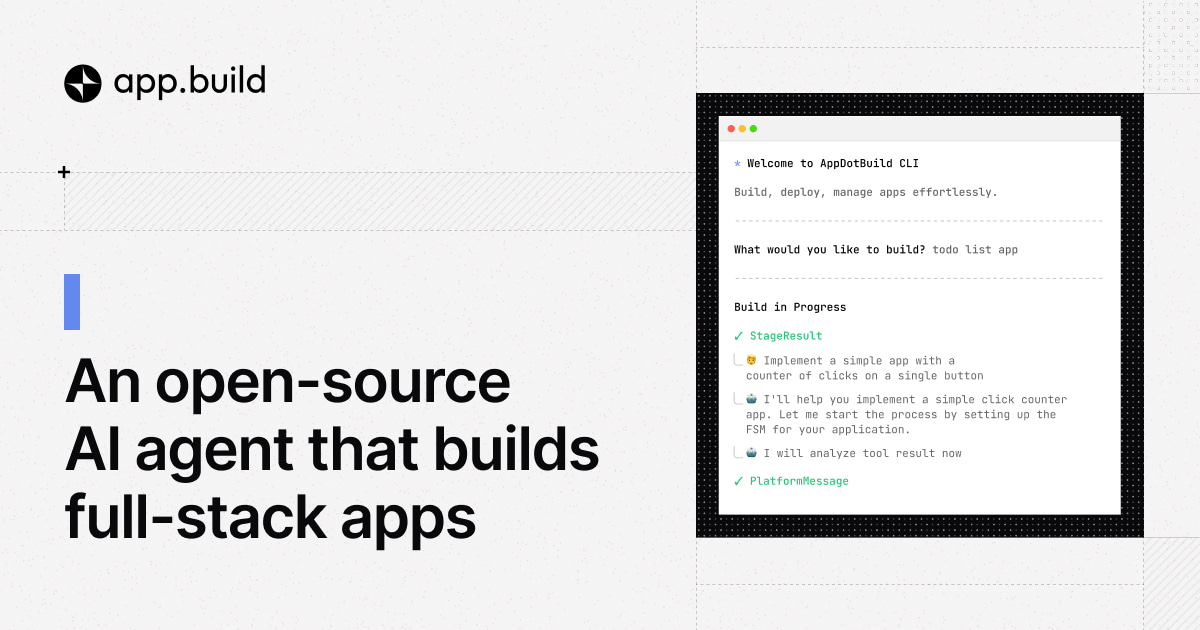

We observe that a LoRA adapter is nothing more than a function of its training data: gradient descent “drags” the base weights towards a task-specific optimum. If that mapping from prompts to weights can be learned directly, we could bypass gradient descent altogether.

Utilizing fine-tuned LoRAs as training data, D n D establishes connections between input data prompts and model parameters. We test D n D 's zero-shot ability by feeding it with prompts from datasets unseen in training and instruct it to generate parameters for novel datasets. Our method shows amazing improvment over the average of training LoRAs on zero-shot test sets, generalizes to multiple real-world tasks, and scales to various LLM sizes.

%20(1).png)