How well can LLMs see?

I created Wimmelbench, a benchmark to test how well multimodal language models can find specific objects in complex illustrations. The best model (Gemini Pro) could accurately describe objects 31% of the time but struggled with precise location marking. Models performed better with larger objects and showed varying tendencies to hallucinate non-existent items.

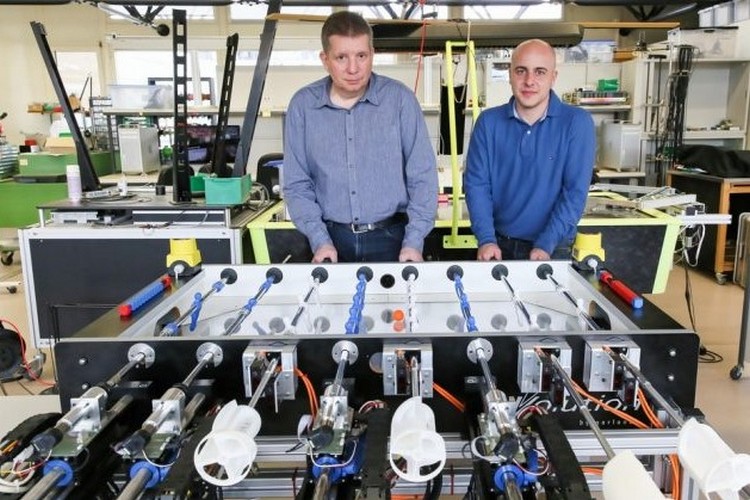

I was recently reminded of old picture books I used to own. The illustrations inside were completely hand-drawn, richly detailed scenes, packed with dozens of people, overlapping objects, and multiple activities — reminiscent of Where’s Waldo, except that you’re not hunting for a particular character.

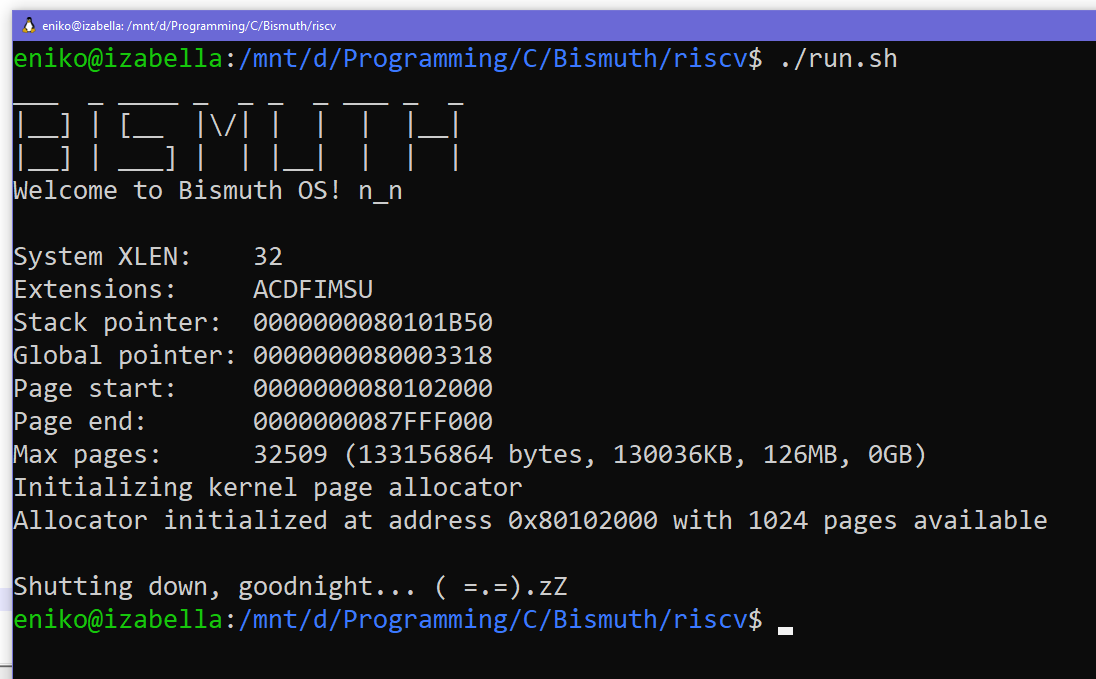

While I’m convinced that LLMs can really “read” language, I’m less sure that they “see” images. To measure sight quantitatively, I created a small benchmark that I’m calling Wimmelbench.

Wimmelbench takes inspiration from the needle-in-a-haystack test. In that benchmark, a random fact (the needle) is inserted into the middle of a large piece of text (the haystack), and an LLM is asked to retrieve the fact. Most LLMs these days score close to 100% on this task. Wimmelbench is the image analogue: a model is asked to describe a small object (the needle) in a complex scene (the haystack) and draw a bounding box around it.
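To make the bounding-box half of the task concrete, here is a minimal sketch of one way a predicted box could be compared with a hand-labelled one. It uses intersection over union (IoU), a common overlap measure. This is an assumption for illustration, not necessarily how Wimmelbench scores boxes. The `Box` type, the `iou` function, and the normalized 0 to 1 coordinates are all hypothetical names and conventions.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box; coordinates assumed normalized to the 0-1 range."""
    x1: float  # left
    y1: float  # top
    x2: float  # right
    y2: float  # bottom


def area(box: Box) -> float:
    # Clamp at zero so an inverted or empty box has no area.
    return max(0.0, box.x2 - box.x1) * max(0.0, box.y2 - box.y1)


def iou(pred: Box, truth: Box) -> float:
    """Intersection over union: 1.0 is a perfect match, 0.0 is no overlap."""
    overlap = Box(
        max(pred.x1, truth.x1),
        max(pred.y1, truth.y1),
        min(pred.x2, truth.x2),
        min(pred.y2, truth.y2),
    )
    inter = area(overlap)
    union = area(pred) + area(truth) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    truth = Box(0.0, 0.0, 1.0, 1.0)
    pred = Box(0.0, 0.0, 0.5, 1.0)  # covers the left half of the true box
    print(iou(pred, truth))
```

A small needle makes this measure unforgiving: a box that is off by only a few pixels can still have little overlap with a tiny object.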
