SF Compute and Modular Partner to Revolutionize AI Inference Economics

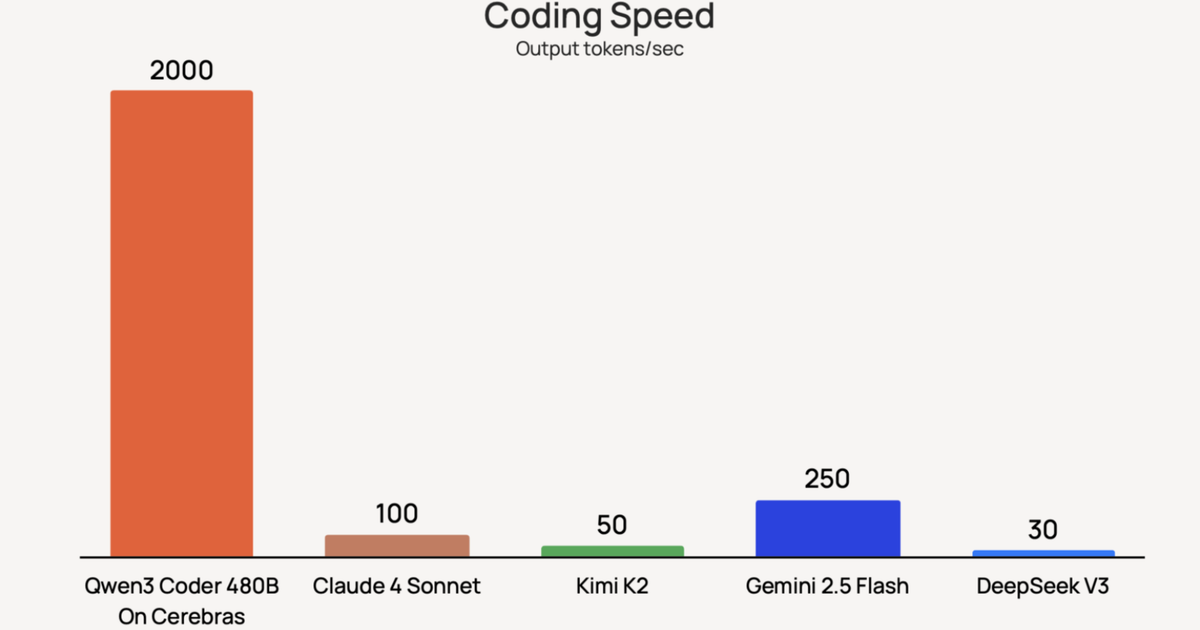

Modular has partnered with SF Compute to address a fundamental asymmetry in the AI ecosystem: while model capabilities advance exponentially, the economic structures governing compute costs remain anchored in legacy paradigms.

We’re excited to launch the Large Scale Inference Batch API – a high-throughput, asynchronous interface built for large-scale offline inference tasks such as data labeling, summarization, and synthetic data generation. At launch, it supports 20+ state-of-the-art models across language, vision, and multimodal domains, from efficient 1B models to 600B+ frontier systems. Powered by Modular’s high-performance inference stack and SF Compute’s real-time spot market, the API delivers up to 80% lower cost than typical market alternatives.

Try it today: we’re offering tens of millions of free batch inference tokens to the first 100 new customers who get started now.