Comparing Refusal Behavior Across Top Language Models

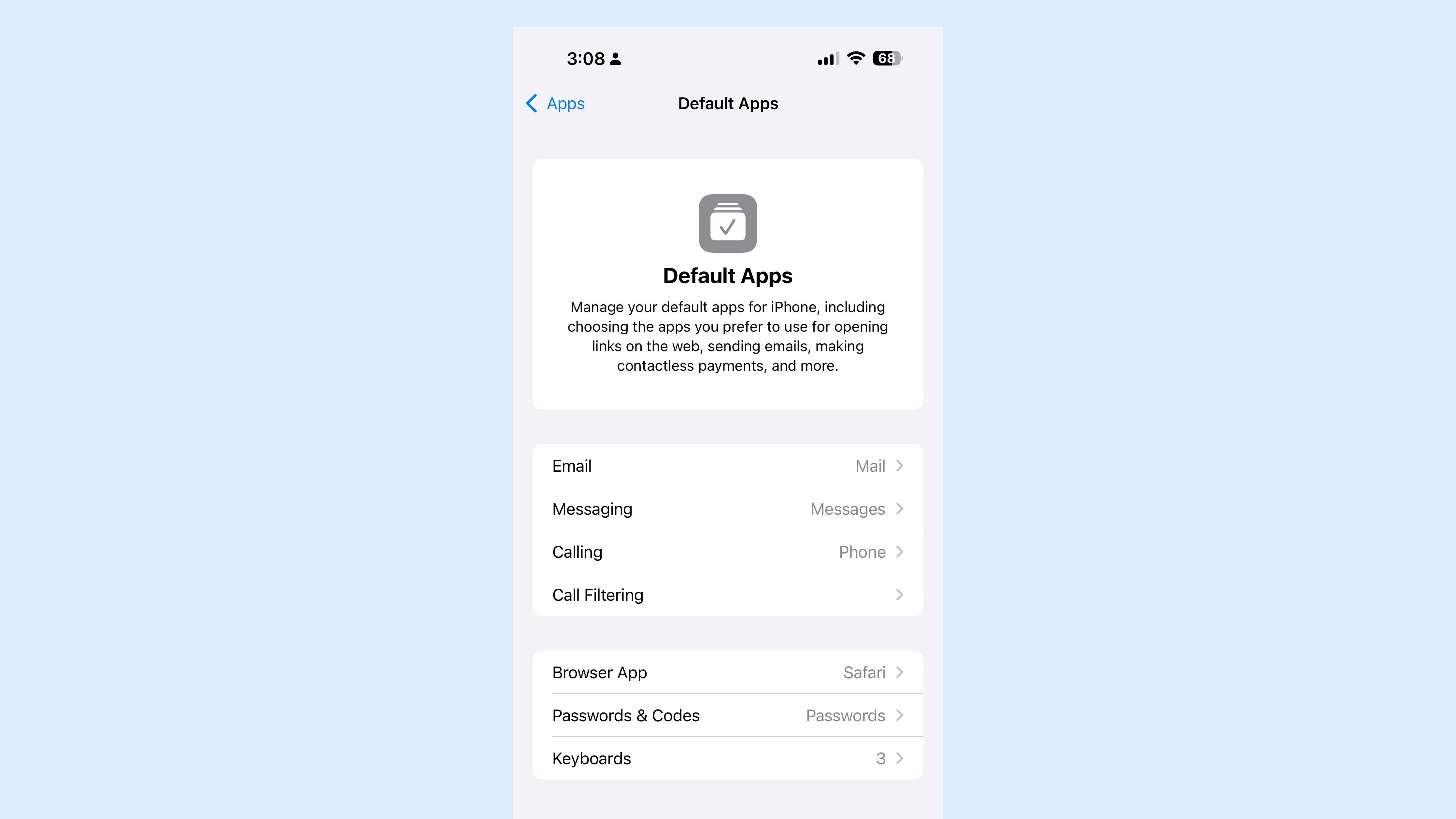

The findings in this post highlight a lack of standardization in how different model families handle safety and content filtering.

One key aspect of understanding how a language model behaves is the analysis of its refusals: instances where the model refuses or fails to engage with a particular instruction.

In this post, we evaluate and compare refusal behavior across a set of top language models, highlighting their relative strengths, weaknesses, and unique characteristics.

By analyzing refusal behaviors, we aim to help model developers and product teams improve both model reliability and end-user satisfaction.

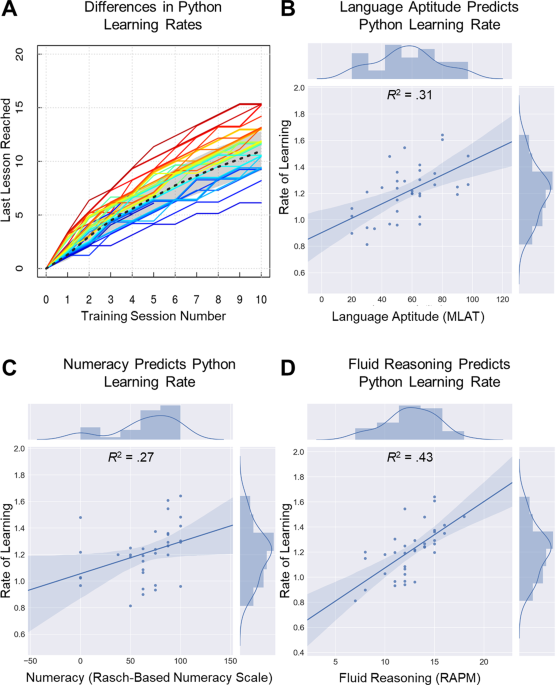

To reduce the risk of data contamination, we developed a private test set of 400 prompts designed to evaluate various aspects of LLM reasoning. These prompts covered 8 distinct categories, with 50 prompts per category.

While the primary purpose of this dataset is to evaluate reasoning capabilities, here we used it to assess refusal behaviors across these prompt categories. (A direct analysis of the models' performance on these reasoning tasks is coming soon...)
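
As a rough illustration of how per-category refusal rates could be tallied over a dataset like this, here is a minimal Python sketch. It is not the evaluation harness used for this post: the record layout, the `refusal_rates` helper, and the category names are all hypothetical, and the toy data is made up purely to show the calculation.

```python
from collections import Counter


def refusal_rates(records):
    """Return {category: fraction of prompts refused} for one model.

    `records` is an iterable of (category, refused) pairs, where `refused`
    is a bool. This layout is an assumption made for illustration only.
    """
    totals = Counter()
    refusals = Counter()
    for category, refused in records:
        totals[category] += 1
        if refused:
            refusals[category] += 1
    # Divide refusals by total prompts seen in each category.
    return {category: refusals[category] / totals[category] for category in totals}


# Toy records with placeholder category names (not results from this post).
toy_records = [
    ("category_a", True),
    ("category_a", False),
    ("category_b", False),
    ("category_b", False),
]

rates = refusal_rates(toy_records)
print(rates)
```

With a balanced dataset (the same number of prompts in every category, as described above), these per-category rates can be compared directly across categories and across models without reweighting.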