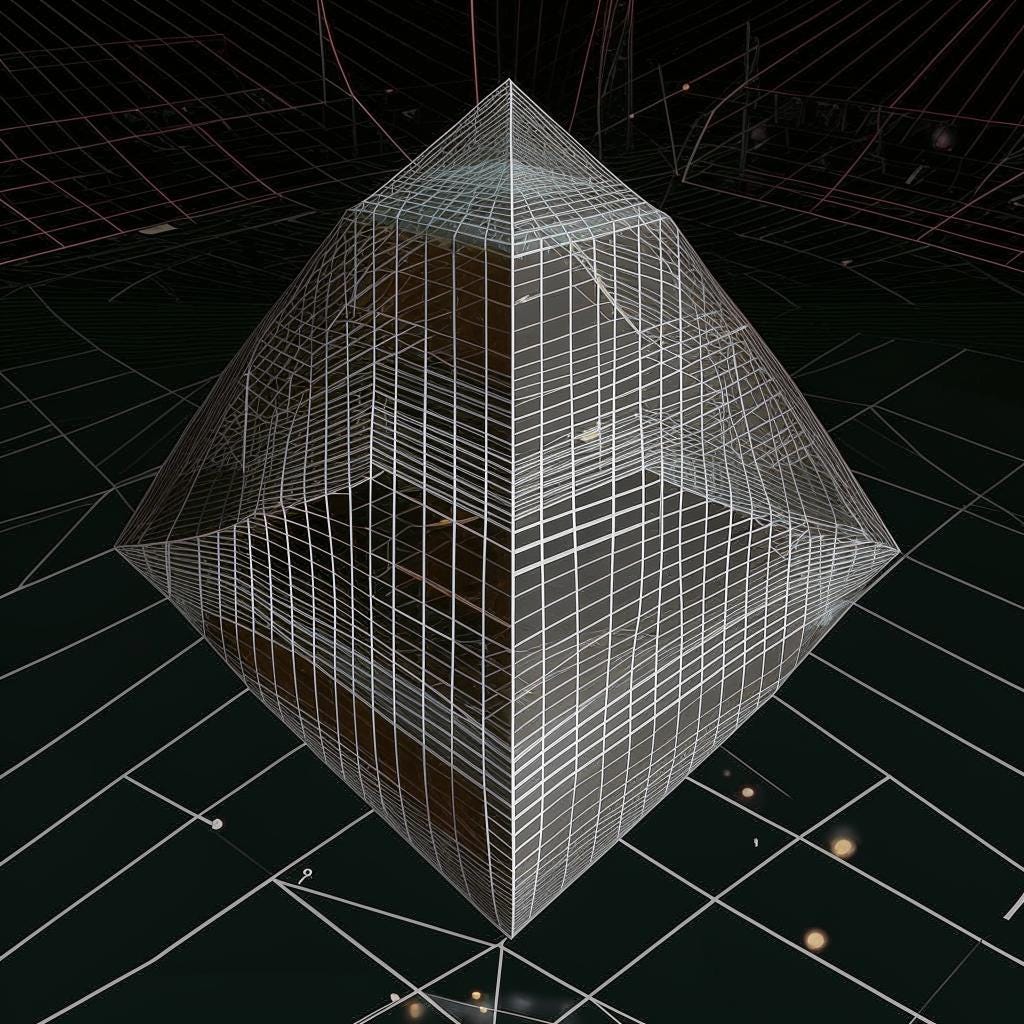

LLM as an n-dimensional Object in n-dimensional Space

It seems that any language model, whether it is built on a symbolic or a neural network architecture, can be conceptualised as an n-dimensional object in an n-dimensional space. Initially, this object is constructed from training sequences (datasets).

Once the topology of such an object (its surface) is sufficiently formed, “understanding” of a prompt can be considered as an approximation of the prompt by the surface of this n-dimensional object. In some cases the fit may be perfect, but in others it will be necessary to apply some transformations to find the right approximation (a region of the surface with a similar topology). Fine-tuning and retraining can then be considered modifications of the surface.

What about predictive capability? It is ensured by the shape of the surface of such an object. Generative ability, in turn, comes from being able to move along the surface of the n-dimensional object from any point to other points of the surface in any permissible direction.
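To make the idea of "moving along the surface" a little more concrete, here is a toy sketch. It assumes, purely for illustration, that the surface is the unit sphere in n dimensions; a real model's surface would be far less regular, and nothing here is meant as a description of how any actual language model works. The names `normalize`, `step_on_surface` and `walk` are hypothetical helpers introduced for this example.

```python
import math
import random


def normalize(v):
    """Project a non-zero vector onto the unit sphere (our stand-in 'surface')."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def step_on_surface(point, step_size, rng):
    """Take one small step from `point` that stays on the surface."""
    # Pick a random direction in the surrounding n-dimensional space.
    direction = [rng.gauss(0.0, 1.0) for _ in point]
    # Keep only the part tangent to the surface at `point`
    # (the "permissible" directions in this toy setting).
    dot = sum(d * p for d, p in zip(direction, point))
    tangent = [d - dot * p for d, p in zip(direction, point)]
    # Move a little along the tangent, then project back onto the surface.
    moved = [p + step_size * t for p, t in zip(point, tangent)]
    return normalize(moved)


def walk(start, steps, step_size=0.1, seed=0):
    """Generate a path of points on the surface, starting near `start`."""
    rng = random.Random(seed)
    # Projecting the start onto the surface plays the role of
    # approximating a "prompt" by the nearest surface point.
    path = [normalize(start)]
    for _ in range(steps):
        path.append(step_on_surface(path[-1], step_size, rng))
    return path


if __name__ == "__main__":
    for p in walk([1.0, 0.5, 0.0, 0.0], steps=5):
        print([round(x, 3) for x in p])
```

Every point produced by `walk` lies on the surface, but the choice of which direction to take at each step comes from outside the surface itself (here, a random number generator).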

From this, it can be concluded that the selection of such points should not be determined solely by the language model itself but rather by some generalisation derived from it. This raises the question of whether a language model alone is adequate for the emergence of cognition and reasoning. Mathematically, it seems that it is not.