Why I’m excited about the Hierarchical Reasoning Model

Since the arrival of ChatGPT, I’ve felt that progress in AI has been largely incremental. After all, even ChatGPT was (and is) mostly a scaled-up Transformer, an architecture introduced by Vaswani et al. in 2017.

The belief that AI is advancing relatively slowly is a radical and unpopular opinion these days, and it’s been frustrating to defend that view while most of the Internet talks excitedly about the latest large language models (LLMs).

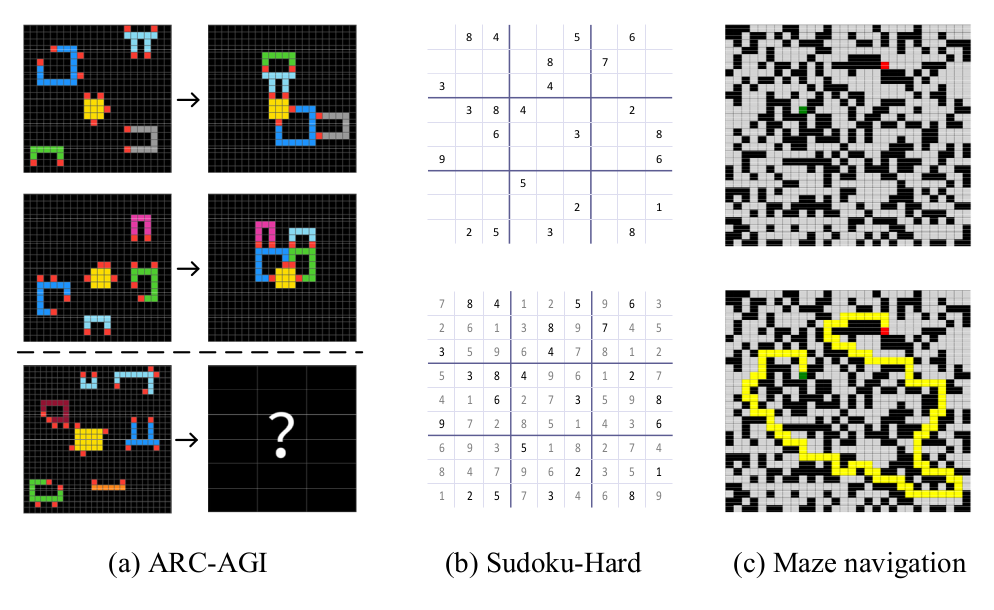

But in my opinion, the new Hierarchical Reasoning Model (HRM) by Wang et al. is a genuine leap forward. Theirs is the first model that seems to have the ability to think. In addition, and perhaps because of this capability, the model is extremely efficient in terms of required training samples, trainable parameter count and computational resources such as memory. This is because it uses relatively local gradient propagation instead of Back-Propagation-Through-Time (BPTT) or an equivalent, so training does not need to store every intermediate state of the model’s reasoning process.
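To make the BPTT point more concrete, here is a minimal sketch on a toy scalar recurrence. The tanh update, the squared-error loss and every function name below are my own assumptions for illustration; this is not HRM’s architecture or code. Exact BPTT has to keep every intermediate state to compute the gradient, whereas a local, one-step gradient differentiates only the final update and can throw away the history as it goes.

```python
import math


def unroll(w, x, z0, steps):
    """Run the toy recurrence z_t = tanh(w * z_{t-1} + x), keeping every state."""
    zs = [z0]
    for _ in range(steps):
        zs.append(math.tanh(w * zs[-1] + x))
    return zs


def grad_bptt(w, x, z0, steps, target):
    """Exact dL/dw for L = 0.5 * (z_T - target)**2 via backprop through time.

    Stores all intermediate states, so memory grows linearly with `steps`.
    """
    zs = unroll(w, x, z0, steps)
    dz = zs[-1] - target  # dL/dz_T
    dw = 0.0
    for t in range(steps, 0, -1):
        # z_t = tanh(a_t) with a_t = w * z_{t-1} + x
        da = dz * (1.0 - zs[t] ** 2)  # dL/da_t
        dw += da * zs[t - 1]          # contribution of step t to dL/dw
        dz = da * w                   # pass the gradient back to z_{t-1}
    return dw


def grad_one_step(w, x, z0, steps, target):
    """One-step approximation: treat z_{T-1} as a constant and differentiate
    only the last update, so no history needs to be stored (requires steps >= 1).
    """
    z_prev = z0
    for _ in range(steps - 1):
        z_prev = math.tanh(w * z_prev + x)  # forward only, nothing kept
    z_last = math.tanh(w * z_prev + x)
    return (z_last - target) * (1.0 - z_last ** 2) * z_prev


if __name__ == "__main__":
    w, x, z0, y = 0.5, 0.3, 0.0, 0.9
    print("BPTT gradient:    ", grad_bptt(w, x, z0, 16, y))
    print("one-step gradient:", grad_one_step(w, x, z0, 16, y))
```

The trade-off is that the one-step gradient is only an approximation: it keeps the contribution of the final step and drops everything earlier. The usual argument for why this is acceptable is that the recurrence is close to settling on a fixed point, where the earlier terms matter less.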

Researchers have been trying for a while now to build models with stronger reasoning capabilities. Some improvement has come from Chain-of-Thought (CoT) prompting and related techniques, which induce LLMs to analyze a problem systematically while generating their response. Researchers have also tried adding “thinking tokens” to encourage more deliberative output, and they have designed “Agentic AI architectures”, which aim to let AIs tackle a broad range of user problems more independently. However, agentic architectures almost always rely on human engineers to break broad problems down into specific sub-problems that the AI will find more manageable, helping it stay focused on the task. Who is doing the thinking here?