Using clustering and summarization algorithms for news aggregation

The internet is full of media tricks, such as clickbait articles, unnecessarily long texts, and false information, that waste people's most valuable resource: time. Readers need concise articles with high informational value. This post explores several clustering models, paired with summarization algorithms, for building a news aggregator website.

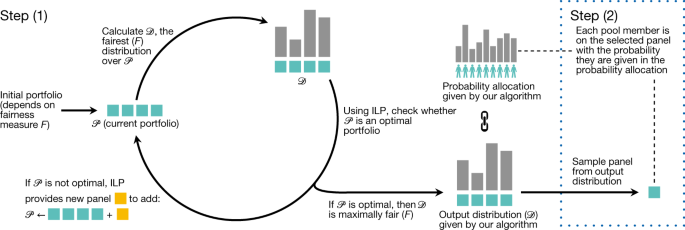

The main idea of this aggregation task is to change how news is presented so that it is short, informative, and ranked by importance. This can be achieved by showing the most popular clusters of information first and displaying the top 3 sentences that best describe each cluster. The problem can be split into five distinct parts.
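To make the ranking and "top 3 sentences" idea concrete, here is a minimal sketch. It assumes that "most popular" means the cluster containing the most articles, and it uses a simple word-frequency heuristic for extractive summarization: each sentence is scored by the average corpus frequency of its words within the cluster. This is an illustrative stand-in, not the summarization algorithm used later in this post, and the function names (`summarize_cluster`, `build_front_page`) are hypothetical.

```python
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(text: str) -> list[str]:
    # In Python 3, \w matches Unicode letters, including Cyrillic.
    return re.findall(r"\w+", text.lower())


def summarize_cluster(articles: list[str], k: int = 3) -> list[str]:
    """Return the k highest-scoring sentences, kept in their original order."""
    sentences: list[str] = []
    for article in articles:
        for s in split_sentences(article):
            if s not in sentences:  # skip verbatim duplicates across sources
                sentences.append(s)
    # Word frequencies over the whole cluster: words repeated by many
    # sources are assumed to describe the shared story.
    freq = Counter(w for s in sentences for w in tokenize(s))

    def score(s: str) -> float:
        words = tokenize(s)
        # Average rather than sum, so long sentences are not favoured.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


def build_front_page(clusters: list[list[str]], k: int = 3) -> list[tuple[int, list[str]]]:
    """Order clusters by article count (largest first) and summarize each."""
    ordered = sorted(clusters, key=len, reverse=True)
    return [(len(c), summarize_cluster(c, k)) for c in ordered]
```

Averaging the word scores is a design choice; summing them would tend to pick the longest sentences instead of the most representative ones.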

I have compiled a list of around 40 RSS URLs from the most popular Macedonian-language media sources, covering local, economic, political, and world news. Each feed contains about 20 articles on average, so there are approximately 800 articles for further processing. The scraping is done with BeautifulSoup4, and regular expressions are used to clean the text. The process is straightforward, so I will not include the code here, but you can find it on my GitHub.
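For readers who want a rough idea of what this step involves, the sketch below parses an RSS feed and cleans the text with regular expressions. It is not the code from the GitHub repository: to stay dependency-free it swaps BeautifulSoup4 for the standard library's `xml.etree.ElementTree`, and it assumes a standard RSS 2.0 layout with `<item>` elements containing `<title>`, `<link>`, and `<description>`. The helper names are hypothetical.

```python
import html
import re
import xml.etree.ElementTree as ET
from urllib.request import urlopen

TAG_RE = re.compile(r"<[^>]+>")  # leftover HTML tags inside descriptions
WS_RE = re.compile(r"\s+")       # runs of whitespace, including newlines


def clean_text(raw: str) -> str:
    text = html.unescape(raw)     # decode entities such as &amp; or &quot;
    text = TAG_RE.sub(" ", text)  # replace tags with spaces
    return WS_RE.sub(" ", text).strip()


def parse_rss(xml_text) -> list[dict]:
    """Extract title, link and cleaned description from every <item>.

    Accepts the feed as str or bytes (ET.fromstring handles both).
    """
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": clean_text(item.findtext("title", default="")),
            "link": item.findtext("link", default="").strip(),
            "description": clean_text(item.findtext("description", default="")),
        })
    return items


def fetch_feed(url: str, timeout: float = 10.0) -> list[dict]:
    # Pass raw bytes so the parser honours the feed's declared encoding.
    with urlopen(url, timeout=timeout) as resp:
        return parse_rss(resp.read())
```

Looping `fetch_feed` over the list of feed URLs and concatenating the results would yield the pool of roughly 800 articles described above. Real feeds vary (Atom feeds, namespaces, missing fields), which is one reason a lenient parser such as BeautifulSoup4 is a practical choice.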

Clustering algorithms are unsupervised machine learning models that group similar data points together. In this news aggregation problem, that means grouping news articles that cover the same topic. Several algorithms can be used for this task.
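As a baseline for comparison, here is a minimal sketch of one simple option: represent each article as a TF-IDF vector and apply single-pass "leader" clustering, where an article joins the most similar existing cluster if the cosine similarity to that cluster's first article exceeds a threshold, and otherwise starts a new cluster. This is not necessarily one of the models evaluated in this post. The threshold of 0.3 is an arbitrary assumption that would need tuning on real headlines, and all function names are hypothetical. The smoothed IDF formula mirrors the default used by scikit-learn's `TfidfVectorizer`.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; \w also matches Cyrillic letters in Python 3.
    return re.findall(r"\w+", text.lower())


def tfidf_vectors(docs: list[str]) -> list[dict]:
    """Return one L2-normalised sparse TF-IDF vector (a dict) per document."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(w for toks in tokenized for w in set(toks))
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        # Smoothed IDF: a term present in every document gets an IDF of 1.
        vec = {w: c * (math.log((1 + n) / (1 + df[w])) + 1) for w, c in tf.items()}
        norm = math.sqrt(sum(x * x for x in vec.values())) or 1.0
        vectors.append({w: x / norm for w, x in vec.items()})
    return vectors


def cosine(a: dict, b: dict) -> float:
    # Both vectors are unit length, so their dot product is the cosine.
    if len(a) > len(b):
        a, b = b, a
    return sum(x * b.get(w, 0.0) for w, x in a.items())


def leader_cluster(docs: list[str], threshold: float = 0.3) -> list[list[int]]:
    """Single-pass clustering; returns lists of document indices."""
    vectors = tfidf_vectors(docs)
    clusters: list[list[int]] = []
    leaders: list[dict] = []  # vector of the first article in each cluster
    for i, vec in enumerate(vectors):
        sims = [cosine(vec, lead) for lead in leaders]
        best = max(range(len(sims)), key=sims.__getitem__, default=None)
        if best is not None and sims[best] >= threshold:
            clusters[best].append(i)
        else:
            clusters.append([i])
            leaders.append(vec)
    return clusters
```

Unlike k-means, this approach does not require choosing the number of clusters in advance, which suits a daily news feed where the number of stories varies. Its drawback is that results depend on the order in which articles arrive.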
