Exploring LoRA — Part 1: The Idea Behind Parameter Efficient Fine-Tuning and LoRA

Pre-trained large language models are trained on vast amounts of internet data, which gives them strong performance across a broad range of tasks. In most real-world scenarios, however, the model also needs expertise in a particular, specialized domain. Many applications in natural language processing and computer vision rely on adapting a single large-scale pre-trained model to multiple downstream applications. This adaptation is typically done through fine-tuning, which customizes the model to a specific domain and task. Fine-tuning is therefore vital for achieving the highest levels of performance and efficiency on downstream tasks, with the pre-trained model serving as a robust foundation for the process.

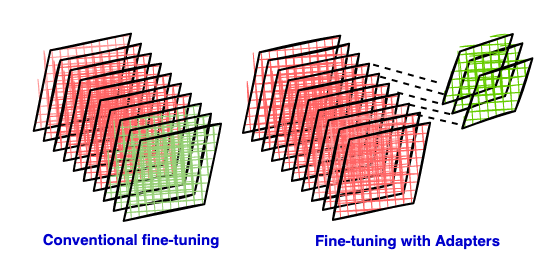

In conventional fine-tuning of deep neural networks, the top layers of the network are updated while the lower layers remain fixed, or “frozen”. The top layers need to change because the label spaces and loss functions of downstream tasks often differ from those used in pre-training. A notable drawback of this approach is that the resulting model has the same number of parameters as the original, which can be very large. Creating and storing a separate full model for each downstream task is therefore inefficient with contemporary large language models (LLMs).
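
To make the drawback concrete, here is a rough parameter-counting sketch in plain Python. The layer names and sizes are hypothetical, chosen only for illustration and not taken from any real model. It shows that freezing the lower layers reduces how many parameters are trained, but not how many have to be stored for each task.

```python
# Toy bookkeeping for conventional fine-tuning.
# All layer names and sizes below are hypothetical, for illustration only.
LAYERS = [
    ("embedding", 50_000 * 512),   # vocabulary x hidden size
    ("block_1", 4 * 512 * 512),    # lower layer, kept frozen
    ("block_2", 4 * 512 * 512),    # top layer, updated during fine-tuning
    ("task_head", 512 * 10 + 10),  # new classifier: weights plus biases
]


def count_parameters(layers, trainable_names):
    """Return (total, trainable) parameter counts for a list of (name, size)."""
    total = sum(size for _, size in layers)
    trainable = sum(size for name, size in layers if name in trainable_names)
    return total, trainable


def storage_for_tasks(layers, num_tasks):
    """Parameters stored when every task keeps its own full copy of the model."""
    return num_tasks * sum(size for _, size in layers)


# Only the top block and the task head are updated; the rest stays frozen.
total, trainable = count_parameters(LAYERS, {"block_2", "task_head"})

print(f"total parameters:     {total:,}")
print(f"trainable parameters: {trainable:,}")
print(f"stored for 5 tasks:   {storage_for_tasks(LAYERS, 5):,}")
```

Even though only a small fraction of the parameters is trained in this toy setup, each fine-tuned model is still a full-size copy, so storage grows linearly with the number of tasks.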