Understanding CLIP for vision-language models

This article explains CLIP, a model that laid the foundation for most vision encoders in vision-language models. CLIP was one of the first approaches to integrate visual understanding into language models.

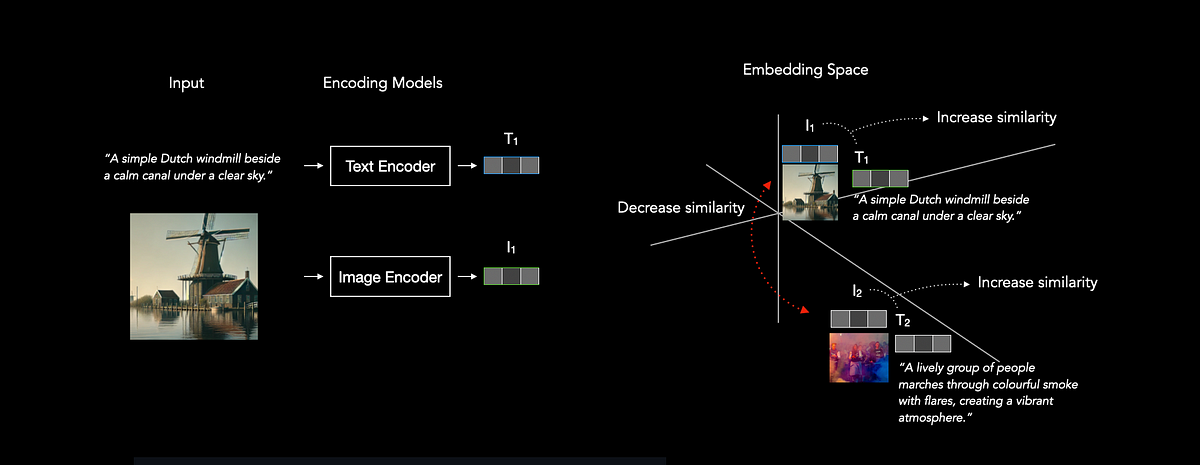

The key idea is to bring the text that describes an image close to the image itself in the embedding space (often referred to as latent space). We take the text embedding and the corresponding image embedding and train the model so that they end up very close in this space, meaning they represent a similar concept.

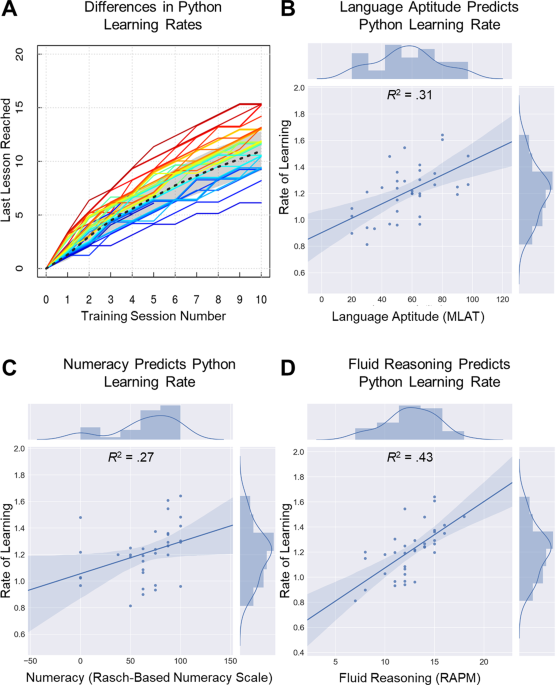

The following illustration demonstrates this process. Note that real embeddings are n-dimensional; I have chosen 3-dimensional embeddings purely for illustration purposes.
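
To make "close in the embedding space" a bit more concrete, here is a minimal sketch that measures closeness with cosine similarity, the measure CLIP uses to compare image and text embeddings. The three vectors and their names (`image_dog`, `text_dog`, `text_car`) are made-up toy values, not outputs of a real model; they only mimic what a trained model would ideally produce.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional embeddings, chosen by hand for illustration.
# Real CLIP embeddings have far more dimensions.
image_dog = [0.9, 0.1, 0.2]  # stands in for the embedding of a dog photo
text_dog = [0.8, 0.2, 0.1]   # stands in for "a photo of a dog"
text_car = [0.1, 0.9, 0.3]   # stands in for "a photo of a car"

sim_match = cosine_similarity(image_dog, text_dog)
sim_mismatch = cosine_similarity(image_dog, text_car)

print(f"matching pair:   {sim_match:.3f}")
print(f"mismatched pair: {sim_mismatch:.3f}")
```

With these toy values, the matching image-text pair scores a much higher similarity than the mismatched one, which is exactly the situation training is meant to produce.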

CLIP is one of the foundation models in self-supervised learning. But how do we maximise the similarity between a correct image-text pair?

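The answer is a contrastive loss called InfoNCE. The key idea behind InfoNCE is to increase the similarity between correct image-text pairs while penalising high similarity between incorrect pairs. For a batch of N image-text pairs, every image can be compared with every text, which gives N² possible pairings. Only N of them are correct, so there are N² − N incorrect pairings in total. The following code gives an overview of what is happening: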