Decoding Vector Search: The Secret Sauce Behind Smarter Data Retrieval

Memgraph recently released vector search as a new feature. We covered it in Simplify Data Retrieval with Memgraph’s Vector Search.

I wanted to take a moment to go back and explain the backstory of vector search and why it’s important, from the perspective of a technical content writer whose job is to translate engineerish into plain English. Vector search is growing in popularity because of its role in AI-driven applications and semantic search, and because of the need to process massive amounts of unstructured data effectively.

With the rise of large-scale, unstructured datasets and modern AI applications, vector search makes it possible to query and analyze data by context and meaning, overcoming the limitations of exact-match lookups.

However, there’s a trade-off: vector search can yield non-deterministic results. As new data is added, the results for the same query vector might change significantly due to shifts in the vector space.
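
To make that trade-off concrete, here is a minimal sketch of how the "closest" results for one query can change once a new item is stored. Everything in it is an assumption made for illustration: the two-dimensional vectors are invented, the names `doc_a` to `doc_d` and the helper `top_k` are hypothetical, and it uses an exact brute-force scan rather than the approximate index a real vector database would likely use. It only shows the effect of adding data, not the extra variation an approximate index can introduce.

```python
import math

def top_k(query, items, k=2):
    """Return the names of the k stored vectors closest to the query."""
    # math.dist computes the straight-line (Euclidean) distance between two points.
    ranked = sorted(items, key=lambda name: math.dist(query, items[name]))
    return ranked[:k]

# Invented vectors standing in for stored documents.
items = {
    "doc_a": [1.0, 0.0],
    "doc_b": [0.7, 0.7],
    "doc_c": [0.0, 1.0],
}
query = [0.9, 0.3]

before = top_k(query, items)

# A new document arrives and happens to sit very close to the query.
items["doc_d"] = [0.9, 0.35]

after = top_k(query, items)

print("before:", before)
print("after: ", after)
```

The query never changes, but `doc_d` takes the top spot and pushes `doc_b` out of the top two. That is the kind of shift to expect when the same question is asked against a growing dataset.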

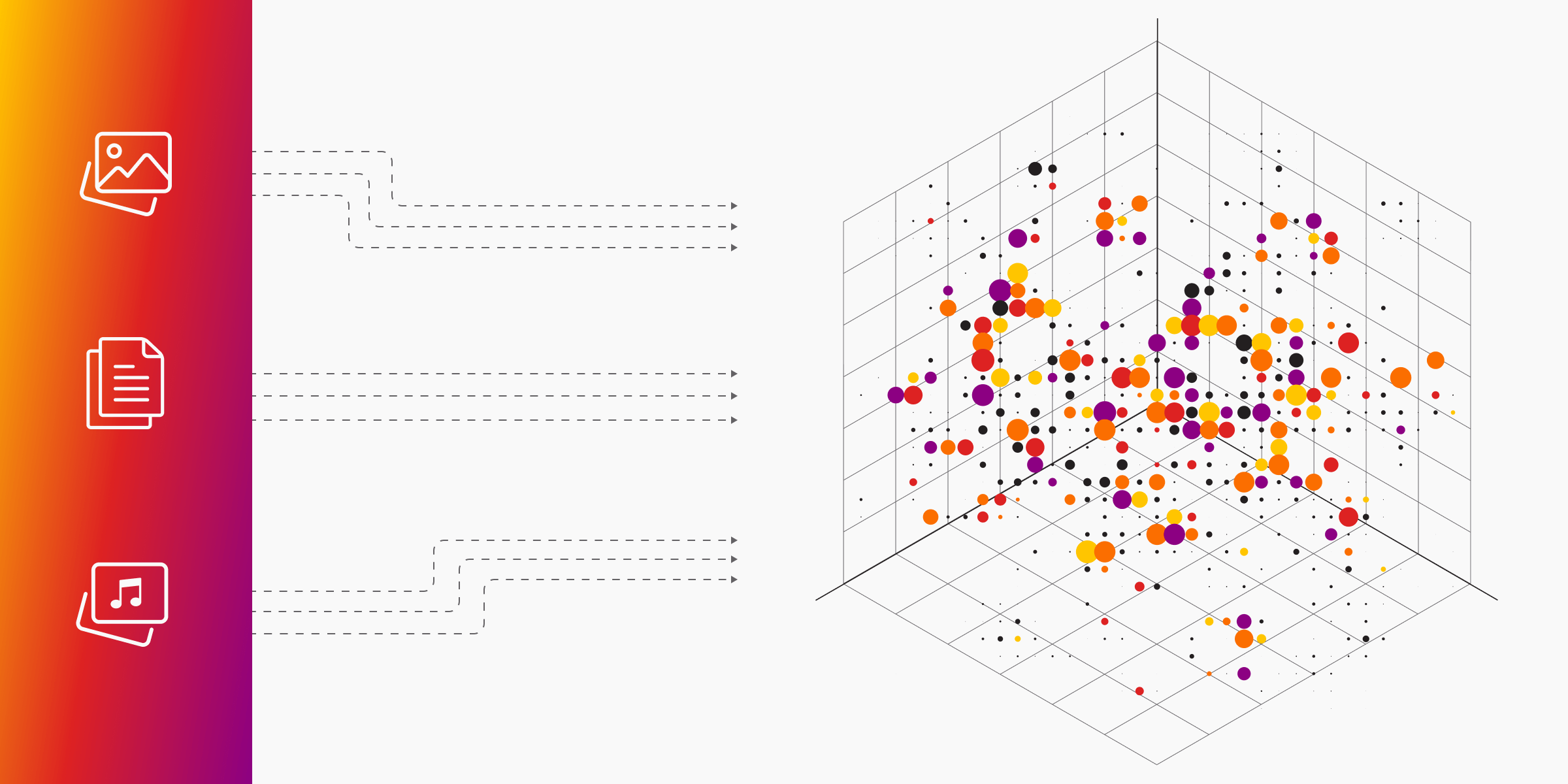

The magic lies in embedding models powered by neural networks. Vector embeddings are mathematical representations of data (like text, images, or audio) in a high-dimensional space. Embedding models transform unstructured data into these vectors so that machines can process and compare it. Essentially, embeddings capture the context and meaning of data in a form that is computationally manageable and useful.
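
Here is a small sketch of what "meaning as a vector" looks like in practice. The three-dimensional vectors below are made up by hand for illustration; a real embedding model would produce vectors with hundreds or thousands of dimensions, and the function name `cosine_similarity` is just a helper defined here. Cosine similarity is one common way to compare embeddings: the closer the score is to 1, the more the two vectors point in the same direction.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means very similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-picked toy "embeddings", NOT the output of any real model.
embeddings = {
    "cat":    [0.90, 0.80, 0.10],
    "kitten": [0.85, 0.90, 0.15],
    "car":    [0.10, 0.20, 0.95],
}

sim_kitten = cosine_similarity(embeddings["cat"], embeddings["kitten"])
sim_car = cosine_similarity(embeddings["cat"], embeddings["car"])

print(f"cat vs kitten: {sim_kitten:.3f}")
print(f"cat vs car:    {sim_car:.3f}")
```

With these toy numbers, "cat" scores much higher against "kitten" than against "car", which is the intuition behind comparing data by meaning instead of by exact wording.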