Windows Agent Arena: Evaluating Multi-modal OS Agents at Scale

Large language models (LLMs) show remarkable potential to act as computer agents, enhancing human productivity and software accessibility in multi-modal tasks that require planning and reasoning. However, measuring agent performance in realistic environments remains a challenge since:

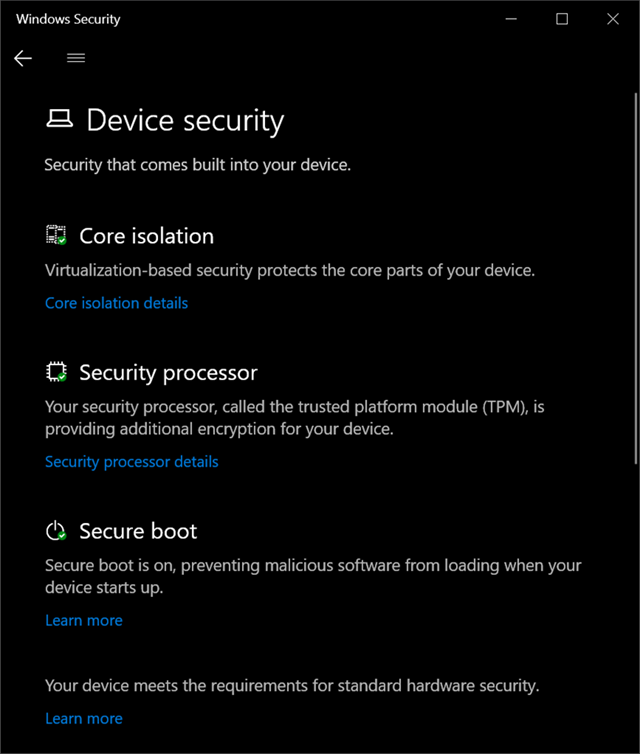

To address these challenges, we introduce Windows Agent Arena (WAA): a reproducible, general environment focused exclusively on the Windows operating system (OS), in which agents operate freely within a real Windows OS and use the same wide range of applications, tools, and web browsers available to human users when solving tasks. We adapt the OSWorld framework to create 150+ diverse Windows tasks across representative domains that require agent abilities in planning, screen understanding, and tool usage. The benchmark is also scalable and can be parallelized in Azure to complete a full benchmark evaluation in as little as 20 minutes.
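The parallelization idea can be illustrated with a minimal sketch: the task list is split into shards, each shard is handled by an independent worker, and the per-task outcomes are merged into one success rate. This is not WAA's actual implementation. The names `shard_tasks`, `run_shard`, `evaluate_parallel`, and `success_rate` are hypothetical, the `run_task` callback stands in for running one task inside a Windows VM, and local threads stand in for Azure compute instances.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def shard_tasks(task_ids: Sequence[str], num_workers: int) -> list[list[str]]:
    """Split task IDs round-robin into num_workers shards of near-equal size."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    return [list(task_ids[i::num_workers]) for i in range(num_workers)]


def run_shard(shard: Sequence[str], run_task: Callable[[str], bool]) -> dict[str, bool]:
    """Run every task in one shard sequentially and record success or failure."""
    return {task_id: run_task(task_id) for task_id in shard}


def evaluate_parallel(
    task_ids: Sequence[str],
    run_task: Callable[[str], bool],
    num_workers: int,
) -> dict[str, bool]:
    """Evaluate all tasks with one worker per shard (threads here, as an assumption)."""
    shards = shard_tasks(task_ids, num_workers)
    results: dict[str, bool] = {}
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Each shard is independent, so workers never share task state.
        for shard_result in pool.map(lambda s: run_shard(s, run_task), shards):
            results.update(shard_result)
    return results


def success_rate(results: dict[str, bool]) -> float:
    """Fraction of tasks that succeeded; 0.0 when there are no results."""
    return sum(results.values()) / len(results) if results else 0.0


if __name__ == "__main__":
    # Placeholder task IDs and a fake runner, for illustration only.
    tasks = [f"task_{i}" for i in range(10)]
    fake_runner = lambda task_id: int(task_id.split("_")[1]) % 2 == 0
    outcome = evaluate_parallel(tasks, fake_runner, num_workers=4)
    print(f"success rate: {success_rate(outcome):.1%}")
```

Because tasks are independent, wall-clock time in this sketch is bounded by the slowest shard rather than by the sum of all task durations, which is the property that makes a short full-benchmark run plausible when enough workers are available.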

To demonstrate WAA's capabilities, we also introduce a new multi-modal agent, Navi, which achieves a success rate of 19.5%, compared to a human success rate of 74.5%. In addition, we show Navi's strong performance on Mind2Web, a popular web-based benchmark.