Automated Malfare - discriminatory effects of welfare automation (Relive)

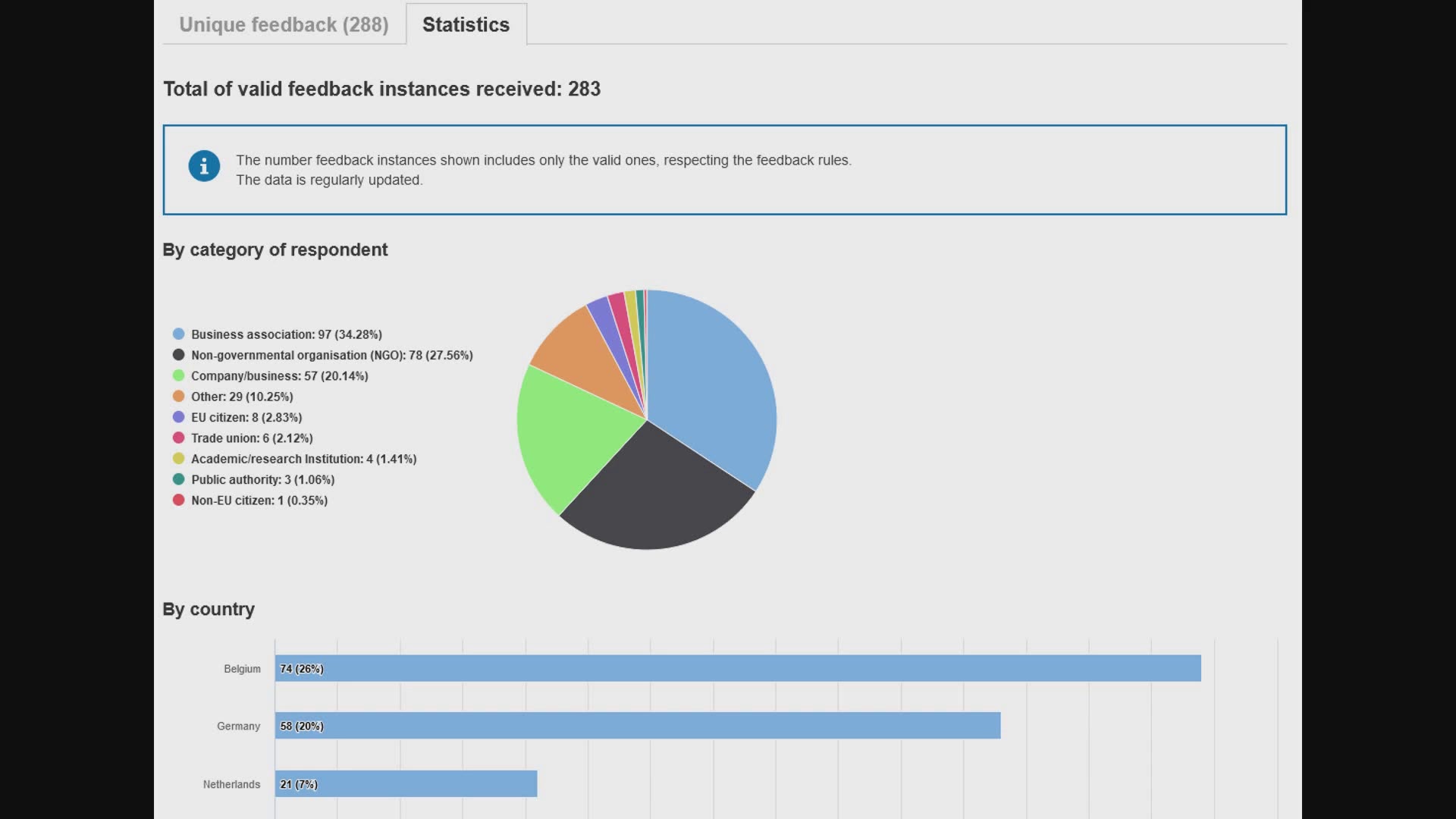

An increasing number of countries are implementing algorithmic decision-making and fraud detection systems within their social benefits systems. Instead of improving the fairness of decisions and ensuring effective procedures, these systems often reinforce preexisting discrimination and injustice. The talk presents case studies of automation in the welfare systems of the Netherlands, India, Serbia and Denmark, based on research by Amnesty International.

Social security benefits provide a safety net for people who depend on support to make a living. For those affected, poverty often goes hand in hand with other forms of discrimination. But what happens when states decide to use social benefit systems as a playground for automated decision-making? These systems are promoted as making public services fairer and more effective, but a closer investigation reveals that they reinforce discrimination: on the one hand because of the kind of algorithms used and the quality of the input data, and on the other hand because of the large-scale use of mass surveillance techniques to generate the data that feeds them.

Amnesty International has conducted case studies in the Netherlands, India, Serbia and, most recently, Denmark. In the Netherlands, the fraud detection algorithm under investigation in 2021 was found to be clearly discriminatory. The algorithm used nationality as a risk factor, and the automated decisions went largely unchallenged by the authorities, leading to severe and unjustified subsidy cuts for many families. The more recent Danish system takes a more holistic approach: it draws on a huge amount of private data and dozens of algorithms, resulting in a system that could well fall under the definition of a social scoring system in the EU's own AI law, which prohibits such systems. In the cases of India and Serbia, a lack of transparency, problems with data integrity, automation bias and increased surveillance have also led to severe human rights violations.
