‘Fighting fire with fire’ — using LLMs to combat LLM hallucinations

Karin Verspoor is in the School of Computing Technologies, RMIT University, Melbourne, Victoria 3000, Australia and in the School of Computing and Information Systems, University of Melbourne, Melbourne, Victoria 3010, Australia.


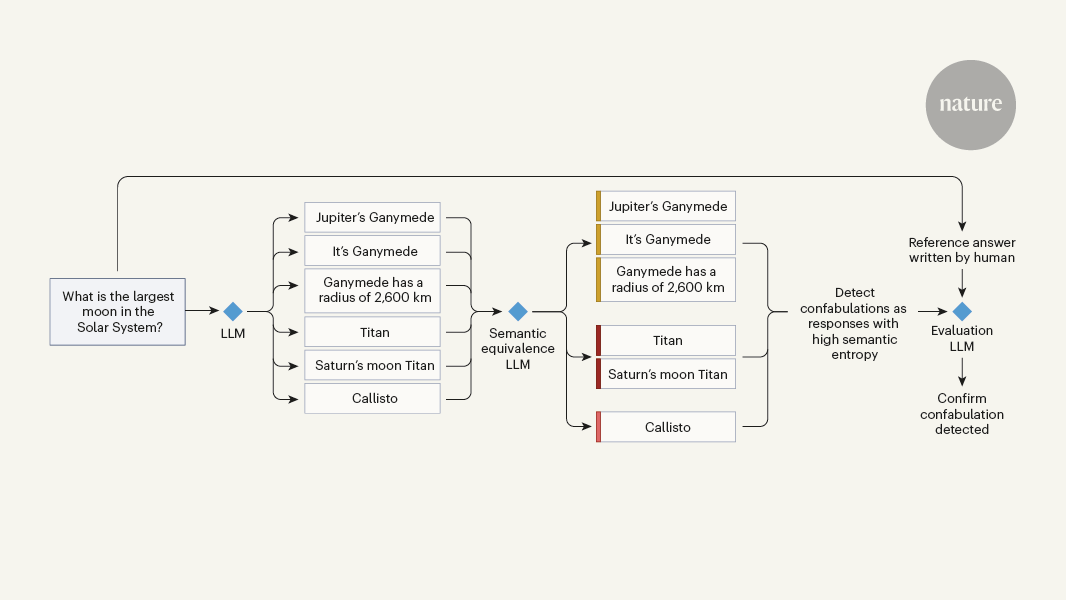

Text-generation systems powered by large language models (LLMs) have been enthusiastically embraced by busy executives and programmers alike, because they provide easy access to extensive knowledge through a natural conversational interface. Scientists too have been drawn to both using and evaluating LLMs, finding applications for them in drug discovery [1], in materials design [2] and in proving mathematical theorems [3]. A key concern for such uses relates to the problem of ‘hallucinations’, in which the LLM responds to a question (or prompt) with text that seems like a plausible answer, but is factually incorrect or irrelevant [4]. How often hallucinations are produced, and in what contexts, remains to be determined, but it is clear that they occur regularly and can lead to errors and even harm if undetected. In a paper in Nature, Farquhar et al. [5] tackle this problem by developing a method for detecting a specific subclass of hallucinations, termed confabulations.

Zhang, T., Kishore, V., Wu, F., Weinberger, K. Q. & Artzi, Y. In 8th Int. Conf. Learning Represent. (ICLR, 2020); available at https://openreview.net/forum?id=SkeHuCVFDr
