How should we test AI for human-level intelligence? OpenAI’s o3 electrifies quest

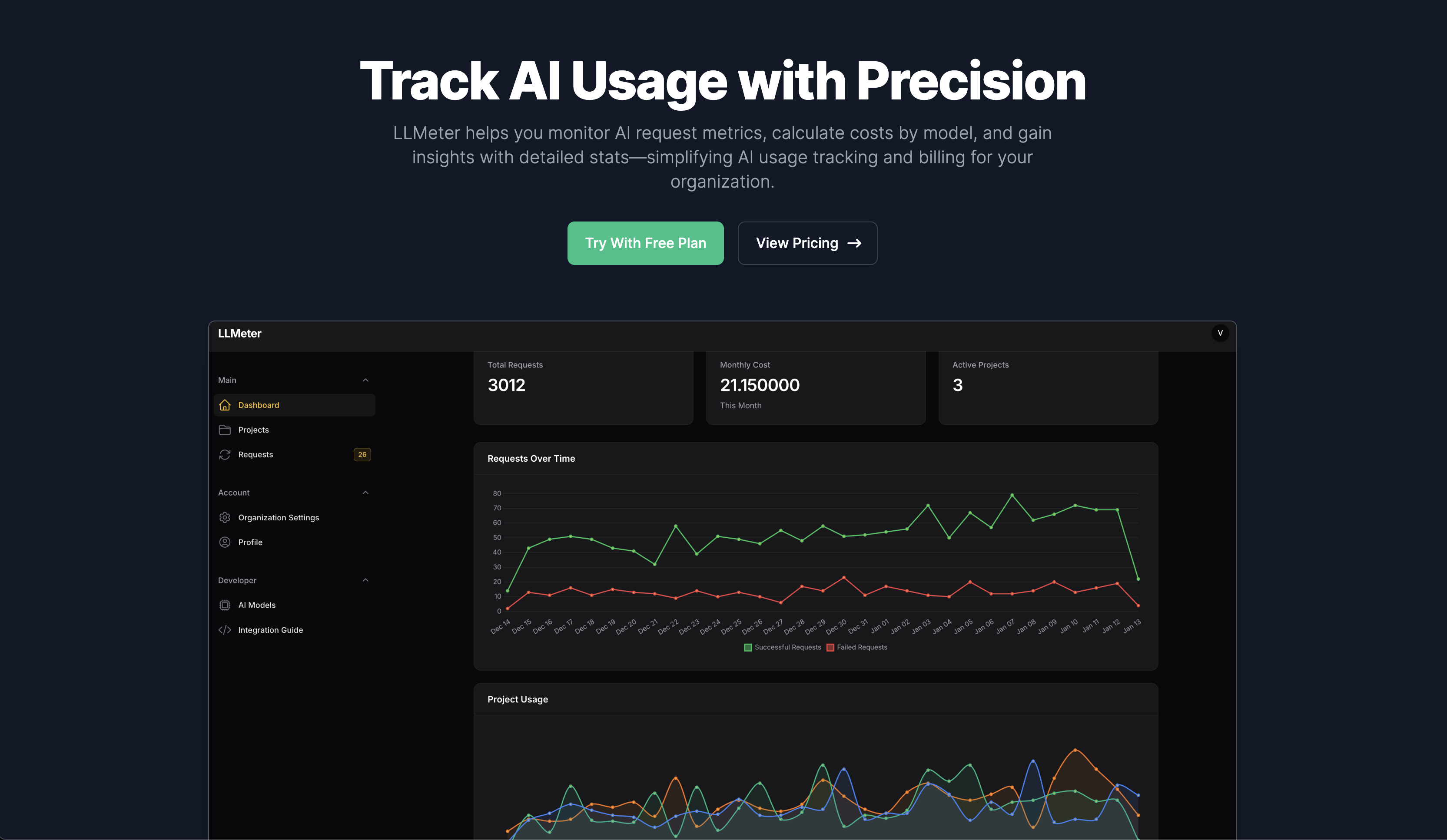

The technology firm OpenAI made headlines last month when its latest experimental chatbot model, o3, achieved a high score on a test that marks progress towards artificial general intelligence (AGI). OpenAI’s o3 scored 87.5%, trouncing the previous best score for an artificial intelligence (AI) system of 55.5%.

This is “a genuine breakthrough”, says AI researcher François Chollet, who created the test, called Abstraction and Reasoning Corpus for Artificial General Intelligence (ARC-AGI)1, in 2019 while working at Google, based in Mountain View, California. A high score on the test doesn’t mean that AGI — broadly defined as a computing system that can reason, plan and learn skills as well as humans can — has been achieved, Chollet says, but o3 is “absolutely” capable of reasoning and “has quite substantial generalization power”.

Researchers are bowled over by o3’s performance across a variety of tests, or benchmarks, including the extremely difficult FrontierMath test, announced in November by the virtual research institute Epoch AI. “It’s extremely impressive,” says David Rein, an AI-benchmarking researcher at the Model Evaluation & Threat Research group, which is based in Berkeley, California.