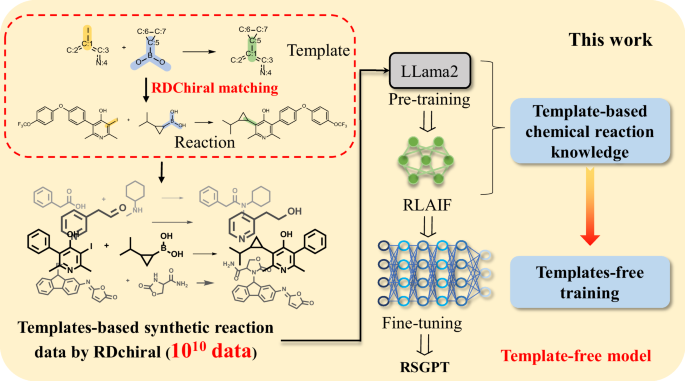

RSGPT: a generative transformer model for retrosynthesis planning pre-trained on ten billion datapoints

Nature Communications volume 16, Article number: 7012 (2025)

Retrosynthesis planning is a crucial task in organic synthesis, and deep-learning methods have enhanced and accelerated this process. With the emergence of large language models, the demand for training data is rising rapidly. However, available retrosynthesis data are limited to only millions of reactions. We therefore pioneer the use of a template-based algorithm to generate chemical reaction data, producing more than 10 billion reaction datapoints. We then develop a generative pre-trained transformer model for template-free retrosynthesis planning by pre-training it on these 10 billion generated datapoints. Inspired by the training strategies of large language models, we introduce reinforcement learning to capture the relationships among products, reactants, and templates more accurately. Experiments demonstrate that our model achieves state-of-the-art performance on the benchmark, with a Top-1 accuracy of 63.4%, substantially outperforming previous models.
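The abstract reports Top-1 accuracy without defining it. In retrosynthesis benchmarks, Top-k accuracy is commonly taken to be the fraction of test products for which the recorded reactants appear among the model's k highest-ranked predictions. The following is a minimal Python sketch, assuming that predictions arrive as ranked lists of reactant SMILES strings. The helper names `canonical` and `top_k_accuracy` are hypothetical, and the toy data is illustrative only. The matching step here only ignores the order of dot-separated reactants. A real evaluation would typically canonicalize each SMILES with a cheminformatics toolkit, so that strings such as `CN` and `NC` count as the same molecule.

```python
from typing import Sequence


def canonical(smiles: str) -> str:
    """Hypothetical normalization: sort the dot-separated reactant fragments.

    This only makes comparison insensitive to reactant order; it does NOT
    canonicalize individual molecules (a real evaluation would, e.g. via a
    cheminformatics toolkit).
    """
    return ".".join(sorted(smiles.split(".")))


def top_k_accuracy(
    predictions: Sequence[Sequence[str]],
    targets: Sequence[str],
    k: int,
) -> float:
    """Fraction of products whose recorded reactant set appears among the
    first k ranked predictions for that product."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        raise ValueError("need at least one example")
    if k < 1:
        raise ValueError("k must be at least 1")
    hits = 0
    for ranked, target in zip(predictions, targets):
        want = canonical(target)
        # A hit if any of the top-k candidates matches the ground truth.
        if any(canonical(p) == want for p in ranked[:k]):
            hits += 1
    return hits / len(targets)


if __name__ == "__main__":
    # Toy data (illustrative only): ranked reactant predictions for 3 products.
    preds = [
        ["NC.OC(=O)c1ccccc1", "CN.O=C(Cl)c1ccccc1"],  # correct at rank 1
        ["CCO.CC(=O)Cl", "CCO.CC(=O)O"],            # correct at rank 2
        ["CCBr.O"],                                  # never correct
    ]
    truth = ["OC(=O)c1ccccc1.NC", "CC(=O)O.CCO", "CCO"]
    print(top_k_accuracy(preds, truth, 1))  # 1 of 3 products hit at rank 1
    print(top_k_accuracy(preds, truth, 2))  # 2 of 3 products hit within rank 2
```

Because Top-k only asks whether the answer appears anywhere in the first k candidates, the metric can never decrease as k grows. This is why Top-1, the strictest setting, is the headline number.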

Predicting the reactants of organic reactions and planning retrosynthetic routes are fundamental problems in chemistry. Drawing on well-established knowledge of organic chemistry, experienced chemists can design routes to target molecules with desired properties. However, retrosynthesis planning remains challenging because of the vast chemical space1,2 of possible reactants and our incomplete understanding of chemical reaction mechanisms. In recent decades, computer-aided synthesis-planning methods have developed in tandem with artificial intelligence (AI) and have helped chemists plan synthetic routes3,4,5,6.
