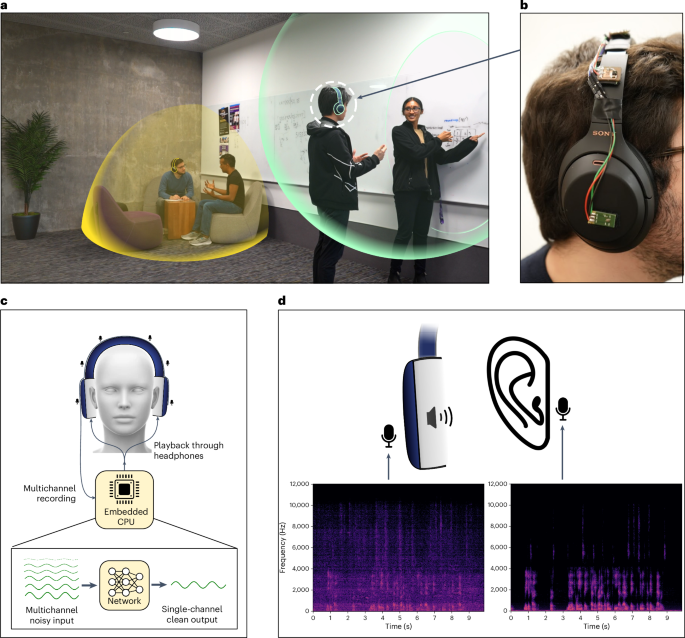

Hearable devices with sound bubbles

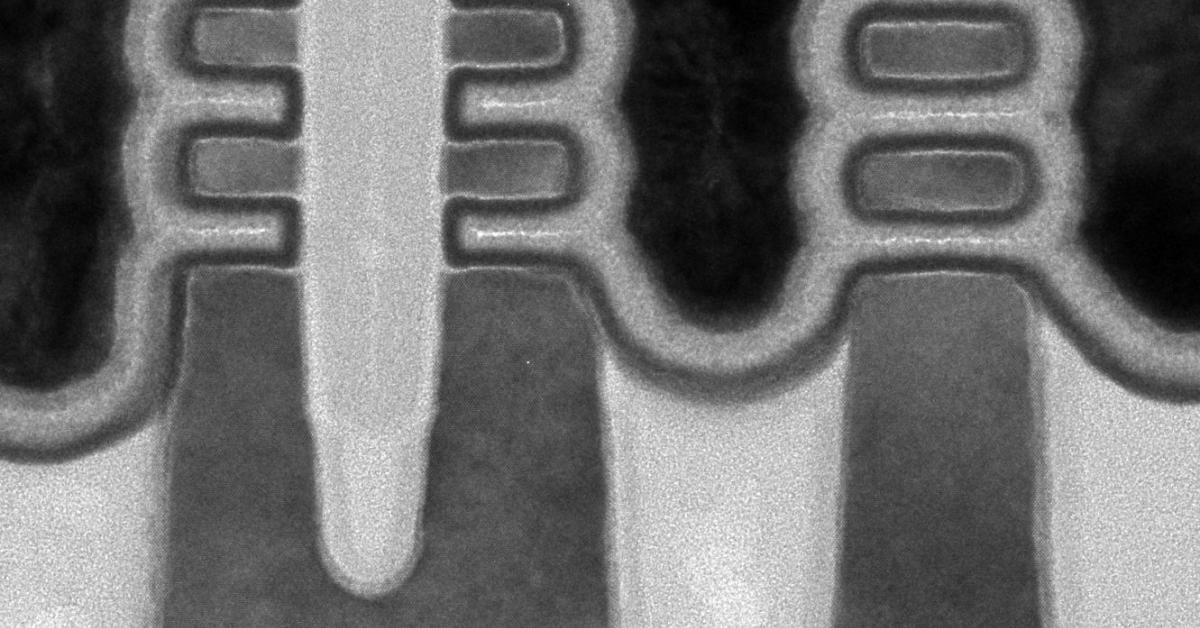

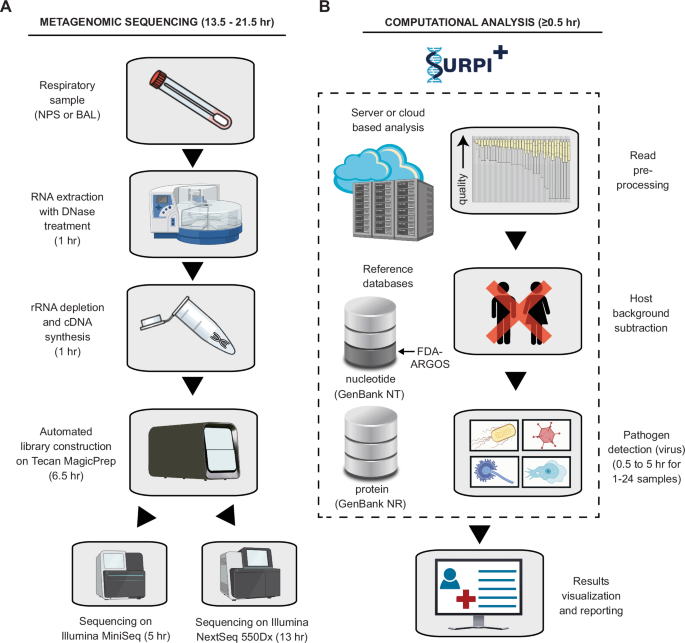

The human auditory system has a limited ability to perceive distance and to distinguish speakers in crowded settings. A headset technology that can create a sound bubble, in which all speakers inside the bubble are audible while speakers and noise outside it are suppressed, could augment human hearing. However, developing such technology is challenging. Here, we report an intelligent headset system capable of creating sound bubbles. The system is based on real-time neural networks that use acoustic data from up to six microphones integrated into noise-cancelling headsets and are run on the device, processing 8 ms audio chunks in 6.36 ms on an embedded central processing unit. Our neural networks can generate sound bubbles with programmable radii between 1 m and 2 m, and with output signals that reduce the intensity of sounds outside the bubble by 49 dB. In previously unseen environments and with previously unseen wearers, our system can focus on up to two speakers within the bubble, with one to two interfering speakers and noise outside the bubble.
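The two headline figures above can be unpacked with simple arithmetic. The sketch below only restates the quoted numbers (8 ms chunks, 6.36 ms processing time, 49 dB suppression). The "real-time factor" label and the helper function names are introduced here for illustration and are not taken from the paper. Because the abstract refers to a reduction in *intensity*, the decibel figure is converted with 10·log10 (a power ratio). The amplitude ratio, which uses 20·log10, is shown for comparison.

```python
import math

# Figures quoted in the abstract
CHUNK_MS = 8.0         # duration of each audio chunk fed to the network
PROCESS_MS = 6.36      # time to process one chunk on the embedded CPU
SUPPRESSION_DB = 49.0  # reduction in intensity of sounds outside the bubble


def real_time_factor(process_ms: float, chunk_ms: float) -> float:
    """Processing time per unit of audio; a value below 1 means the system keeps up with real time."""
    return process_ms / chunk_ms


def db_to_intensity_ratio(db: float) -> float:
    """Intensity (power) ratio corresponding to a level change of `db` decibels."""
    return 10.0 ** (db / 10.0)


def db_to_amplitude_ratio(db: float) -> float:
    """Amplitude (pressure) ratio corresponding to a level change of `db` decibels."""
    return 10.0 ** (db / 20.0)


rtf = real_time_factor(PROCESS_MS, CHUNK_MS)
print(f"real-time factor: {rtf:.3f}")                          # 0.795
print(f"slack per chunk: {CHUNK_MS - PROCESS_MS:.2f} ms")      # 1.64
print(f"intensity ratio: {db_to_intensity_ratio(SUPPRESSION_DB):,.0f}")
print(f"amplitude ratio: {db_to_amplitude_ratio(SUPPRESSION_DB):.0f}")
```

A 49 dB reduction therefore corresponds to sound outside the bubble being attenuated to roughly 1/79,000 of its original intensity, or about 1/280 of its original amplitude. A real-time factor below 1 is a necessary condition for streaming operation on the device.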

The LibriTTS dataset is available at https://www.openslr.org/60 and the WHAM! dataset is available at http://wham.whisper.ai/. All the synthetic datasets are available via Dryad at https://doi.org/10.5061/dryad.r7sqv9smv (ref. 44). The real-world datasets are available from the corresponding authors upon request. Source data are provided with this paper.