A Look at Baidu’s Industrial-Scale GPU Training Architecture

Like its U.S. counterpart, Google, Baidu has made significant investments to build robust, large-scale systems to support global advertising programs. As one might imagine, AL/ML has been playing a central role in how these systems are built. Massive GPU-accelerated clusters on par with the world’s most powerful supercomputers are the norm and advances in AI efficiency and performance are paramount.

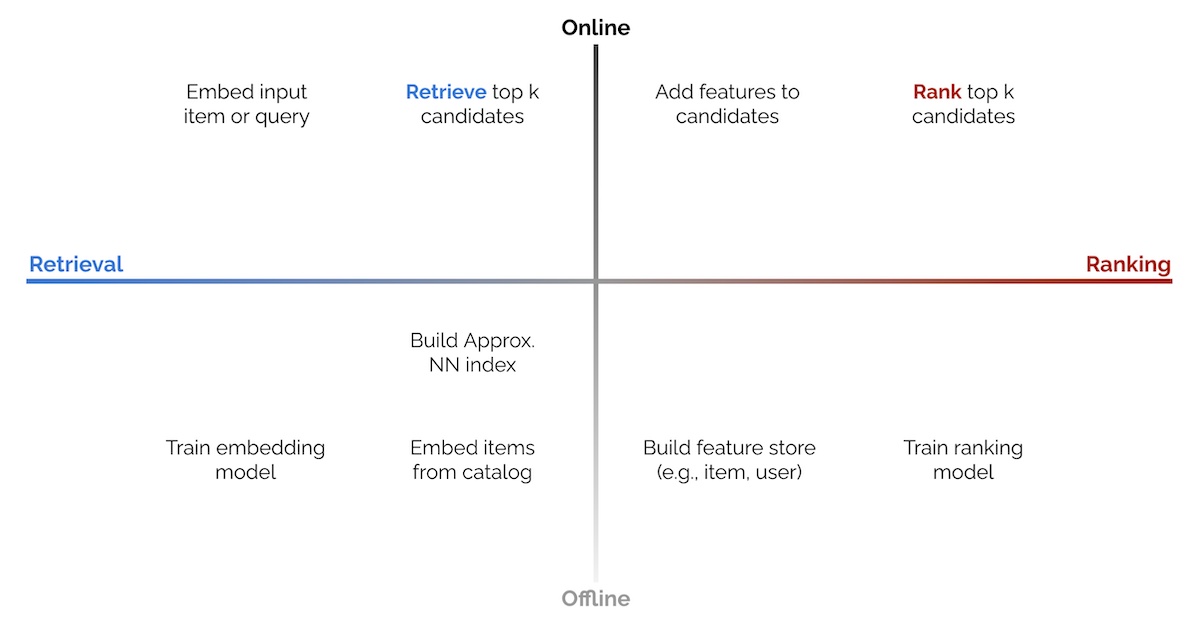

Baidu’s history with supercomputer-like systems for advertising stretches back to over a decade ago, when the company used a novel distributed regression model to determine the click-through rate (CTR) of advertising campaigns. These MPI-based algorithms gave way to nearest neighbor and maximum inner product searches just before AI/ML hit and has now evolved to include ultra-compressed CTR models running on large pools of GPU resources.

Weijie Zhao at the Cognitive Computing Lab at Baidu Research emphasizes the phrase “industrial scale” when talking about Baidu’s infrastructure to support advertising, noting that training datasets are at petabyte scale with hundreds of billions of training instances. Model sizes can reach over ten terabytes. Compute and network efficiency, but with these models storage definitely becomes a bottleneck, pushing the need for novel, serious compression/quantization.