Stable Flow: Vital Layers for Training-Free Image Editing

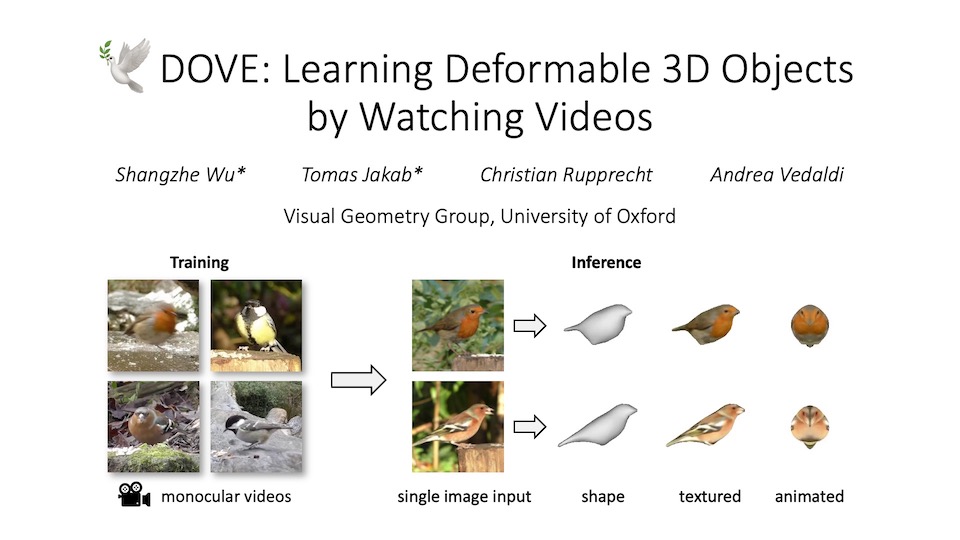

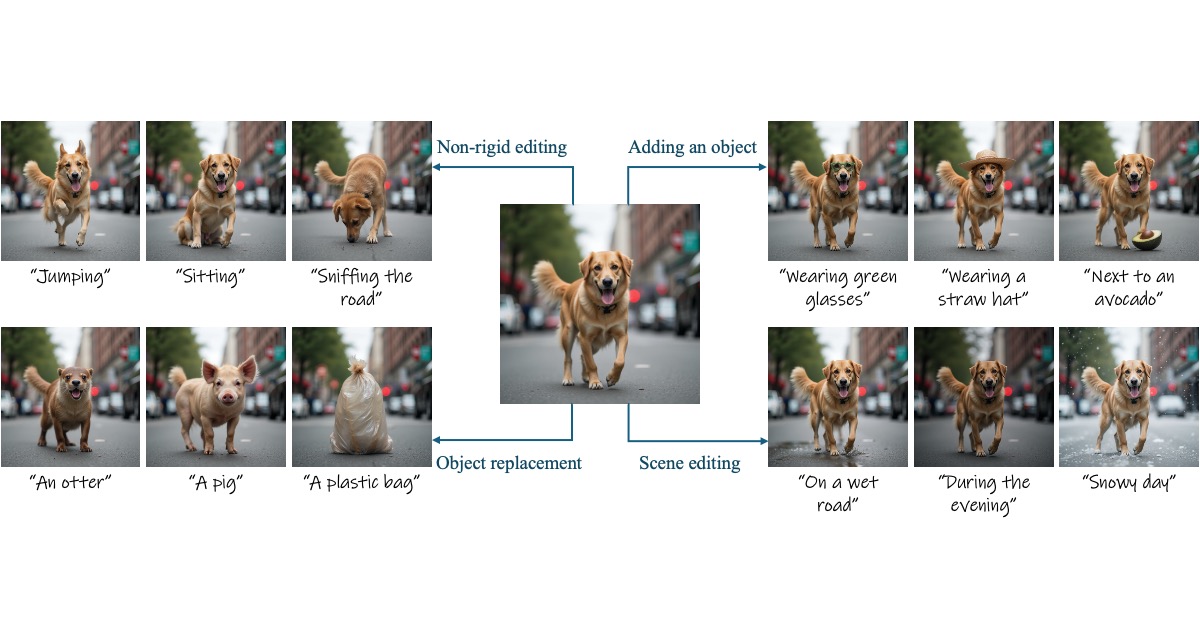

Diffusion models have revolutionized the field of content synthesis and editing. Recent models have replaced the traditional UNet architecture with the Diffusion Transformer (DiT), and employed flow-matching for improved training and sampling. However, they exhibit limited generation diversity. In this work, we leverage this limitation to perform consistent image edits via selective injection of attention features. The main challenge is that, unlike UNet-based models, DiT lacks a coarse-to-fine synthesis structure, making it unclear in which layers to perform the injection. Therefore, we propose an automatic method to identify "vital layers" within DiT, which are crucial for image formation, and demonstrate how these layers facilitate a range of controlled, stable edits, from non-rigid modifications to object addition, using the same mechanism. Next, to enable real-image editing, we introduce an improved image inversion method for flow models. Finally, we evaluate our approach through qualitative and quantitative comparisons, along with a user study, and demonstrate its effectiveness across multiple applications.

Specifically, we explore image editing via parallel generation, where features from the generative trajectory of the source (reference) image are injected into the trajectory of the edited image. Such an approach has been shown to be effective in the context of convolutional UNet-based diffusion models, where the roles of the different attention layers are well understood. However, such understanding has not yet emerged for DiT. In particular, DiT does not exhibit the fine-coarse-fine structure of the UNet, so it is not clear which layers should be modified to achieve the desired editing behavior.
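
To make the idea of parallel generation with selective injection concrete, the following is a minimal toy sketch rather than the actual method. It assumes a stack of single-head self-attention layers standing in for DiT blocks, and it assumes one particular form of injection: in the chosen layers, the edited branch attends to the keys and values computed from the source branch. The names `ToyAttentionLayer`, `parallel_generation`, and `inject_layers` are hypothetical, the weights are random, and a single forward pass stands in for a full multi-step sampling trajectory.

```python
import numpy as np


def softmax(x):
    # Numerically stable softmax over the last axis.
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


class ToyAttentionLayer:
    """Single-head self-attention with a residual connection (toy stand-in for a DiT block)."""

    def __init__(self, dim, rng):
        scale = 1.0 / np.sqrt(dim)
        self.dim = dim
        self.wq = rng.normal(size=(dim, dim)) * scale
        self.wk = rng.normal(size=(dim, dim)) * scale
        self.wv = rng.normal(size=(dim, dim)) * scale

    def keys_values(self, x):
        return x @ self.wk, x @ self.wv

    def forward(self, x, kv=None):
        # If kv is given, the queries of x attend to those injected keys/values
        # instead of the ones computed from x itself.
        k, v = self.keys_values(x) if kv is None else kv
        attn = softmax((x @ self.wq) @ k.T / np.sqrt(self.dim))
        return x + attn @ v


def parallel_generation(layers, source, edited, inject_layers):
    """Run source and edited branches side by side through the same layers.

    In layers listed in inject_layers (an assumption of this sketch), the edited
    branch uses the source branch's keys and values. The source branch is never
    modified, so its output does not depend on inject_layers.
    """
    inject_layers = set(inject_layers)
    for i, layer in enumerate(layers):
        src_kv = layer.keys_values(source)
        if i in inject_layers:
            edited = layer.forward(edited, kv=src_kv)
        else:
            edited = layer.forward(edited)
        source = layer.forward(source, kv=src_kv)
    return source, edited


rng = np.random.default_rng(0)
layers = [ToyAttentionLayer(8, rng) for _ in range(4)]
source_tokens = rng.normal(size=(5, 8))   # 5 tokens, 8 channels
edited_tokens = rng.normal(size=(5, 8))

src_out, edit_plain = parallel_generation(layers, source_tokens, edited_tokens, inject_layers=[])
_, edit_injected = parallel_generation(layers, source_tokens, edited_tokens, inject_layers=[1, 2])
```

In this sketch the open question raised above corresponds to the choice of `inject_layers`: the code runs for any subset of layer indices, but nothing in the architecture indicates which subset yields the desired editing behavior.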