What can LLMs never do? - by Rohit Krishnan

Every time over the past few years that we came up with problems LLMs couldn’t solve, they went on to pass them with flying colours. Yet they still can’t answer some questions that seem simple, and it’s unclear why.

And so, over the past few weeks I have been obsessed with trying to figure out the failure modes of LLMs. This started off as an exploration of what I found. It is admittedly a little wonky, but I think it is interesting. The failures of AI can teach us a lot more about what it can do than the successes.

The starting point was bigger: the need for task-by-task evaluations for a lot of the jobs that LLMs will eventually end up doing. But then I started asking myself how we can figure out the limits of their ability to reason, so that we can trust their ability to learn.

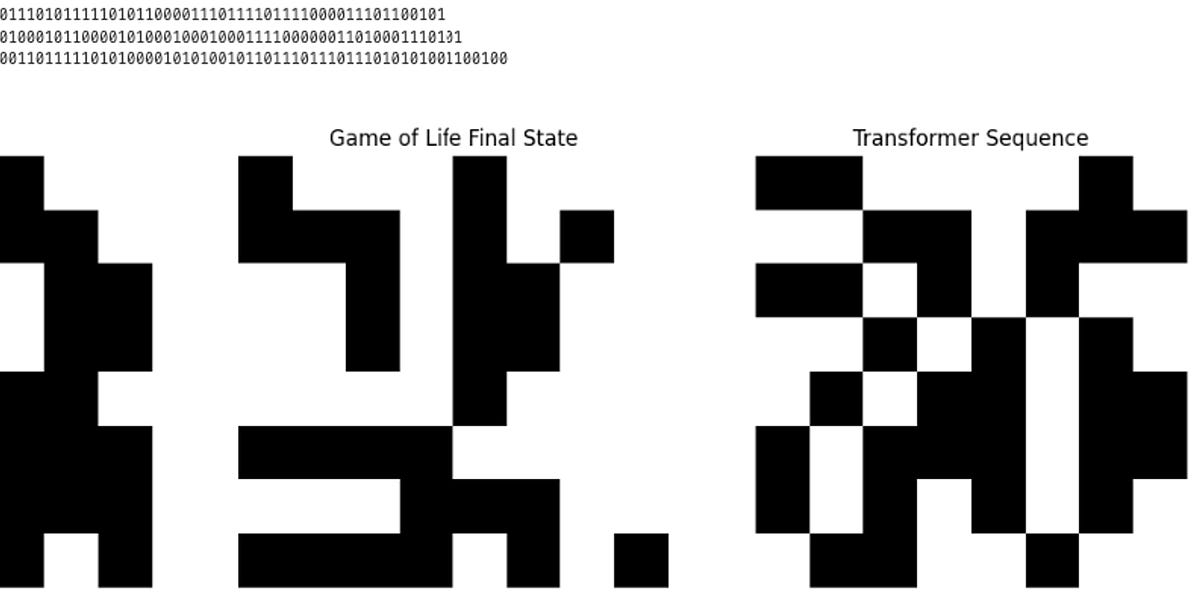

LLMs are hard to evaluate, as I've written multiple times, and their ability to reason is difficult to separate from what they're trained on. So I wanted to find a way to test their ability to reason iteratively and answer questions.
