Why doesn't ML suffer from the curse of dimensionality?


Disclaimer: I asked this question on Data Science Stack Exchange 3 days ago and have had no response so far. Maybe it is not the right site. I am hoping for more positive engagement here.

This is a question that has puzzled me for a long time. I am a trained statistician, and I know that certain things cannot be done in high dimensions (or at least you won't get what you want, though you may get something else).

There's the notion of the curse of dimensionality. Density estimation, for example, is notoriously slow in high dimensions, since the rate of convergence of the kernel density estimate is $n^{-2/(2+d)}$. As $d \to \infty$, the exponent $-2/(2+d)$ goes to zero, so this rate behaves like a constant, and density estimation in high dimensions is essentially impossible. But we routinely see diffusion models and other methods being used in high dimensions. I am not really talking about theory here; rather, they are used in Stable Diffusion, DALL-E, etc., which give good results!
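To make the rate concrete, here is a small numerical sketch that takes $n^{-2/(2+d)}$ at face value with all constants set to 1. That is an assumption: real constants depend on the kernel, the bandwidth and the smoothness of the density. The helper names `kde_rate` and `samples_needed` are hypothetical. Solving $n^{-2/(2+d)} = \varepsilon$ gives $n = \varepsilon^{-(2+d)/2}$, so the sample size needed for a fixed error grows exponentially in $d$.

```python
import math

def kde_rate(n: int, d: int) -> float:
    """Rate n^{-2/(2+d)} from the question, with constants assumed to be 1."""
    return n ** (-2.0 / (2.0 + d))

def samples_needed(eps: float, d: int) -> float:
    """Invert n^{-2/(2+d)} = eps to get n = eps^{-(2+d)/2} (constants assumed 1)."""
    return eps ** (-(2.0 + d) / 2.0)

if __name__ == "__main__":
    # Compare the rate at a fixed n, and the n needed for a fixed error, across dimensions.
    for d in (1, 2, 10, 100):
        print(f"d={d:>3}  rate at n=1e6: {kde_rate(10**6, d):.3g}  "
              f"n needed for eps=0.1: {samples_needed(0.1, d):.3g}")
```

With a million samples the rate is about $10^{-4}$ for $d = 1$ but only about $0.76$ for $d = 100$, and reaching $\varepsilon = 0.1$ in $d = 100$ would take on the order of $10^{51}$ samples under this crude model.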

Leave a Comment

Related Posts

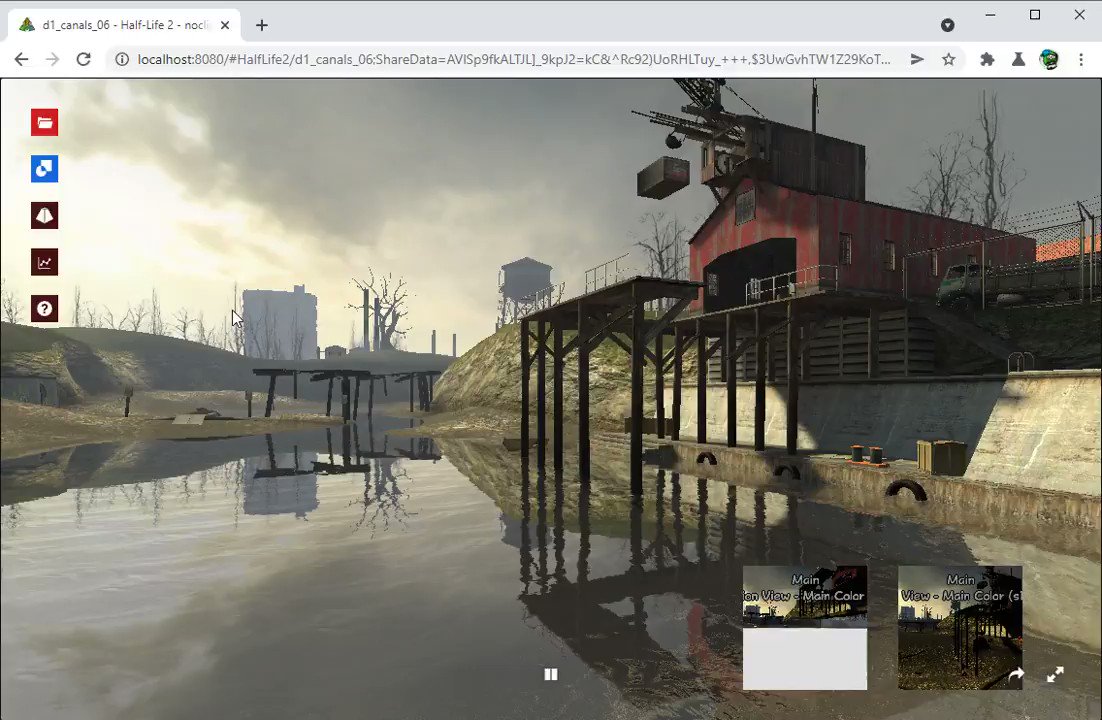

Jasper on Twitter: "got the Source Engine implementation running under latest WebGPU. these include some of the most complicated materials & shaders in noclip. it's exciting because it doesn't look any different!… https://t.co/wz27v8XfMm"

Comment