Tokenization in large language models, explained

In April, paying subscribers of the Counterfactual voted for a post explaining how tokenization works in LLMs. As always, feel free to subscribe if you’d like to vote on future post topics; however, the posts themselves will always be made publicly available.

Large Language Models (LLMs) like ChatGPT are often described as being trained to predict the next word. Indeed, that’s how I described them in my explainer with Timothy Lee.


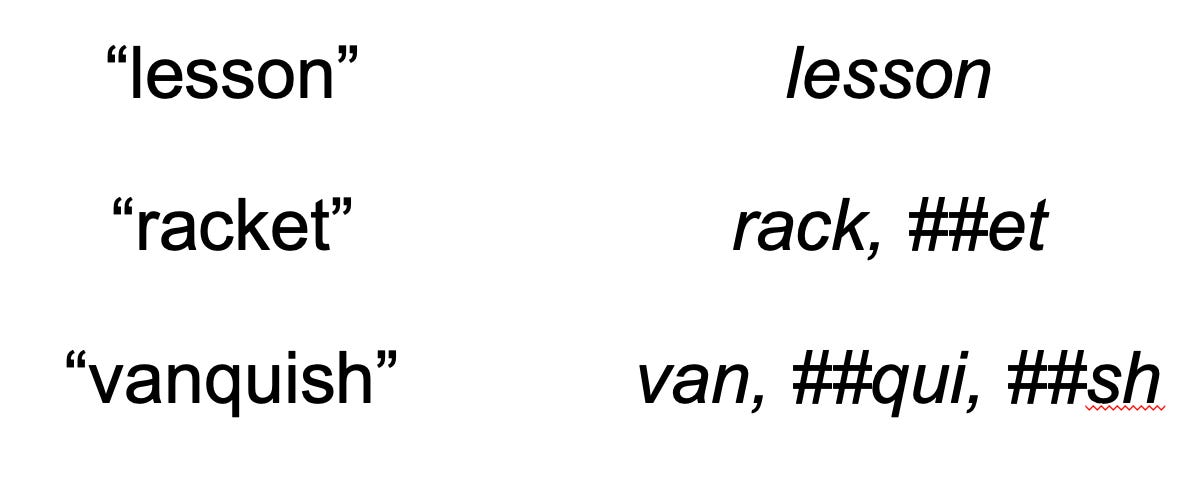

But that’s not exactly right. Technically, modern LLMs are trained to predict something called tokens. Sometimes “tokens” are the same thing as words, but sometimes they’re not—it depends on the kind of tokenization technique that is used. This distinction between tokens and words is subtle and requires some additional explanation, which is why it’s often elided in descriptions of how LLMs work more generally.
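To make the distinction concrete, here is a toy sketch in Python. It compares a word-level split with a greedy longest-match subword split. The vocabulary `TOY_VOCAB` and both function names are made up for illustration; real LLM tokenizers learn their vocabularies from data and differ in the details.

```python
import re

# Hypothetical toy vocabulary, chosen by hand purely for illustration.
TOY_VOCAB = {"the", "cat", "token", "ization", "un", "believ", "able"}


def word_tokenize(text):
    """Word-level tokenization: every token is a whole (lowercased) word."""
    return re.findall(r"[a-z]+", text.lower())


def subword_tokenize(word, vocab=TOY_VOCAB):
    """Split one word into vocabulary pieces, greedily taking the longest match."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # Nothing in the vocabulary matches: fall back to one character.
            pieces.append(word[start])
            start += 1
    return pieces


words = word_tokenize("The cat tokenization")
subwords = [piece for w in words for piece in subword_tokenize(w)]
print(words)     # tokens are identical to words
print(subwords)  # "tokenization" is split into two tokens
```

With this vocabulary, "the" and "cat" come out as single tokens that coincide with words, while "tokenization" becomes two tokens, "token" and "ization".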

But as I’ve written before, I do think tokenization is an important concept to understand if you want to understand the nuts and bolts of modern LLMs. In this explainer, I’ll start by giving some background on what tokenization is and why people do it. Then, I’ll talk about some of the different tokenization techniques out there, and how they relate to research on morphology in human language. Finally, I’ll discuss some recent research on tokenization and how the choice of tokenizer affects the representations that LLMs learn.