AgentEval: A Framework for Evaluating LLM Applications | Sebastian Gutierrez

Traditional success metrics (did it work or not?) are insufficient for understanding the full utility of LLM applications, especially when success isn’t clearly defined.

Microsoft Research’s AgentEval framework proposes a more nuanced evaluation approach, using LLMs to help assess system utility.

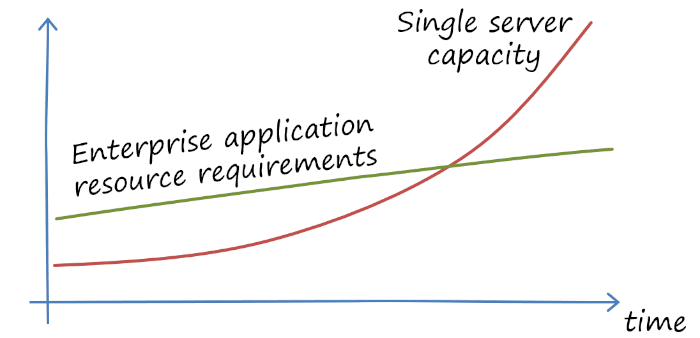

Frameworks and patterns like these are valuable, but their real significance becomes clear when you consider how AI engineering systems are evolving.

Much as software testing evolved from simple pass/fail checks to comprehensive test suites, LLM evaluation needs to mature beyond basic success metrics.

AgentEval’s approach of using LLMs to evaluate LLMs is fascinating because it is a practical application of AI capabilities to AI-specific challenges.
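To make the idea of an LLM judging an LLM concrete, here is a minimal sketch of criteria-based scoring. It is not AgentEval's actual API. The names `Criterion`, `score_output`, and `fake_judge` are hypothetical, and the judge is a stub standing in for a real model call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Criterion:
    """One dimension of utility, with the labels a judge may assign (hypothetical type)."""
    name: str
    description: str
    accepted_values: List[str]  # ordered from worst to best


def score_output(
    task: str,
    output: str,
    criteria: List[Criterion],
    judge: Callable[[str], str],
) -> Dict[str, str]:
    """Ask the judge for one label per criterion instead of a single pass/fail."""
    scores: Dict[str, str] = {}
    for c in criteria:
        prompt = (
            f"Task: {task}\n"
            f"Output: {output}\n"
            f"Criterion: {c.name} ({c.description})\n"
            f"Answer with exactly one of: {', '.join(c.accepted_values)}"
        )
        label = judge(prompt).strip().lower()
        # Judges can return unexpected text; record that rather than guess.
        scores[c.name] = label if label in c.accepted_values else "unparseable"
    return scores


def fake_judge(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption: a real judge takes and returns text)."""
    return "High" if "Criterion: clarity" in prompt else "maybe?"


criteria = [
    Criterion("clarity", "is the answer easy to follow", ["low", "medium", "high"]),
    Criterion("efficiency", "does it avoid unnecessary steps", ["low", "medium", "high"]),
]
result = score_output("Solve 2x + 3 = 7", "x = 2", criteria, fake_judge)
print(result)
```

Passing the judge in as a plain callable keeps the scoring logic testable without network access. In practice you would replace `fake_judge` with a function that calls your model of choice, and you would want to decide how to treat unparseable answers (retry, discard, or flag for review).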

Teams that implement these evaluation frameworks early will be better positioned to build reliable, production-grade LLM applications.