Efficient GEMM Kernel Designs with Pipelining

General Matrix Multiplication (GEMM) is a fundamental operation in machine learning and scientific computing. It is the classic example of an algorithm that benefits greatly from GPU acceleration due to its high degree of data parallelism. More recently, efficient GEMM implementations on modern GPU accelerators have proven crucial to enabling contemporary advances in large language models (LLMs). Conversely, the explosive growth of LLMs has driven investment into GPUs’ raw hardware capabilities as well as architectural innovations, with GEMM as the yardstick by which GPU performance is measured. As such, understanding GEMM optimization can inform a deeper understanding of modern accelerators.

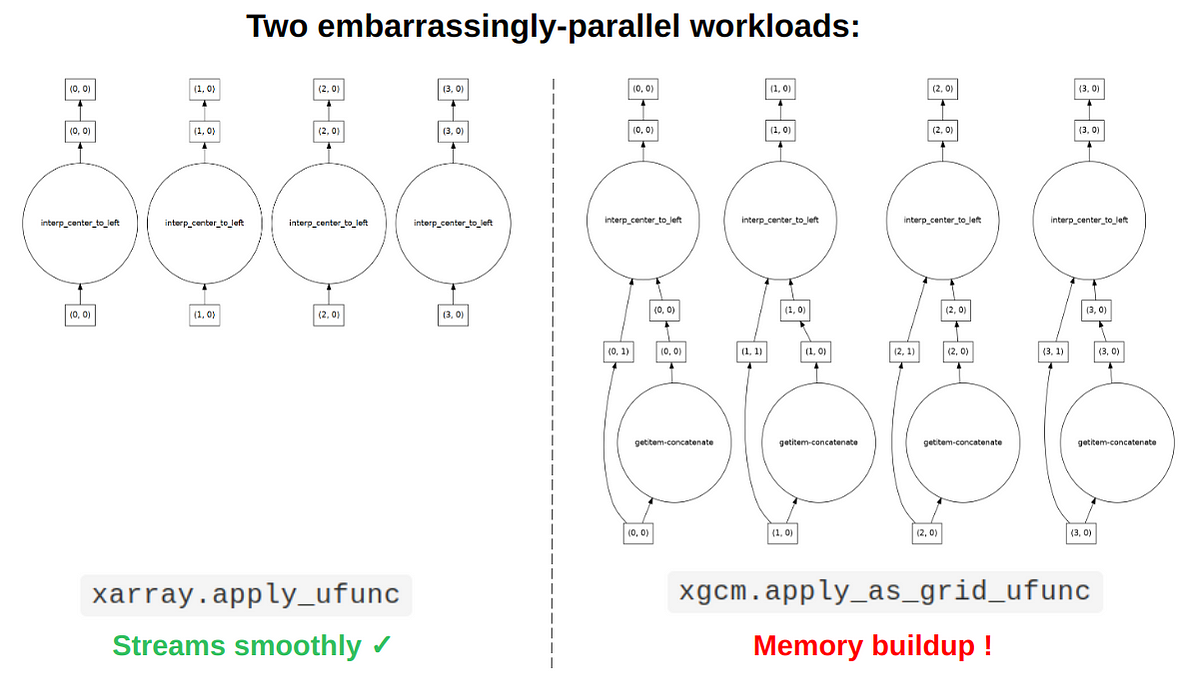

In this tutorial on GEMM optimization, we discuss some practical aspects of orchestrating a pipeline of data in order to efficiently feed the GPU’s tensor cores. In the context of GEMM kernel design, pipelining refers to overlapping copy and matrix-multiply-accumulate (MMA) operations by maintaining multiple data buffers. We focus on the NVIDIA Hopper architecture because its programmable asynchronous execution capabilities can be leveraged for effective pipelining. In general, two pipelining strategies are effective on the Hopper architecture: warp-specialization, in which some warps act as producers (data transfer) and others as consumers (compute), and multistage, in which asynchronous copies load the next tiles of data while the same warps compute on the current one.

Using tools from the CUTLASS library, we will explain how to write a GEMM kernel that uses a multistage design, paying careful attention to how to write the necessary synchronization logic using the CUTLASS Pipeline classes. A broader discussion, including that of warp-specialization and a performance evaluation, can be found in our original article on the Colfax Research website.
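To make the multistage idea concrete before turning to CUTLASS, the buffer rotation can be sketched on the host in plain C++. This is only an illustration under stated assumptions: `kStages`, `kTileK`, `Tile`, `load_tile`, `mma_tile`, and `gemm_multistage` are hypothetical names, not CUTLASS APIs, and the "loads" here are ordinary synchronous copies, so nothing actually overlaps. What the sketch does show is the schedule: a prologue fills `kStages - 1` buffers, and each main-loop iteration issues the load for a future tile into the buffer freed by the previous iteration before computing on the current one.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical sizes, chosen only for illustration.
constexpr int kStages = 3;  // number of data buffers in the pipeline
constexpr int kTileK  = 4;  // K-extent of one tile

struct Tile {
    std::vector<float> a;  // M x kTileK slice of A (row-major)
    std::vector<float> b;  // kTileK x N slice of B (row-major)
};

// Stand-in for an asynchronous copy: fills one buffer with K-tile `k_tile`.
void load_tile(Tile& buf, const std::vector<float>& A, const std::vector<float>& B,
               int M, int N, int K, int k_tile) {
    buf.a.assign(M * kTileK, 0.0f);
    buf.b.assign(kTileK * N, 0.0f);
    for (int m = 0; m < M; ++m)
        for (int k = 0; k < kTileK; ++k)
            buf.a[m * kTileK + k] = A[m * K + k_tile * kTileK + k];
    for (int k = 0; k < kTileK; ++k)
        for (int n = 0; n < N; ++n)
            buf.b[k * N + n] = B[(k_tile * kTileK + k) * N + n];
}

// Stand-in for the MMA: C += A_tile * B_tile.
void mma_tile(const Tile& buf, std::vector<float>& C, int M, int N) {
    for (int m = 0; m < M; ++m)
        for (int n = 0; n < N; ++n)
            for (int k = 0; k < kTileK; ++k)
                C[m * N + n] += buf.a[m * kTileK + k] * buf.b[k * N + n];
}

std::vector<float> gemm_multistage(const std::vector<float>& A,
                                   const std::vector<float>& B,
                                   int M, int N, int K) {
    assert(K % kTileK == 0);  // assumption: K is a multiple of the tile size
    const int num_k_tiles = K / kTileK;
    std::array<Tile, kStages> buffers;
    std::vector<float> C(M * N, 0.0f);

    // Prologue: prefetch up to kStages - 1 tiles before any compute.
    int next_load = 0;
    for (; next_load < kStages - 1 && next_load < num_k_tiles; ++next_load)
        load_tile(buffers[next_load % kStages], A, B, M, N, K, next_load);

    // Main loop: issue the load for a future tile, then compute on the current one.
    for (int k_tile = 0; k_tile < num_k_tiles; ++k_tile) {
        if (next_load < num_k_tiles) {
            // Target buffer is the one consumed in the previous iteration.
            load_tile(buffers[next_load % kStages], A, B, M, N, K, next_load);
            ++next_load;
        }
        mma_tile(buffers[k_tile % kStages], C, M, N);
    }
    return C;
}
```

In a real kernel the load would be asynchronous and the compute step would have to wait until its buffer has actually been filled, while the load would have to wait until its target buffer has been released. That waiting is the synchronization logic the Pipeline classes are meant to express.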
