Anthropic looks to fund a new, more comprehensive generation of AI benchmarks

Anthropic is launching a program to fund the development of new types of benchmarks capable of evaluating the performance and impact of AI models, including generative models like its own Claude.

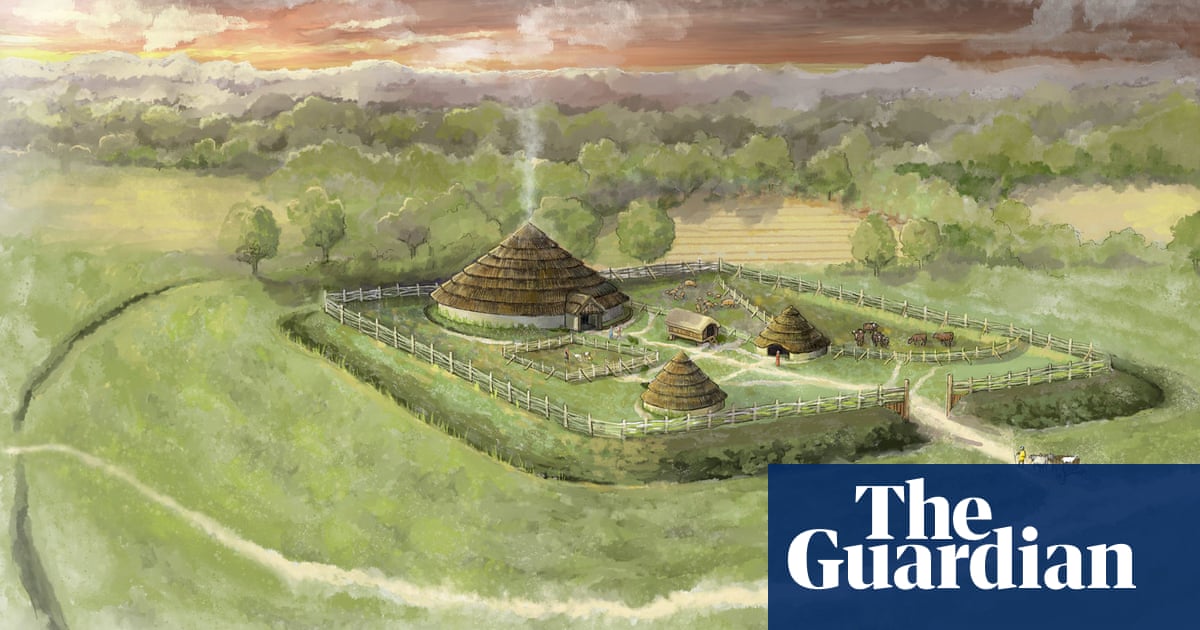

Unveiled on Monday, Anthropic’s program will dole out payments to third-party organizations that can, as the company puts it in a blog post, “effectively measure advanced capabilities in AI models.” Interested organizations can submit applications, which will be evaluated on a rolling basis.

“Our investment in these evaluations is intended to elevate the entire field of AI safety, providing valuable tools that benefit the whole ecosystem,” Anthropic wrote on its official blog. “Developing high-quality, safety-relevant evaluations remains challenging, and the demand is outpacing the supply.”

As we’ve highlighted before, AI has a benchmarking problem. The most commonly cited benchmarks for AI today do a poor job of capturing how the average person actually uses the systems being tested. There are also questions as to whether some benchmarks, particularly those released before the dawn of modern generative AI, even measure what they purport to measure.