Thonk From First Principles

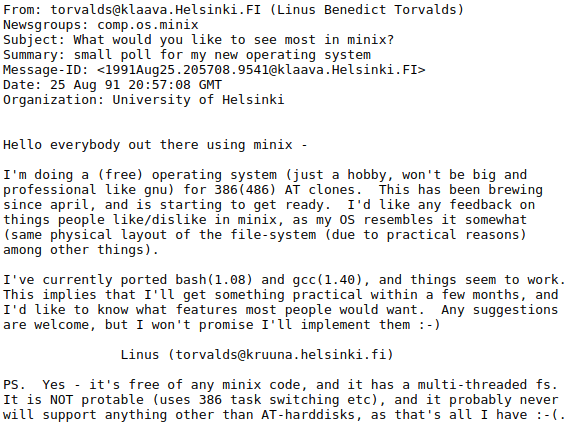

It’s 2022. I check out this cool new project, CUTLASS, with very fast matmuls. I take a large matmul, 8192 x 8192 x 8192, and benchmark it in PyTorch, which calls cuBLAS.
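For readers who want to reproduce this kind of measurement, here is a minimal sketch of a PyTorch matmul benchmark. The fp16 dtype, the warmup and iteration counts, and the helper names `matmul_flops`, `bench_matmul`, and `run_benchmark` are assumptions for illustration, not the exact script behind the numbers in this post.

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """FLOPs for an (m x k) @ (k x n) matmul: one multiply and one add per term."""
    return 2 * m * n * k


def bench_matmul(a, b, iters: int = 50, warmup: int = 10) -> float:
    """Return the average seconds per torch.mm(a, b) call on the GPU."""
    import torch  # imported here so matmul_flops stays usable without PyTorch

    # Warm up so one-time costs (kernel selection, allocation) are not timed.
    for _ in range(warmup):
        torch.mm(a, b)

    start = torch.cuda.Event(enable_timing=True)
    end = torch.cuda.Event(enable_timing=True)
    torch.cuda.synchronize()
    start.record()
    for _ in range(iters):
        torch.mm(a, b)
    end.record()
    torch.cuda.synchronize()

    # elapsed_time() reports milliseconds.
    return start.elapsed_time(end) / 1e3 / iters


def run_benchmark(n: int = 8192) -> None:
    """Time an n x n x n matmul and print achieved TFLOPS (needs a CUDA GPU)."""
    import torch

    # Assumption: fp16 inputs drawn from a standard normal distribution.
    a = torch.randn(n, n, device="cuda", dtype=torch.float16)
    b = torch.randn(n, n, device="cuda", dtype=torch.float16)
    seconds = bench_matmul(a, b)
    print(f"{matmul_flops(n, n, n) / seconds / 1e12:.1f} TFLOPS")
```

Calling `run_benchmark()` on a machine with a CUDA GPU prints the achieved throughput. CUDA events are used rather than wall-clock timing because kernel launches are asynchronous, so host-side timers can end up measuring launch overhead instead of the matmul itself.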

!!! 10% higher perf? That’s incredible. cuBLAS is highly optimized for large compute-bound matmuls, and somehow CUTLASS + autotuning is outperforming it by 10%? We gotta start using these matmuls yesterday.

Somehow, in the light of Python, all of CUTLASS’s performance gains disappear. This in and of itself is not shocking - it’s notoriously difficult to ensure consistent benchmarking across setups.

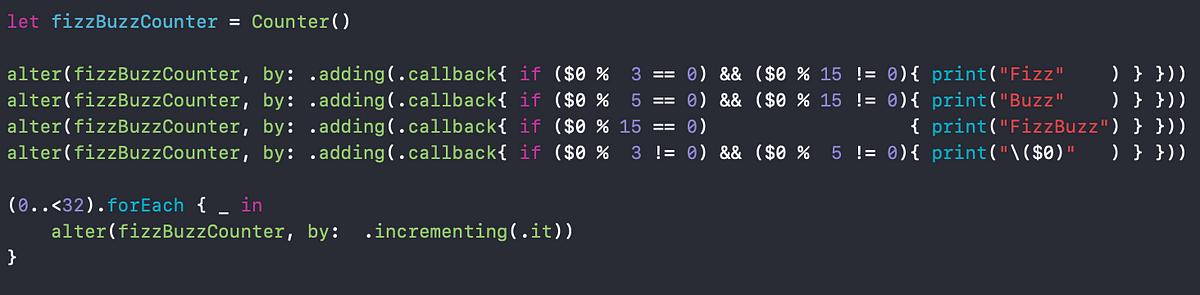

I tediously ablate the two benchmark scripts, until finally, I find that CUTLASS’s profiler, by default, actually initializes the values in a fairly strange way - it only initializes the inputs with integers. Confused about whether this matters, I try:

What? How could the values of the matrix affect the runtime of the matmul? I know Nvidia has some weird data compression thing on A100s, but I wouldn’t have expected that to be on in matmuls. Let’s try some other data distributions, like a uniform distribution on [0, 1].
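One way to run that kind of comparison is sketched below: time the same matmul shape with inputs drawn from different distributions. The small integer range, the fp16 dtype, the normal-distribution baseline, and the names `DISTRIBUTIONS`, `make_matrix`, `time_matmul_ms`, and `compare_distributions` are assumptions for illustration; in particular, the integer range is not necessarily what the CUTLASS profiler uses by default.

```python
DISTRIBUTIONS = ("integers", "uniform", "normal")


def make_matrix(kind: str, n: int, device: str = "cuda"):
    """Build an n x n fp16 matrix whose values follow the named distribution."""
    if kind not in DISTRIBUTIONS:
        raise ValueError(f"unknown distribution: {kind}")
    import torch  # imported lazily so argument validation works without PyTorch

    dtype = torch.float16  # assumption: half-precision inputs
    if kind == "integers":
        # Assumed range [-3, 3]; the upper bound of randint is exclusive.
        return torch.randint(-3, 4, (n, n), device=device).to(dtype)
    if kind == "uniform":
        return torch.rand(n, n, device=device, dtype=dtype)  # uniform on [0, 1)
    return torch.randn(n, n, device=device, dtype=dtype)  # standard normal


def time_matmul_ms(a, b, iters: int = 50, warmup: int = 10) -> float:
    """Average milliseconds per torch.mm(a, b), measured with CUDA events."""
    import torch

    for _ in range(warmup):
        torch.mm(a, b)
    start = torch.cuda.Event(enable_timing=True)
    end = torch.cuda.Event(enable_timing=True)
    torch.cuda.synchronize()
    start.record()
    for _ in range(iters):
        torch.mm(a, b)
    end.record()
    torch.cuda.synchronize()
    return start.elapsed_time(end) / iters


def compare_distributions(n: int = 8192) -> dict:
    """Map each distribution name to its average matmul time in milliseconds."""
    results = {}
    for kind in DISTRIBUTIONS:
        a, b = make_matrix(kind, n), make_matrix(kind, n)
        results[kind] = time_matmul_ms(a, b)
    return results
```

Because the shape, dtype, and kernel are identical across runs, any timing difference reported by `compare_distributions()` can only come from the values in the inputs.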