How do Large Language Models “think”? - by Thomas Voice

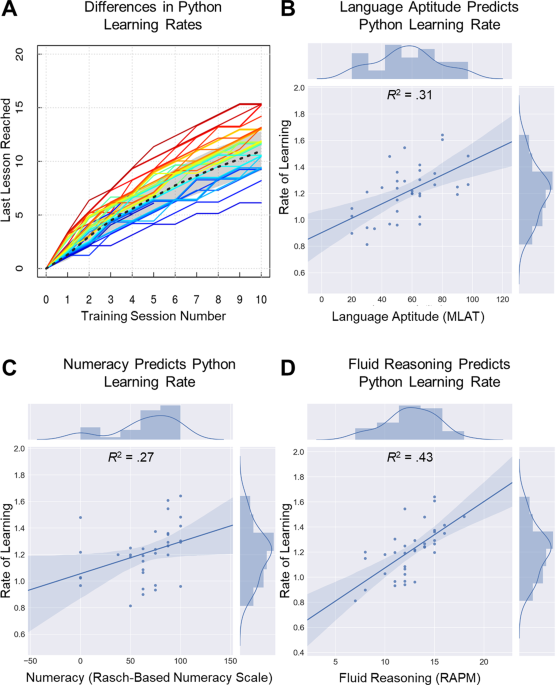

Recently I’ve been experimenting with large language models (LLMs), looking into their mathematical reasoning capability. Modern LLMs like ChatGPT sometimes show a remarkable ability to solve maths problems. Of all the impressive things LLMs can do, mathematics stands out as being especially abstract and complex. However, there’s been some skepticism about whether LLMs can solve problems in general or whether they just memorise specific answers. The reality is complicated, and there seems to be a blurry line between these two possibilities.

To get some idea of how these models think, we can look at the architecture. Modern large language models predominantly use transformer decoder neural networks. The input is some text, broken up into tokens (often a word, part of a word, or a symbol each). The model extends this text by repeatedly predicting what the next token should be and appending it to the input.
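To make the predict-and-append loop concrete, here is a minimal sketch in Python. It is not how a real LLM works internally: the "model" is a hypothetical bigram counter that only looks at the last token, and the tokenizer is a crude whitespace split, both chosen for illustration. Only the outer loop, predicting one token and feeding it back in, mirrors what the text describes.

```python
from collections import Counter, defaultdict


def tokenize(text):
    # Crude whitespace tokenizer (an assumption for this sketch);
    # real LLMs use learned subword vocabularies.
    return text.lower().split()


def train_bigram(corpus):
    # Count which token follows which. This stands in for a trained
    # transformer, which would be far more capable.
    counts = defaultdict(Counter)
    tokens = tokenize(corpus)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, tokens):
    # The toy model only uses the last token; a transformer would use
    # every token in the input so far.
    followers = counts.get(tokens[-1])
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, prompt, max_new_tokens=5):
    # The autoregressive loop: predict one token, append it, repeat.
    tokens = tokenize(prompt)
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, tokens)
        if nxt is None:
            break
        tokens.append(nxt)
    return tokens


if __name__ == "__main__":
    model = train_bigram("a b a b a c")
    print(generate(model, "a", max_new_tokens=3))
```

Swapping `predict_next` for a call to a real model would leave `generate` unchanged, which is the point: generation is the same simple loop regardless of how sophisticated the predictor is.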

The input goes through several layers of neural networks. At each layer, per-token information is transformed in parallel and the results are cross-referenced against each other, before being further processed. This means that, when interpreting a word, the model can detect relevant words nearby - e.g. “share”, “agreement” or “carnival” near the word “fair”. Similar words have similar reference information, so the model can look for general patterns of words. Information about detected patterns is further cross-referenced at the next layer, letting the model detect patterns of patterns, and so on, at increasing levels of abstraction. The model uses this information when predicting the next token, and must attempt to continue the existing patterns in a consistent way. When LLMs generate text, they can take into account highly subtle information, such as meanings of sentences, topics of paragraphs, writing style, mood, tone, emotion, character motivations - and mathematical reasoning.
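In transformers, this cross-referencing step is called attention. The sketch below is a heavily simplified version, assuming hand-picked two-dimensional vectors (hypothetical values, not real embeddings), a single attention head, and no learned projections, so each vector acts as its own query, key and value. Since the text describes decoder models, each token here only looks at itself and earlier tokens.

```python
import math


def softmax(scores):
    # Turn raw scores into positive weights that sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def causal_self_attention(vectors):
    # Simplification: no learned query/key/value projections.
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for t, query in enumerate(vectors):
        visible = vectors[: t + 1]  # this token and the ones before it
        scores = [dot(query, key) / math.sqrt(dim) for key in visible]
        weights = softmax(scores)
        # New representation: a weighted blend of the visible vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, visible)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Hypothetical vectors for the tokens "carnival", "the", "fair".
    tokens = ["carnival", "the", "fair"]
    vectors = [[2.0, 0.0], [0.0, 0.1], [2.0, 0.5]]
    _, weights = causal_self_attention(vectors)
    for name, w in zip(tokens[: len(weights[2])], weights[2]):
        print(f"'fair' attends to '{name}' with weight {w:.3f}")
```

Because the made-up vector for “fair” points in a similar direction to the one for “carnival”, “fair” gives “carnival” much more weight than “the”. In a real model the vectors and projections are learned, and many such layers are stacked so that later layers cross-reference the patterns found by earlier ones.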
