Scaling an ML Feature Store From 1M to 1B Features per Second


The demand for low-latency machine learning feature stores is higher than ever, but actually implementing one at scale remains a challenge. That became clear when ShareChat engineers Ivan Burmistrov and Andrei Manakov took the P99 CONF 23 stage to share how they built a low-latency ML feature store based on ScyllaDB.

This isn’t a tidy case study where adopting a new product saves the day. It’s a “lessons learned” story, a look at the value of relentless performance optimization — with some important engineering takeaways.

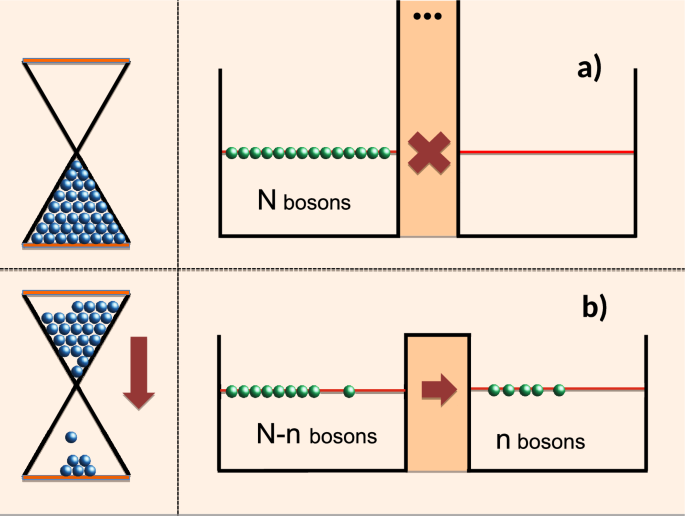

The original system implementation fell far short of the company’s scalability requirements. The ultimate goal was to support 1 billion features per second, but the system failed under a load of just 1 million. With some smart problem solving, though, the team pulled it off. Let’s look at how their engineers pivoted from that initial failure to meet their lofty performance goal without scaling the underlying database.
