xAI picked Ethernet over InfiniBand for its H100 Colossus training cluster

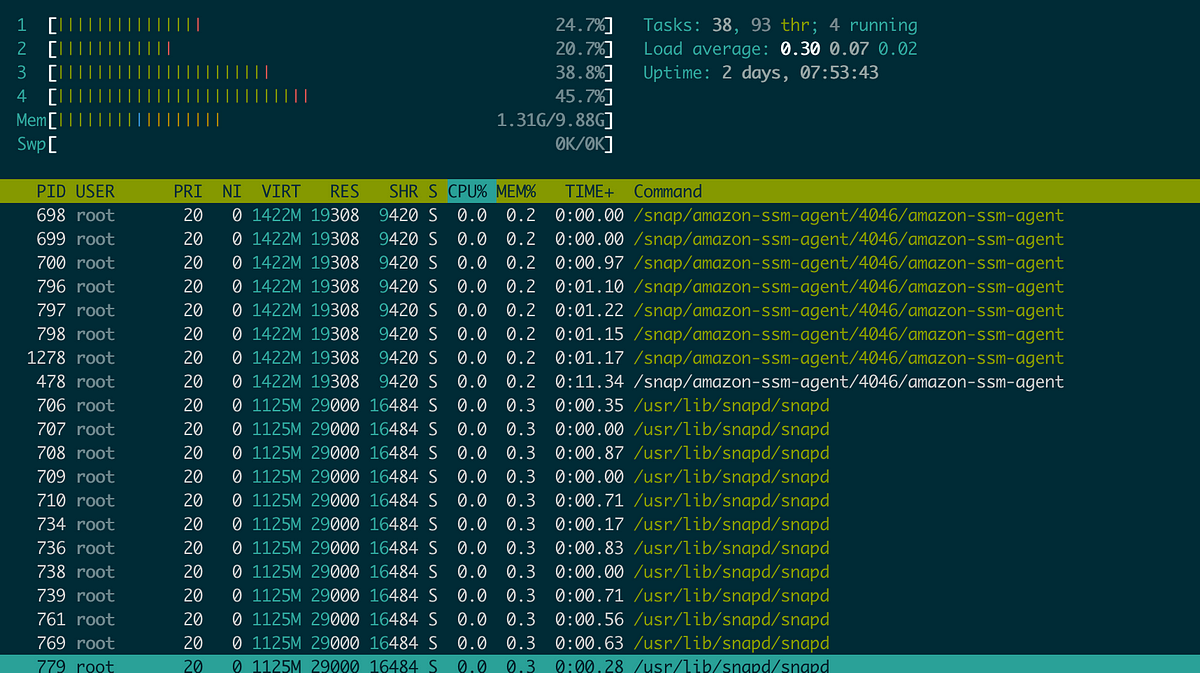

Unlike most AI training clusters, xAI's Colossus with its 100,000 Nvidia Hopper GPUs doesn't use InfiniBand. Instead, the massive system, which Nvidia bills as the "world's largest AI supercomputer," was built using the GPU giant's Spectrum-X Ethernet fabric.

Colossus was built to train xAI's Grok series of large language models, which power the chatbot built into Elon Musk's echo chamber colloquially known as Tw..., right, X.

The system as a whole is massive, with more than 2.5 times as many GPUs as Frontier, the top-ranked US supercomputer at Oak Ridge National Laboratory, which has nearly 38,000 AMD MI250X accelerators. Perhaps more impressively, Colossus was deployed in just 122 days and took 19 days to go from first deployment to training.

In terms of peak performance, the xAI cluster boasts 98.9 exaFLOPS of dense FP16/BF16 compute. That figure doubles if xAI's models can take advantage of sparsity during training, and doubles again to 395 exaFLOPS when training at sparse FP8 precision.
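For readers who want to check the arithmetic, here is a rough back-of-the-envelope sketch. The per-GPU figure of 989 teraFLOPS is an assumption, derived by dividing the quoted 98.9 exaFLOPS by 100,000 GPUs. The function and constant names are purely illustrative, and these are theoretical peaks, not sustained training throughput.

```python
# Back-of-the-envelope check of the peak figures quoted in the article.
GPU_COUNT = 100_000          # GPU count stated in the article
DENSE_16BIT_TFLOPS = 989     # ASSUMPTION: per-GPU dense FP16/BF16 peak,
                             # implied by 98.9 exaFLOPS / 100,000 GPUs
TFLOPS_PER_EXAFLOPS = 1_000_000


def cluster_peak_exaflops(gpu_count: int, per_gpu_tflops: float) -> float:
    """Theoretical aggregate peak, assuming perfect scaling across all GPUs."""
    return gpu_count * per_gpu_tflops / TFLOPS_PER_EXAFLOPS


dense_16bit = cluster_peak_exaflops(GPU_COUNT, DENSE_16BIT_TFLOPS)
sparse_16bit = 2 * dense_16bit   # sparsity doubles the peak
sparse_fp8 = 2 * sparse_16bit    # halving precision to FP8 doubles it again

print(f"dense FP16/BF16:  {dense_16bit:.1f} exaFLOPS")
print(f"sparse FP16/BF16: {sparse_16bit:.1f} exaFLOPS")
print(f"sparse FP8:       {sparse_fp8:.1f} exaFLOPS")
```

The last line works out to roughly 395.6 exaFLOPS, in line with the 395 exaFLOPS quoted for sparse FP8.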
