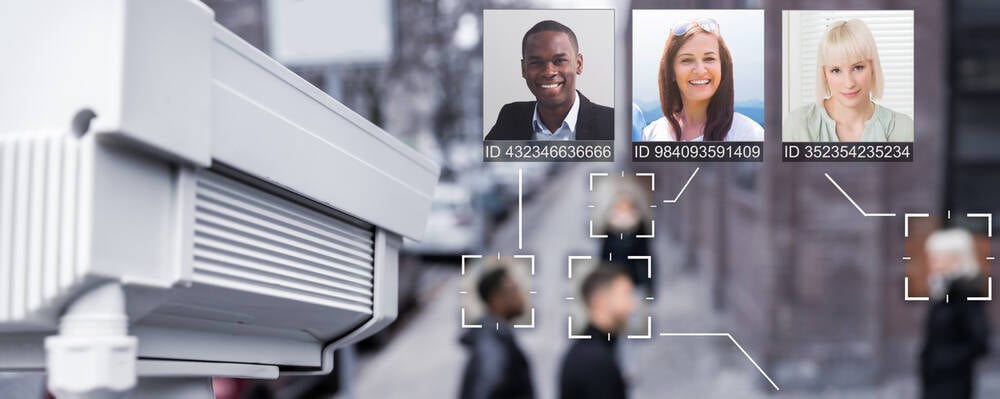

Watchdog finds AI tools can be used unlawfully to filter candidates by race, gender

The UK's data protection watchdog has found that AI recruitment technologies can filter candidates according to protected characteristics including race, gender, and sexual orientation.

The Information Commissioner's Office also said that some AI tools, in trying to guard against unfair bias in recruitment, inferred these characteristics from information in candidates' applications. Such inferences were not enough to monitor bias effectively, and they were often processed without a lawful basis and without the candidate's knowledge, the ICO said.

The findings are part of an audit [PDF], carried out between August 2023 and May 2024, of organizations that develop or provide AI-powered recruitment tools.

In a prepared statement, Ian Hulme, ICO director of assurance, said: "AI can bring real benefits to the hiring process, but it also introduces new risks that may cause harm to jobseekers if it is not used lawfully and fairly. Our intervention has led to positive changes by the providers of these AI tools to ensure they are respecting people's information rights.

"Our report signals our expectations for the use of AI in recruitment, and we're calling on other developers and providers to also action our recommendations as a priority. That's so they can innovate responsibly while building trust in their tools from both recruiters and jobseekers."

:focal(0x0:3000x2000)/static.texastribune.org/media/files/a2eb1e91017143006fc84dffc91a9e89/Lake%20Texoma%20LS%20TT%2001.jpg)