
Did Stanford just prototype the future of AR glasses?

By Umar Shakir, a news writer fond of the electric vehicle lifestyle and things that plug in via USB-C. He spent over 15 years in IT support before joining The Verge.

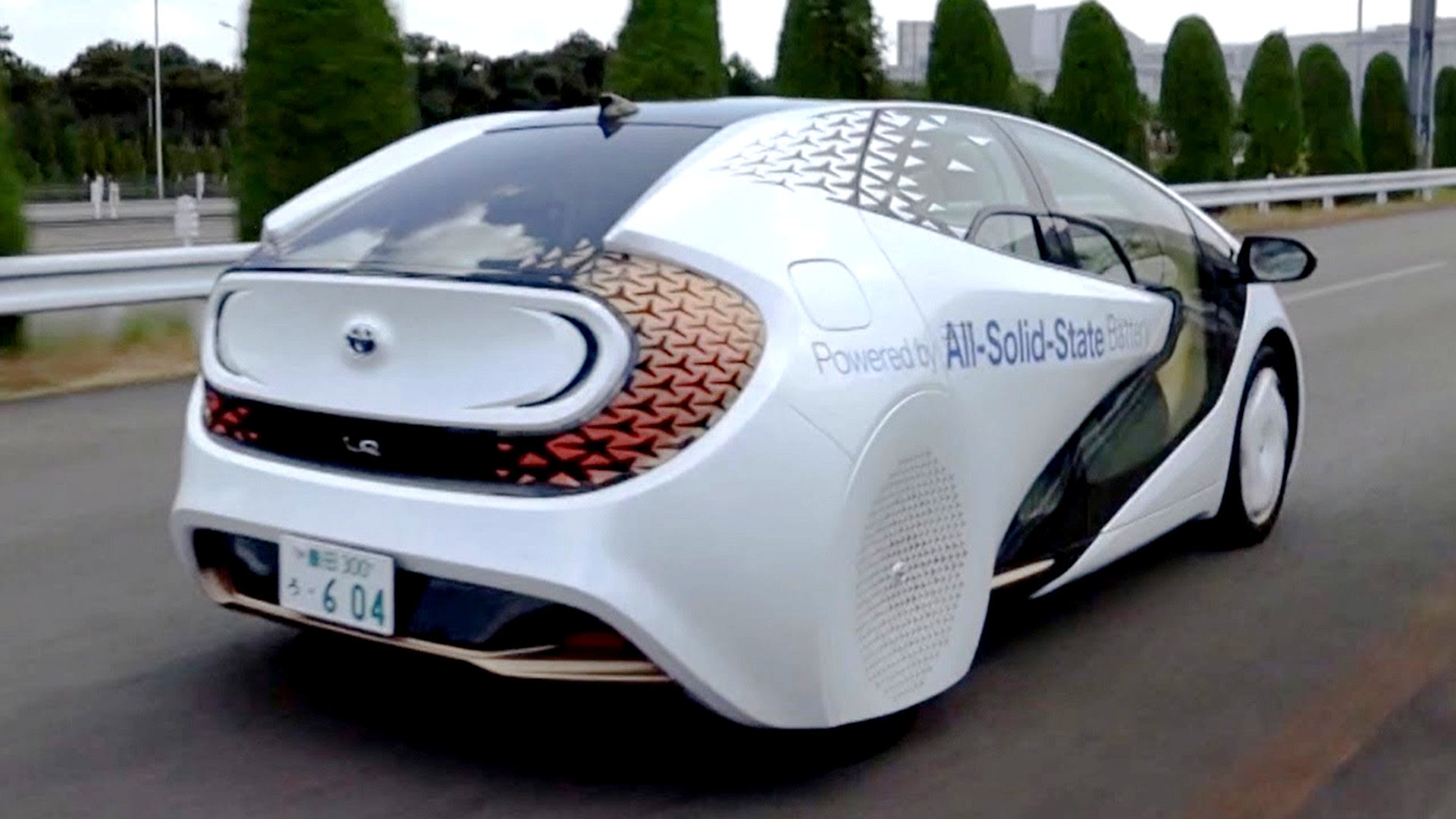

A research team at Stanford is developing a new AI-assisted holographic imaging technology that it claims is thinner, lighter, and higher quality than anything its researchers have seen. Could it take augmented reality (AR) headsets to the next level?

For now, the lab prototype has an anemic field of view of just 11.7 degrees, far smaller than that of a Magic Leap 2 or even a Microsoft HoloLens.

But Stanford’s Computational Imaging Lab has an entire page of visual aids suggesting it could be onto something special: a thinner stack of holographic components that could nearly fit into standard glasses frames and that could be trained to project realistic, full-color, moving 3D images that appear at varying depths.

Like other AR eyeglasses, the prototype uses waveguides, components that guide light through the glasses and into the wearer’s eyes. But the researchers say they’ve developed a unique “nanophotonic metasurface waveguide” that can “eliminate the need for bulky collimation optics,” along with a “learned physical waveguide model” that uses AI algorithms to drastically improve image quality. According to the study, the models “are automatically calibrated using camera feedback.”