Don't Worry About the Vase

At this point, we can confidently say that no, capabilities are not hitting a wall. Capacity density, how much you can pack into a given space, is way up and rising rapidly, and we are starting to figure out how to use it.

Not only did we get o1 and o1 pro and also Sora and other upgrades from OpenAI, we also got Gemini 1206 and then Gemini Flash 2.0 and the agent Jules (am I the only one who keeps reading this as Jarvis?) and Deep Research, and Veo, and Imagen 3, and Genie 2 all from Google. Meta’s Llama 3.3 dropped, claiming their 70B is now as good as the old 405B, and basically no one noticed.

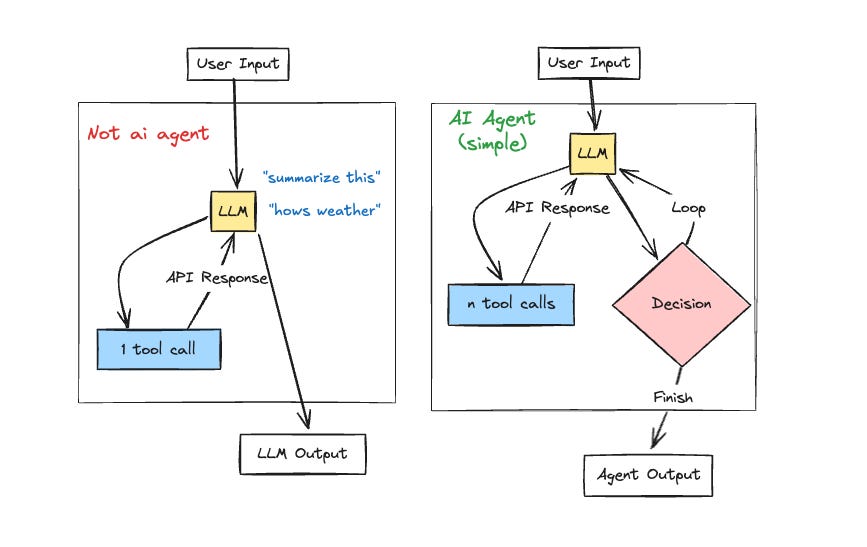

This morning I saw Cursor now offers ‘agent mode.’ And hey there, Devin. And Palisade found that a little work made agents a lot more effective.

Tomorrow I will post about the o1 Model Card, then next week I will follow up on what Apollo found regarding potential model scheming. I plan to get to Gemini Flash after that, which should give people time to try it out. For now, this post won’t cover any of that.