Don't Worry About the Vase

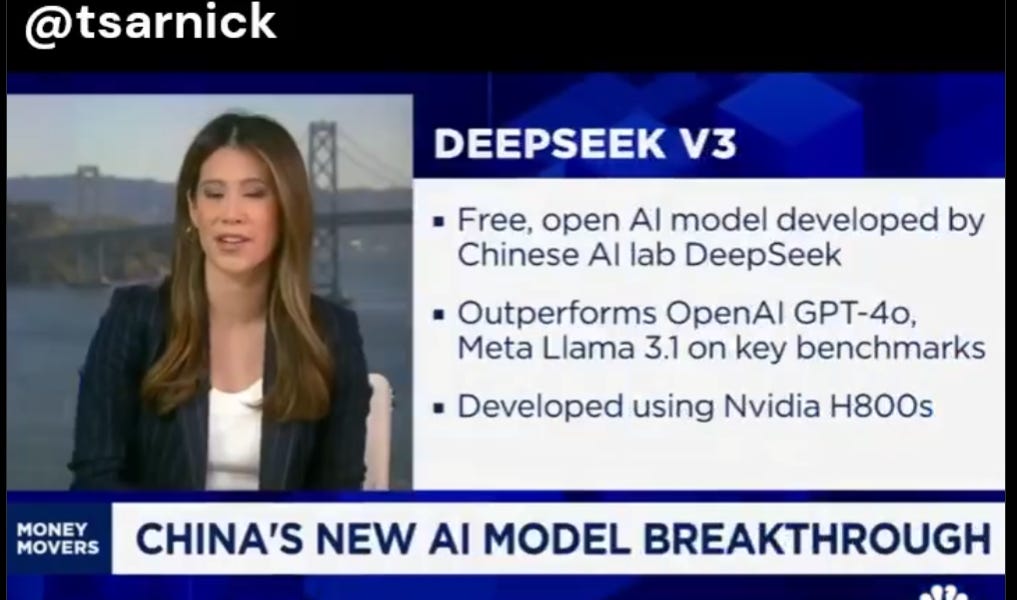

DeepSeek v3 seems clearly to be the best open model, the best model at its price point, and the best model with only 37B active parameters or with a training cost under $6 million.

Anecdotal reports and alternative benchmarks tell us it’s not as good as Claude Sonnet, but it is plausibly on the level of GPT-4o.

The big thing they did was use only 37B active parameters per token, out of 671B total parameters, via a highly aggressive mixture of experts (MOE) structure.
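To make the active-versus-total distinction concrete, here is a minimal sketch of what sparse routing buys. The two parameter counts come from the text above; the function names, the four-expert toy scores, and the choice of `k` are illustrative assumptions, not DeepSeek's actual router.

```python
TOTAL_PARAMS_B = 671   # total parameters in billions, from the text
ACTIVE_PARAMS_B = 37   # parameters used per token in billions, from the text


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters that run for any single token."""
    return active_b / total_b


def route_top_k(scores: list[float], k: int) -> list[int]:
    """Return the indices of the k highest-scoring experts for one token.

    Hypothetical helper: a real router computes scores from the token's
    hidden state; here they are passed in directly.
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"Active share per token: {frac:.1%}")
    # Toy example: 4 experts, each token is sent to only 2 of them.
    print(route_top_k([0.1, 0.9, 0.3, 0.7], k=2))
```

The point of the design is that per-token compute scales with the active parameters, while capacity scales with the total, so roughly one parameter in eighteen does work on any given token.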

They used a Multi-Head Latent Attention (MLA) architecture, auxiliary-loss-free load balancing, and a complementary sequence-wise auxiliary loss.
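As a rough illustration of how load balancing can work without an auxiliary loss, the sketch below keeps a per-expert bias that is added to routing scores only when choosing experts, then nudges that bias down for overloaded experts and up for underloaded ones after each batch. This is a toy version under stated assumptions: the function names, the fixed `step` size, and comparing loads against the batch mean are all illustrative choices, not the authors' implementation.

```python
def select_experts(scores: list[float], bias: list[float], k: int) -> list[int]:
    """Pick the top-k experts by (score + bias).

    Assumption: the bias only influences which experts are selected,
    not how their outputs would later be weighted.
    """
    adjusted = [s + b for s, b in zip(scores, bias)]
    ranked = sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)
    return sorted(ranked[:k])


def count_loads(batch_scores: list[list[float]], bias: list[float], k: int) -> list[int]:
    """Count how many tokens in the batch were routed to each expert."""
    loads = [0] * len(bias)
    for scores in batch_scores:
        for expert in select_experts(scores, bias, k):
            loads[expert] += 1
    return loads


def update_bias(bias: list[float], loads: list[int], step: float) -> list[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    updated = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            updated.append(b - step)
        elif load < mean_load:
            updated.append(b + step)
        else:
            updated.append(b)
    return updated


if __name__ == "__main__":
    # Toy batch: every token prefers expert 0, so it starts out overloaded.
    batch = [[0.9, 0.5, 0.4, 0.1]] * 8
    bias = [0.0] * 4
    loads = count_loads(batch, bias, k=1)
    print(loads, update_bias(bias, loads, step=0.1))
```

Because the correction happens through the routing bias rather than through an extra loss term, the balancing pressure does not add a competing gradient to the language-modeling objective, which is the appeal of the approach.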

They designed everything to be fully integrated and efficient, co-designing the model with the hardware it runs on, and claim to have solved several optimization problems, including communication and allocation within the MOE.

They used their internal o1-style reasoning model to generate synthetic fine-tuning data. Essentially all the compute costs were in the pre-training step.