Update #4: OpenAI's Copilot and DeepMind on Reward and AGI

Welcome to the fourth Update from the Gradient! If you were referred by a friend, we’d love to see you subscribe and follow us on Twitter!

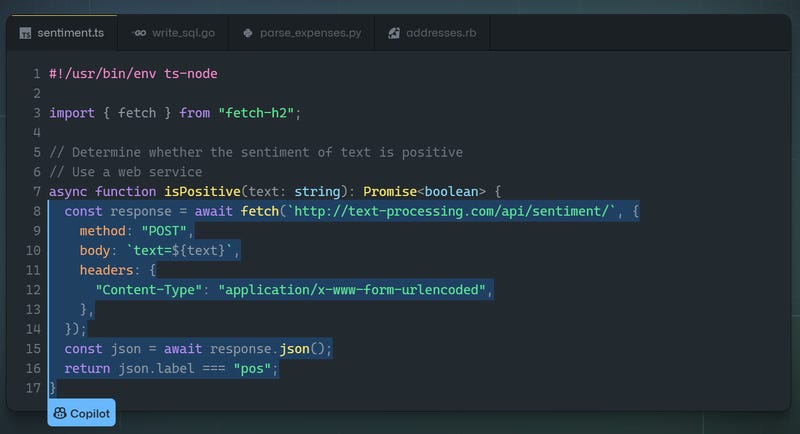

Summary

The AI and CS communities alike were taken aback by the announcement of “GitHub Copilot” at the end of June. Copilot provides AI-powered code completion: it can finish a line of code or even write an entire function given only the corresponding documentation comment. Code completion is a standard feature of programming tools, but it is typically limited to filling in function or variable names, whereas Copilot can complete many lines or whole functions based on the code that precedes them.

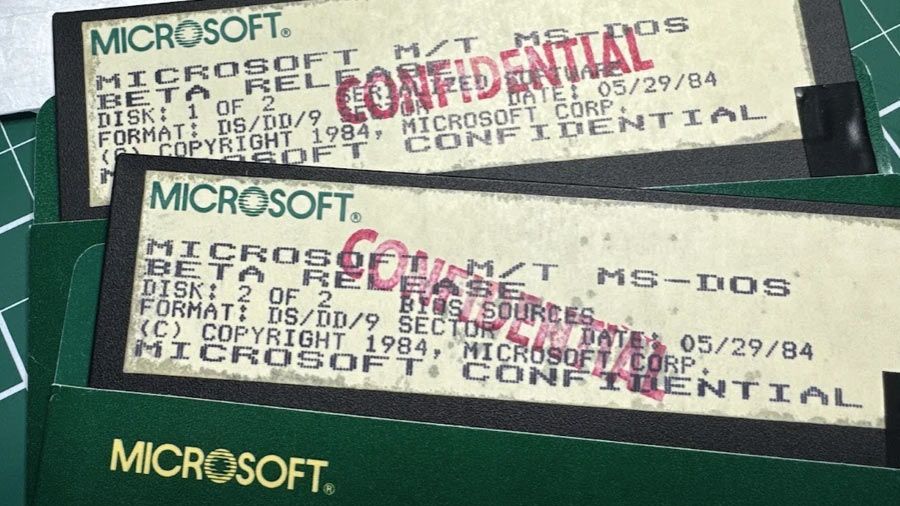

Copilot is powered by Codex, an OpenAI GPT-3 model fine-tuned on billions of lines of public code from GitHub. Codex is almost exactly the same as GPT-3; the main distinction is that it was trained on code (either from scratch or by fine-tuning a pre-trained model), as OpenAI later described in the paper “Evaluating Large Language Models Trained on Code”. As with GPT-3, a key aspect of the approach is training the model on a huge amount of data:

“Our training dataset was collected in May 2020 from 54 million public software repositories hosted on GitHub, containing 179 GB of unique Python files under 1 MB. We filtered out files which were likely auto-generated, had average line length greater than 100, had maximum line length greater than 1000, or contained a small percentage of alphanumeric characters. After filtering, our final dataset totaled 159 GB.”
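To make the filtering step in that quote concrete, here is a minimal Python sketch of what such a per-file filter might look like. It is not OpenAI's actual code. The function name `keep_file` is hypothetical, and the `min_alnum_fraction` cutoff of 0.25 is an assumption, since the paper only says "a small percentage". The check for auto-generated files is left out because the quote does not say how it was done.

```python
def keep_file(
    text: str,
    max_bytes: int = 1_000_000,        # "under 1 MB" (from the quote)
    max_avg_line_len: float = 100,     # from the quote
    max_line_len: int = 1000,          # from the quote
    min_alnum_fraction: float = 0.25,  # ASSUMPTION: the quote gives no number
) -> bool:
    """Return True if a source file passes the quoted heuristics."""
    if not text.strip():
        return False  # empty or whitespace-only files carry no signal

    # Size limit, measured on the UTF-8 encoding of the file.
    if len(text.encode("utf-8")) >= max_bytes:
        return False

    lengths = [len(line) for line in text.splitlines()]

    # Very long average or maximum line length suggests minified
    # or machine-produced content rather than hand-written code.
    if sum(lengths) / len(lengths) > max_avg_line_len:
        return False
    if max(lengths) > max_line_len:
        return False

    # Files that are mostly punctuation or whitespace are dropped.
    alnum = sum(ch.isalnum() for ch in text)
    if alnum / len(text) < min_alnum_fraction:
        return False

    return True
```

Under these assumptions, a short hand-written function passes, while a file consisting of one 2,000-character string literal or of nothing but separator characters is rejected.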
