We Asked 100+ AI Models to Write Code. Here’s How Many Failed Security Tests.

If you think AI-generated code is saving time and boosting productivity, you’re right. But here’s the problem: it’s also introducing security vulnerabilities… a lot of them. In our new 2025 GenAI Code Security Report, we tested over 100 large language models across Java, Python, C#, and JavaScript. The goal? To see if today’s most advanced AI systems can write secure code.

Unfortunately, the state of AI-generated code security in 2025 is worse than you think. What we found should be a wake-up call for developers, security leaders, and anyone relying on AI to move faster.

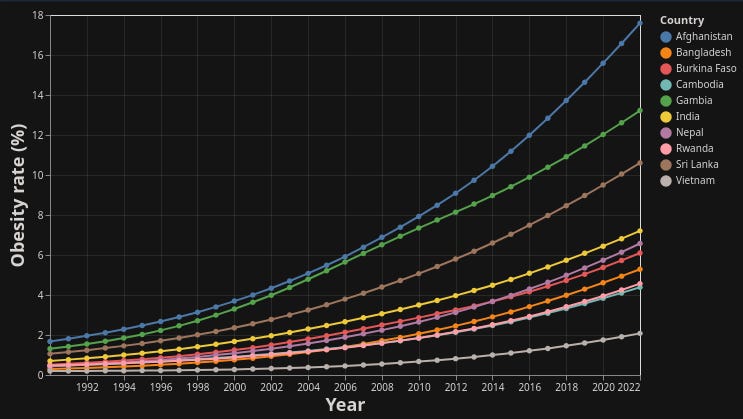

Figure 2: Security Pass Rate vs. LLM Release Date, Stratified by Language

Other major languages didn't fare much better.

These weren't obscure, edge-case vulnerabilities, either. In fact, one of the most frequent issues was Cross-Site Scripting (CWE-80): AI tools failed to defend against it in 86% of relevant code samples.
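To make the failure mode concrete, here is a minimal illustrative sketch of the kind of pattern CWE-80 describes. It is not taken from the report, and the function names are hypothetical. The unsafe version interpolates user input into HTML verbatim. The safe version neutralizes HTML metacharacters first using Python's standard-library `html.escape`.

```python
import html


def render_greeting_unsafe(name: str) -> str:
    # Vulnerable pattern (CWE-80): user input is interpolated into HTML verbatim,
    # so a payload such as <script>...</script> reaches the browser intact.
    return f"<p>Hello, {name}!</p>"


def render_greeting_safe(name: str) -> str:
    # Neutralize HTML metacharacters (&, <, >, ", ') before interpolation,
    # so the browser renders the input as text instead of executing it.
    return f"<p>Hello, {html.escape(name, quote=True)}!</p>"


if __name__ == "__main__":
    payload = "<script>alert('xss')</script>"
    print(render_greeting_unsafe(payload))  # script tag survives unchanged
    print(render_greeting_safe(payload))    # tags appear as &lt;script&gt;...
```

In a real web application, a templating engine with auto-escaping enabled by default is usually a safer choice than escaping by hand. The point of the sketch is only that the secure version differs from the insecure one by a single call, and that is the call the models frequently omitted.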