Class action lawsuit on AI-related discrimination reaches final settlement

Mary Louis’ excitement to move into an apartment in Massachusetts in the spring of 2021 turned to dismay when Louis, a Black woman, received an email saying that a “third-party service” had denied her tenancy.

That third-party service included an algorithm designed to score rental applicants, which became the subject of a class action lawsuit, with Louis at the helm, alleging that the algorithm discriminated on the basis of race and income.

On Wednesday, a federal judge approved a settlement in the lawsuit, one of the first of its kind, under which the company behind the algorithm agreed to pay more than $2.2 million and roll back certain parts of its screening products that the lawsuit alleged were discriminatory.

The settlement does not include any admission of fault by the company, SafeRent Solutions, which said in a statement that while it “continues to believe the SRS Scores comply with all applicable laws, litigation is time-consuming and expensive.”

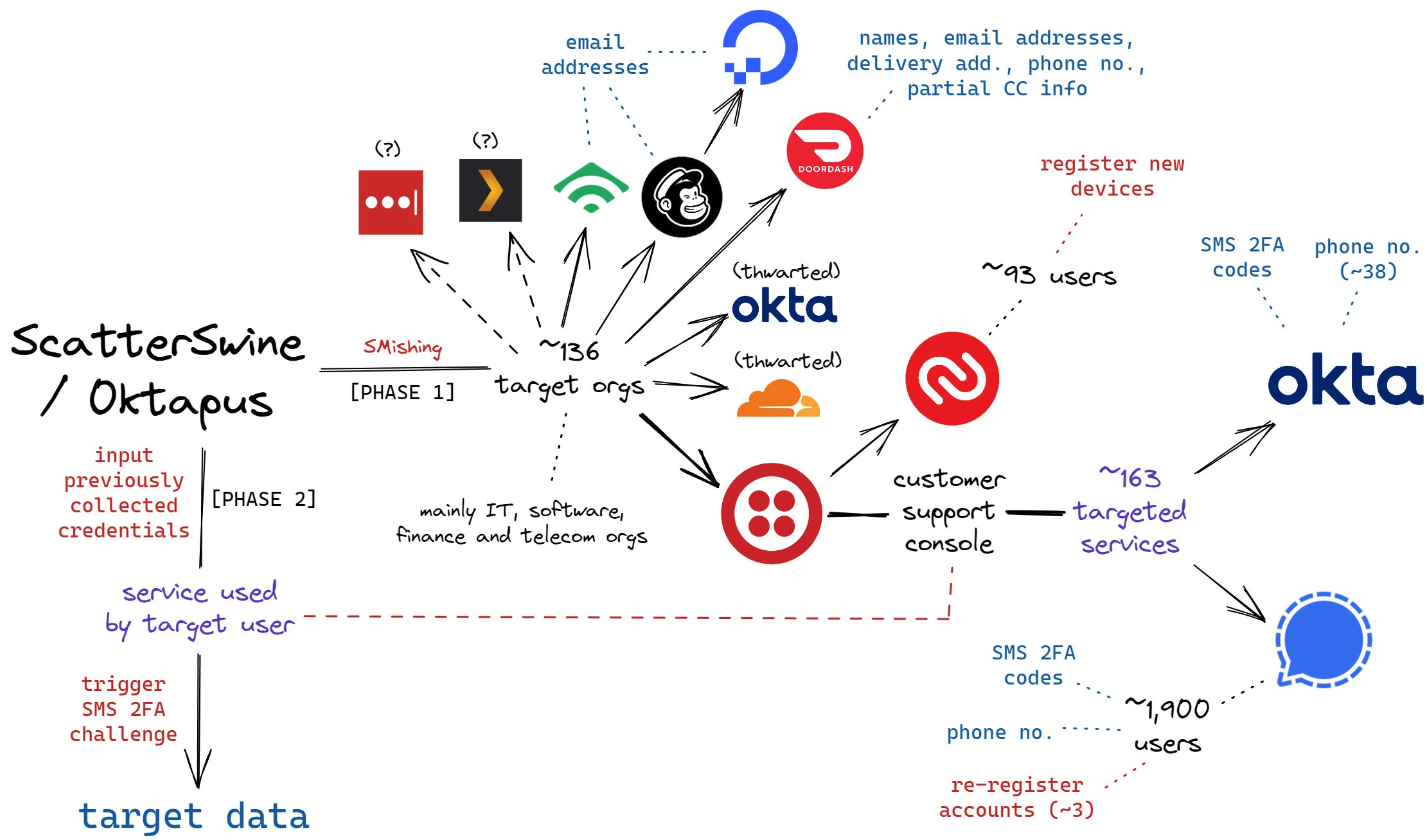

While such lawsuits might be relatively new, the use of algorithms or artificial intelligence programs to screen or score Americans isn’t. For years, AI has been quietly helping to make consequential decisions for U.S. residents.
