Going Big and Small for 2025

2024 marked a significant evolution in Burn's architecture. Traditional deep learning frameworks often require developers to compromise between performance, portability, and flexibility; we aimed to transcend these trade-offs. Looking ahead to 2025, we are committed to applying this philosophy across the entire computing stack, encompassing everything from embedded devices to data centers.

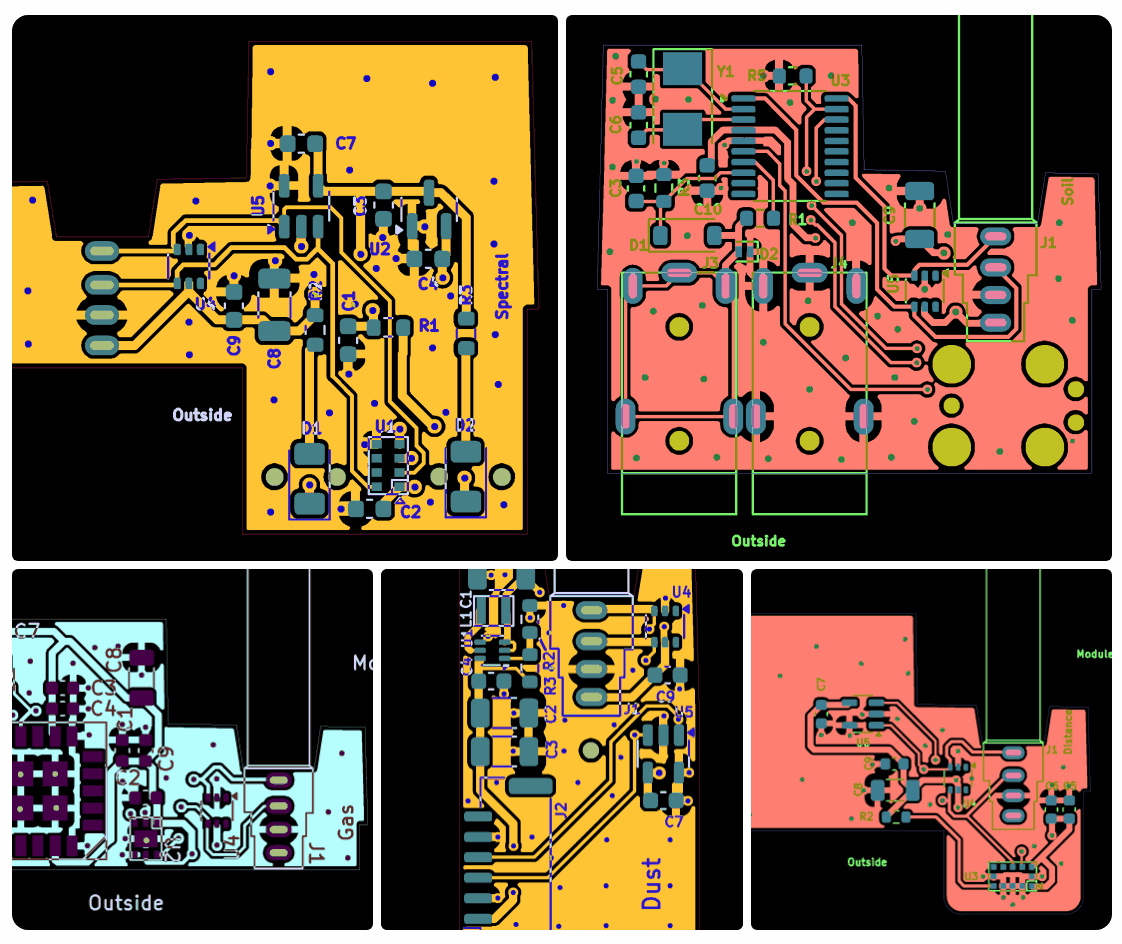

The year began with a limitation: our WGPU backend relied on basic WGSL templates, which restricted its adaptability. That challenge led to the creation of CubeCL [1], our solution for unified kernel development. The task was complex: designing a single abstraction that fits diverse hardware while sustaining top performance. The results have validated this strategy, with performance now matching or exceeding LibTorch in the majority of our benchmarks.

The backend ecosystem now includes CUDA [2], HIP/ROCm [3], and an advanced WGPU implementation supporting both WebGPU and Vulkan [4]. The most notable achievement to date is performance parity across backends on identical hardware. For example, matrix multiplication performs nearly identically whether it runs on CUDA or Vulkan, a direct result of our platform-agnostic approach to optimization.
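To make "write the kernel once, run it on any backend" concrete, here is a minimal std-only sketch of the pattern. It is an illustration under stated assumptions, not CubeCL's or Burn's API: `Runtime`, `Sequential`, `Threaded`, and `matmul` are hypothetical names, and the two CPU runtimes merely stand in for GPU backends such as CUDA and Vulkan. The matmul kernel is written once as a per-invocation function, and each runtime decides only how the invocations are scheduled.

```rust
use std::thread;

/// Hypothetical stand-in for a compute runtime (not CubeCL's or Burn's API).
trait Runtime {
    /// Runs `kernel` once for every invocation index in `0..n` and collects the results.
    fn launch<K>(&self, n: usize, kernel: K) -> Vec<f32>
    where
        K: Fn(usize) -> f32 + Sync;
}

/// Executes every invocation in order on the current thread.
struct Sequential;

impl Runtime for Sequential {
    fn launch<K>(&self, n: usize, kernel: K) -> Vec<f32>
    where
        K: Fn(usize) -> f32 + Sync,
    {
        (0..n).map(kernel).collect()
    }
}

/// Splits the invocations into contiguous chunks, one scoped thread per chunk.
struct Threaded {
    workers: usize,
}

impl Runtime for Threaded {
    fn launch<K>(&self, n: usize, kernel: K) -> Vec<f32>
    where
        K: Fn(usize) -> f32 + Sync,
    {
        let mut out = vec![0.0f32; n];
        let chunk = n.div_ceil(self.workers.max(1)).max(1);
        let kernel = &kernel;
        thread::scope(|s| {
            for (c, slice) in out.chunks_mut(chunk).enumerate() {
                s.spawn(move || {
                    // Each thread fills its own disjoint slice of the output.
                    for (i, slot) in slice.iter_mut().enumerate() {
                        *slot = kernel(c * chunk + i);
                    }
                });
            }
        });
        out
    }
}

/// The matmul "kernel", written once: invocation `idx` computes C[row][col]
/// for square n x n row-major matrices, independent of the runtime used.
fn matmul<R: Runtime>(rt: &R, a: &[f32], b: &[f32], n: usize) -> Vec<f32> {
    rt.launch(n * n, |idx| {
        let (row, col) = (idx / n, idx % n);
        (0..n).map(|k| a[row * n + k] * b[k * n + col]).sum::<f32>()
    })
}

fn main() {
    let n = 3;
    let a: Vec<f32> = (0..n * n).map(|v| v as f32).collect();
    let b: Vec<f32> = (0..n * n).map(|v| (v % 2) as f32).collect();

    // Same kernel, two runtimes: the results must agree exactly,
    // because each element is summed in the same order.
    let c_seq = matmul(&Sequential, &a, &b, n);
    let c_par = matmul(&Threaded { workers: 4 }, &a, &b, n);
    assert_eq!(c_seq, c_par);
    println!("{:?}", c_seq); // [1.0, 2.0, 1.0, 4.0, 8.0, 4.0, 7.0, 14.0, 7.0]
}
```

The design choice being illustrated is the separation of concerns: the kernel describes what one invocation computes, while scheduling and hardware details live entirely in the runtime, so optimizing a kernel benefits every backend at once.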
