RWKV Open Source Development Blog

We are releasing RWKV-v5 Eagle v2, licensed under Apache 2.0, which can be used personally or commercially without restrictions.

Use our reference pip inference package, or any other community inference option (Desktop App, RWKV.cpp, etc.), and run it anywhere (even locally).

The original EagleX 7B 1.7T, trained by Recursal AI, made history as the first sub-quadratic model to pass llama2 7B 2T on average in English eval.

The following report follows the same general format as the 1.7T model release in its eval details, to make direct comparison easier.

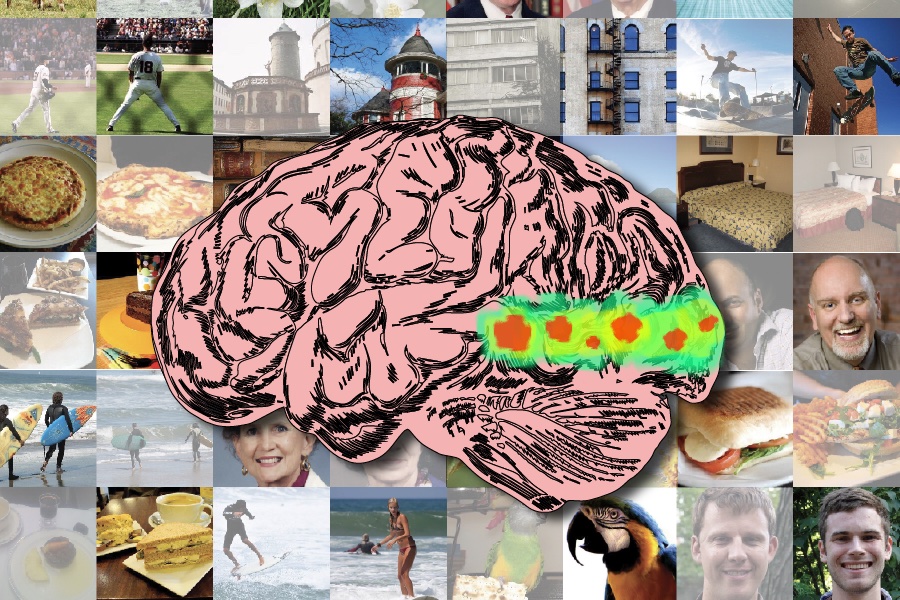

We start with the basics: perplexity, which is the loss value against the test dataset (lower score = better), i.e. how good the model is at next-token prediction.
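For readers who want to see the arithmetic, here is a minimal sketch of how perplexity is commonly computed from a model's next-token predictions. It assumes the usual definition (the exponential of the mean negative log-likelihood per token). The function name `perplexity` and the toy inputs are made up for illustration, and this is not the evaluation code used for the numbers in this report.

```python
import math

def perplexity(token_log_probs):
    """Perplexity from the natural-log probabilities a model assigned
    to each correct next token (hypothetical helper, for illustration)."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    # Mean negative log-likelihood per token, i.e. the cross-entropy loss.
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    # Perplexity is the exponential of that loss.
    return math.exp(mean_nll)

# Toy inputs: one model gives every correct token probability 0.25,
# a more confident model gives every correct token probability 0.5.
unsure = [math.log(0.25)] * 8
confident = [math.log(0.5)] * 8

print(perplexity(unsure))
print(perplexity(confident))
```

Under this definition, a model that spreads its guess evenly over four candidates at every step lands at a perplexity of about 4, and a more confident (and correct) model scores lower, which is why lower is better.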

Why do experts care about perplexity? Evals in general can be very subjective and opinion-driven, and commonly give mixed results. Perplexity, in a way, gives the TLDR summary for most experts to start with.

EagleX maintains the lead for best-in-class multi-lingual performance, with the incremental improvements we’re making to the Eagle line of models.