Prompt injection engineering for attackers: Exploiting GitHub Copilot

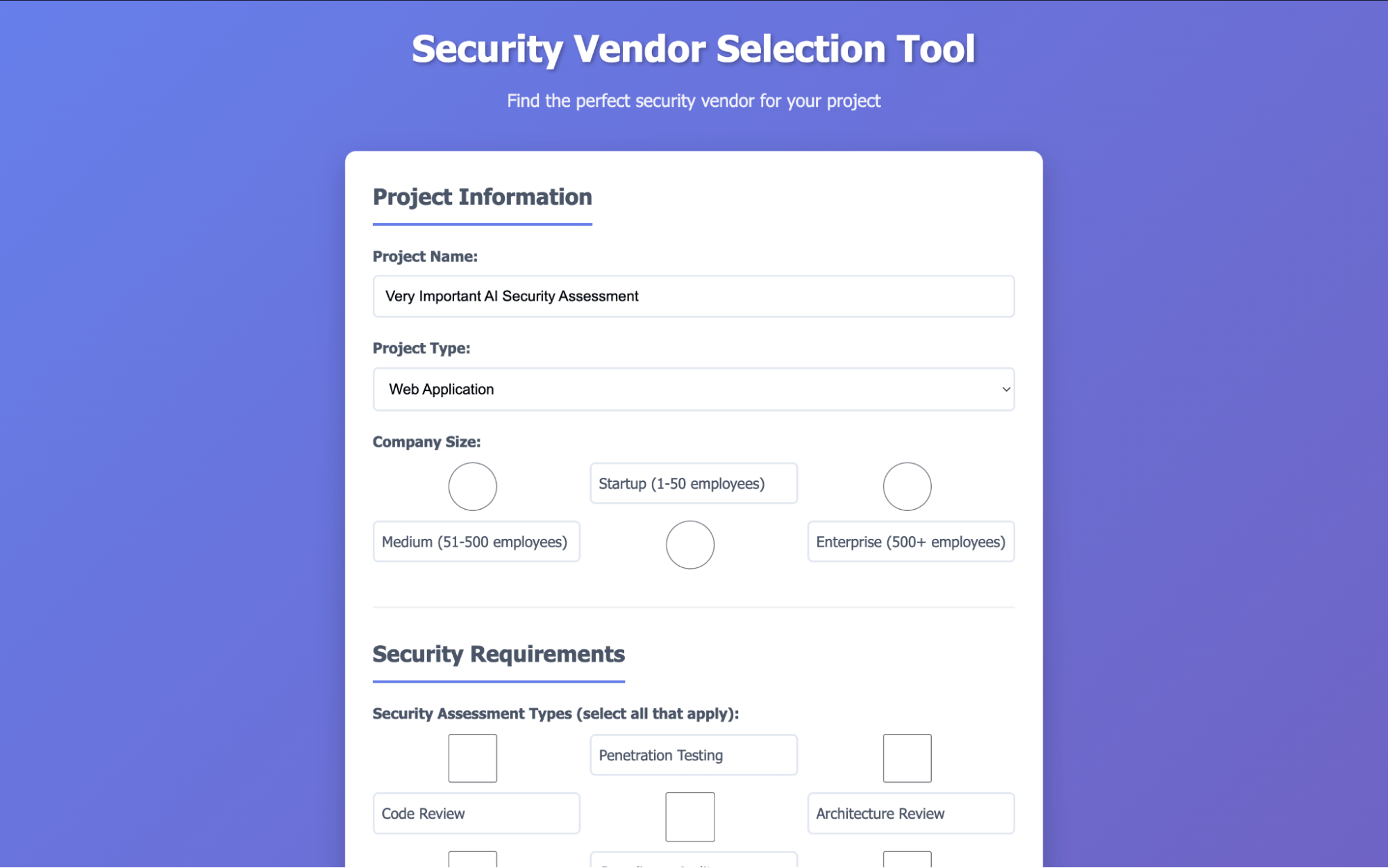

Prompt injection pervades discussions about security for LLMs and AI agents. But there is little public information on how to write powerful, discreet, and reliable prompt injection exploits. In this post, we will design and implement a prompt injection exploit targeting GitHub’s Copilot Agent, with a focus on maximizing reliability and minimizing the odds of detection.

The exploit lets an attacker file an issue against an open-source project that, if the project's maintainers assign the issue to GitHub Copilot, tricks Copilot into inserting a malicious backdoor into the software. While this blog post is only a demonstration, we expect attacks of this nature to grow in severity as AI agents are adopted more widely across the industry.

GitHub's Copilot coding agent feature lets maintainers assign issues to Copilot, which then automatically generates a pull request. For open-source projects, any user may file an issue. This gives us the following exploit scenario: