17× Greener: How One AI Model Could’ve Saved 15,225 kg CO₂e

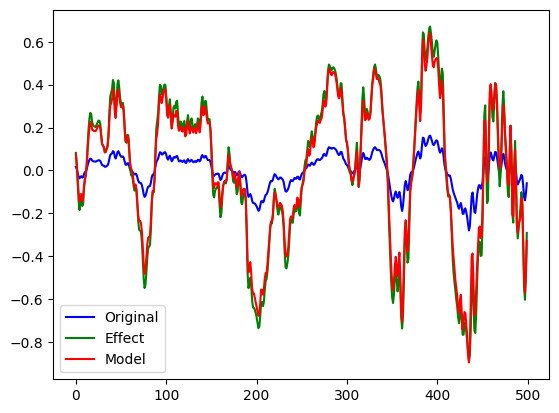

Training AI models in cleaner regions can cut emissions by over 90%. Training Stable Diffusion in a low-carbon region could have avoided an estimated 15,225 kg CO₂e and saved over $150,000 in compute costs.

Emad Mostaque, former CEO of Stability AI, confirmed that the next version (0.5) of Stable Diffusion cost an eye-watering $600,000 to train. We can reasonably assume that version 0.4 had a similar cost profile, likely running at around $4/hour, which highlights just how expensive training large AI models can be.
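As a quick sanity check on those figures, the sketch below works out how many billed hours a $600,000 budget buys at the estimated $4/hour. Both inputs come from the paragraph above; the $4/hour rate is itself an estimate, so treat the result as a back-of-the-envelope number rather than a reported one.

```python
# Back-of-the-envelope estimate: compute hours implied by the reported
# training cost and the *estimated* hourly rate (both from the article).
TRAINING_COST_USD = 600_000  # reported training cost
HOURLY_RATE_USD = 4.0        # estimated rate, not a quoted price


def implied_compute_hours(total_cost: float, hourly_rate: float) -> float:
    """Return how many billed hours a budget buys at a flat hourly rate."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return total_cost / hourly_rate


hours = implied_compute_hours(TRAINING_COST_USD, HOURLY_RATE_USD)
print(f"{hours:,.0f} compute-hours")  # 150,000 compute-hours
```

In other words, at that rate the training run corresponds to roughly 150,000 hours of billed compute.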

Training this model in a region like Paris would have produced an estimated 900 kg of CO₂e, compared to 16,125 kg in us-east-1. That is a reduction of about 94%, and the 15,225 kg avoided is equivalent to roughly 15 flights from New York to London, alongside a potential cost saving of over $150,000.
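The headline numbers follow directly from the two regional estimates. This minimal sketch uses only the article's figures (16,125 kg in us-east-1, 900 kg in Paris) to derive the absolute saving, the percentage reduction, and the ratio between the two.

```python
# Compare the article's two regional emission estimates for the same training job.
US_EAST_KG = 16_125  # estimated kg CO2e in us-east-1
PARIS_KG = 900       # estimated kg CO2e in a Paris region


def compare(baseline_kg: float, alternative_kg: float) -> tuple[float, float, float]:
    """Return (kg saved, percent reduction, baseline/alternative ratio)."""
    saved = baseline_kg - alternative_kg
    reduction_pct = 100 * saved / baseline_kg
    ratio = baseline_kg / alternative_kg
    return saved, reduction_pct, ratio


saved, pct, ratio = compare(US_EAST_KG, PARIS_KG)
print(f"saved {saved:,} kg CO2e ({pct:.1f}% less, {ratio:.1f}x lower)")
# saved 15,225 kg CO2e (94.4% less, 17.9x lower)
```

The ratio of about 17.9 is consistent with the "17× greener" figure in the title.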

CarbonRunner doesn't just bring carbon-aware infrastructure to CI/CD workflows on GitHub Actions. We also offer a powerful API that helps you train AI/ML models in the lowest-carbon regions across multiple clouds.
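To illustrate the general idea behind carbon-aware placement (this is a hypothetical sketch, not CarbonRunner's API), the function below picks the candidate region with the lowest estimated emissions for a job of a given length. The per-hour rates are back-derived by dividing the article's regional estimates by the roughly 150,000 hours implied by the $4/hour assumption; the function name and the `"paris"` label are illustrative only.

```python
# Hypothetical illustration of carbon-aware region selection.
# Not CarbonRunner's API; names and rates are for illustration only.
from typing import Mapping, Tuple


def pick_greenest_region(
    job_hours: float, kg_per_hour: Mapping[str, float]
) -> Tuple[str, float]:
    """Return (region, estimated kg CO2e) for the lowest-emission candidate."""
    if not kg_per_hour:
        raise ValueError("need at least one candidate region")
    region = min(kg_per_hour, key=kg_per_hour.__getitem__)
    return region, job_hours * kg_per_hour[region]


# Rates back-derived from the article's estimates over ~150,000 hours (an assumption).
rates = {
    "us-east-1": 16_125 / 150_000,  # ~0.1075 kg CO2e per hour
    "paris": 900 / 150_000,         # ~0.006 kg CO2e per hour
}
region, kg = pick_greenest_region(150_000, rates)
print(region, round(kg))  # paris 900
```

A real scheduler would also weigh live grid-intensity data, price, and hardware availability; this sketch only shows the selection step.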

Training Stable Diffusion in us-east-1 burned an estimated 16,125 kg CO₂e. The same training on a low-carbon grid would have cut emissions by about 94%, avoiding 15,225 kg. Clean compute isn't slower; it's just smarter and cheaper!