School of Engineering & Applied Science

Large language models (LLMs) are increasingly automating tasks like translation, text classification and customer service. But tapping into an LLM’s power typically requires users to send their requests to a centralized server — a process that’s expensive, energy-intensive and often slow.

Now, researchers have introduced a technique for compressing an LLM’s reams of data, which could increase privacy, save energy and lower costs.

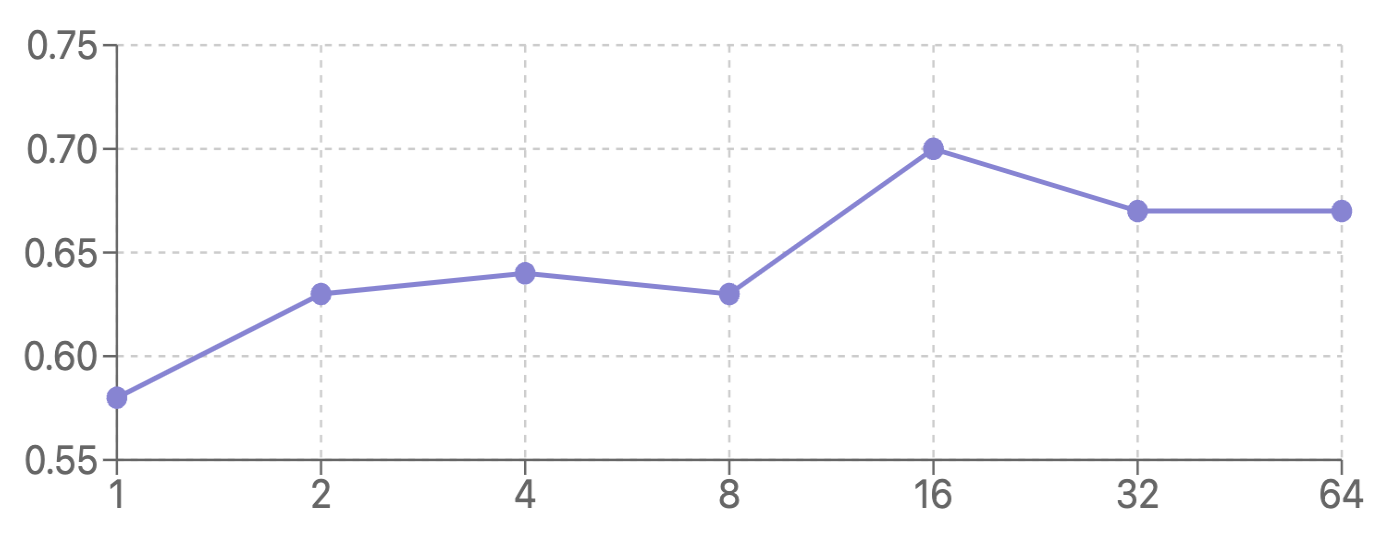

The new algorithm, developed by engineers at Princeton and Stanford Engineering, works by trimming redundancies and reducing the precision of an LLM’s layers of information. This type of leaner LLM could be stored and accessed locally on a device like a phone or laptop and could provide performance nearly as accurate and nuanced as an uncompressed version.
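To make the two ideas concrete, here is a minimal sketch of how trimming redundancy and reducing precision can shrink a matrix of model weights. It is an illustration under stated assumptions, not the researchers' algorithm: the helper names `quantize` and `rank_one`, the toy 8-by-8 matrix, the 4-bit setting and the 32-bit baseline are all hypothetical choices made for this example.

```python
import math

def quantize(values, bits):
    """Round each value to the nearest of 2**bits evenly spaced levels
    between the smallest and largest value (reduced precision)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return list(values)
    step = (hi - lo) / (2 ** bits - 1)
    return [lo + round((v - lo) / step) * step for v in values]

def rank_one(matrix, iters=50):
    """Approximate matrix by outer(u, v) using power iteration.
    A redundant matrix is captured well by such a low-rank factorization."""
    rows, cols = len(matrix), len(matrix[0])
    u, v = [0.0] * rows, [1.0] * cols
    for _ in range(iters):
        u = [sum(matrix[i][j] * v[j] for j in range(cols)) for i in range(rows)]
        norm = math.sqrt(sum(x * x for x in u))
        if norm == 0.0:
            break  # all-zero matrix: nothing to factor
        u = [x / norm for x in u]
        v = [sum(matrix[i][j] * u[i] for i in range(rows)) for j in range(cols)]
    return u, v

def frobenius(matrix):
    """Square root of the sum of squared entries."""
    return math.sqrt(sum(x * x for row in matrix for x in row))

# Hypothetical toy "weight matrix": mostly redundant (rank one) plus small noise.
n = 8
a = [math.sin(i + 1) for i in range(n)]
b = [math.cos(j) for j in range(n)]
W = [[a[i] * b[j] + 0.01 * math.sin(7 * i + 3 * j) for j in range(n)]
     for i in range(n)]

# Step 1: trim redundancy by keeping two short vectors instead of the full matrix.
u, v = rank_one(W)
# Step 2: reduce precision by storing each vector entry with 4 bits.
uq, vq = quantize(u, 4), quantize(v, 4)

approx = [[uq[i] * vq[j] for j in range(n)] for i in range(n)]
diff = [[W[i][j] - approx[i][j] for j in range(n)] for i in range(n)]
rel_err = frobenius(diff) / frobenius(W)

# Rough storage comparison, assuming 32-bit originals and ignoring the
# few extra numbers needed to record each vector's range.
original_bits = n * n * 32
compressed_bits = (n + n) * 4

print(f"relative error: {rel_err:.3f}")
print(f"storage: {original_bits} bits -> {compressed_bits} bits")
```

The toy matrix is built to be highly redundant, so a single pair of vectors recovers most of it. Real model layers are far larger and less tidy, and would typically need more than one pair of factors and a more careful rounding scheme.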

“Any time you can reduce the computational complexity, storage and bandwidth requirements of using AI models, you can enable AI on devices and systems that otherwise couldn’t handle such compute- and memory-intensive tasks,” said study coauthor Andrea Goldsmith, dean of Princeton’s School of Engineering and Applied Science and Arthur LeGrand Doty Professor of Electrical and Computer Engineering.
