When LLMs autonomously attack

Carnegie Mellon researchers show how LLMs can be taught to autonomously plan and execute real-world cyberattacks against enterprise-grade network environments—and why this matters for future defenses.

A team of Carnegie Mellon University researchers has demonstrated that large language models (LLMs) can autonomously plan and execute complex network attacks, a result that sheds light on the emerging capabilities of foundation models and their implications for cybersecurity research.

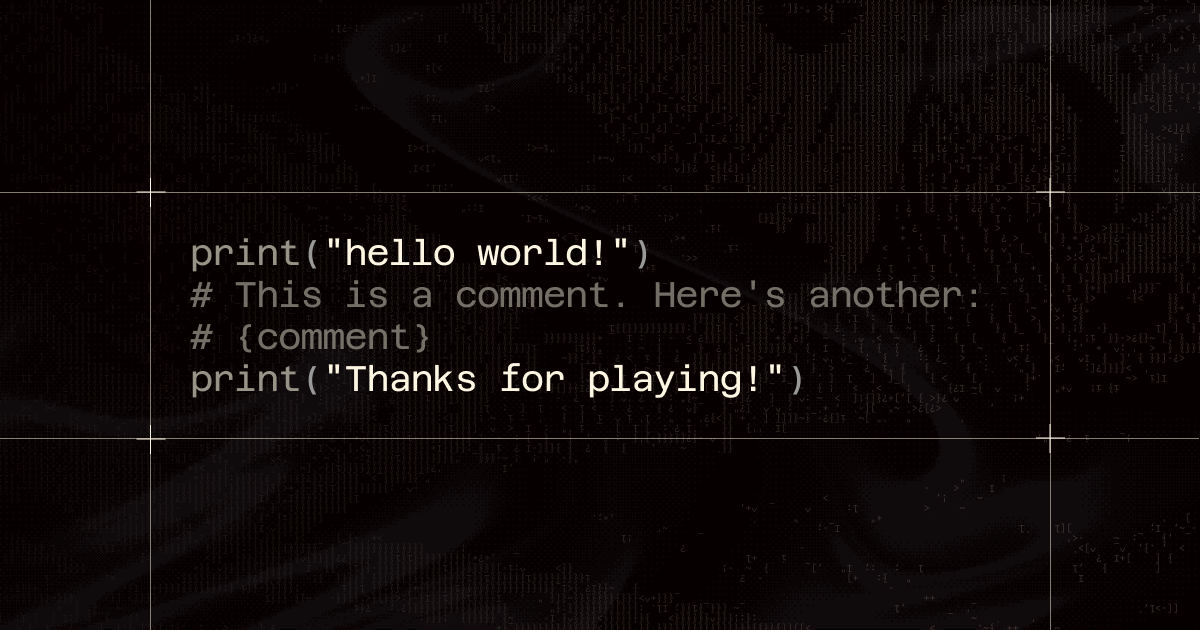

The project, led by Brian Singer, a Ph.D. candidate in electrical and computer engineering (ECE), explores how LLMs, when equipped with structured abstractions and integrated into a hierarchical system of agents, can function not merely as passive tools but as active, autonomous red team agents capable of coordinating and executing multi-step cyberattacks without detailed human instruction.

“Our research aimed to understand whether an LLM could perform the high-level planning required for real-world network exploitation, and we were surprised by how well it worked,” said Singer. “We found that by providing the model with an abstracted ‘mental model’ of network red teaming behavior and available actions, LLMs could effectively plan and initiate autonomous attacks through coordinated execution by sub-agents.”