What Happens When AI Joins the Org Chart?

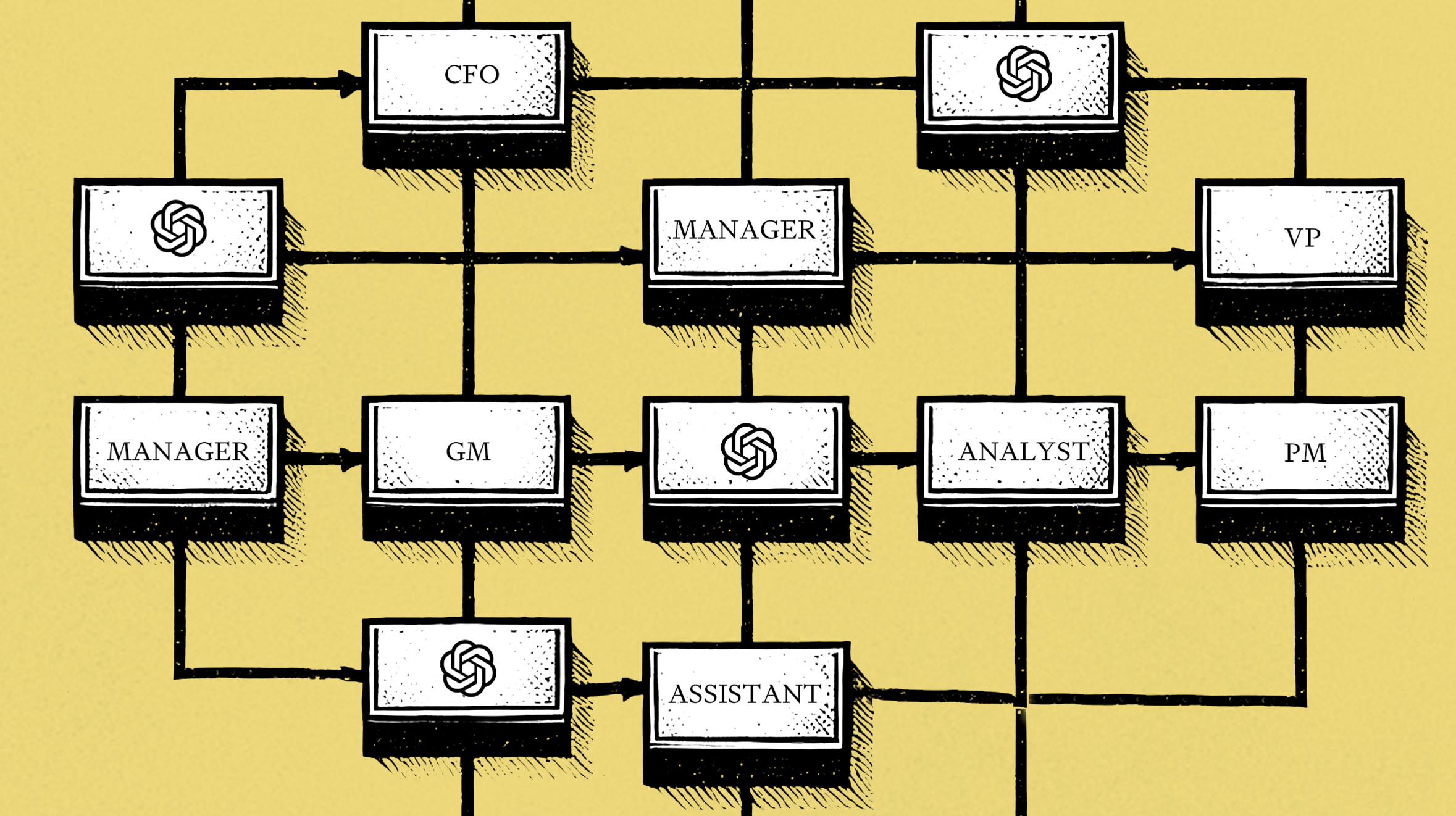

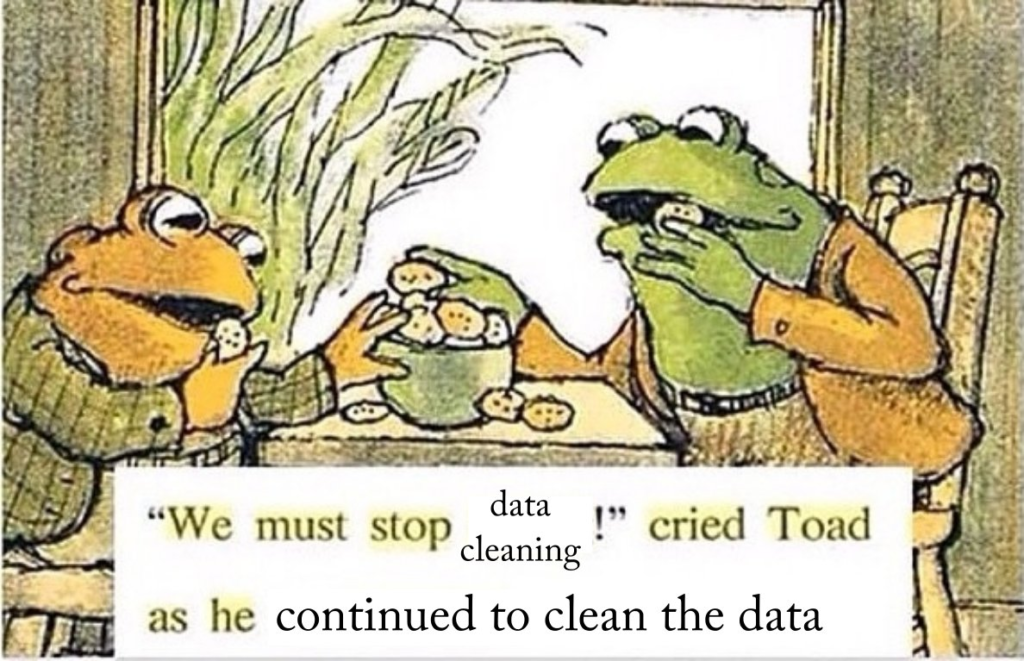

On the first weekend of the new year, OpenAI CEO Sam Altman published a post on his personal blog. The post, titled “Reflections,” is a sweeping look back at the journey of OpenAI and the potential of artificial intelligence. But the internet (and I) quickly zeroed in on one passage in particular: “We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.”

It seems the OpenAI team is determined to make AI coworkers happen. And if OpenAI is working on it, we’d better pay attention. OpenAI isn’t the only org anthropomorphizing AI tools as “workers,” either: From Silicon Valley giants such as Google, Microsoft, and IBM to startups like Glean and Lindy, tech companies are racing to develop the “AI coworkers” that will populate our virtual workspaces.

In July 2024, the software company Lattice made headlines when it declared on its blog that it would be the first organization to give AI workers official employee records. According to Lattice CEO Sarah Franklin, these AI “employees” would be onboarded, trained, given goals, and managed just like human employees.

Public backlash to the Lattice announcement was swift and fierce. Even other AI execs joined the pile-on: “Treating AI agents as employees disrespects the humanity of your real employees,” one chief of staff at an AI sales platform told The Guardian. “Worse, it implies that you view humans simply as ‘resources’ to be optimized and measured against machines.”

Three days after the initial announcement (and before the company could provide any more details), Lattice quietly walked the idea back.
For all the backlash, and all the hand-wringing about AI “taking our jobs,” many of us have already invited the technology into our workplaces, albeit in ways that feel less prone to lead us into an episode of Black Mirror. AI systems draft emails, brainstorm ideas, analyze data, and even attend meetings. But as these tools evolve from assistants to something more autonomous, we’re confronted with a question that goes beyond utility: What does it mean to work alongside something that isn’t human? And when should we worry that AI is creeping up the org chart?

The implications are enormous. AI promises to free us from drudgery and amplify our potential, but it could also erode trust, distort accountability, and fundamentally reshape how we define work. And that’s before we even get to the idea of AI managers or executives. So, as AI joins us at work, it’s worth asking: Will it be our most valuable coworker—or our most threatening one?

Sponsored by: Every

Tools for a new generation of builders

When you write a lot about AI like we do, it’s hard not to see opportunities. We build tools for our team to become faster and better. When they work well, we bring them to our readers, too. We have a hunch: If you like reading Every, you’ll like what we’ve made. Automate repeat writing with Spiral. Organize files with Sparkle. Write something new—and great—with Lex. Want to sponsor Every? Click here.

Meet your ‘model’ employees

The optimistic vision of artificial intelligence in the workplace goes something like this: The rise of AI means that human workers are freed from the grind of low-level tasks, leaving more room for creativity, strategic thinking, and work that feels truly meaningful. In this version of the future, everyone gets their own AI “direct report”—a hyper-competent assistant to handle scheduling, follow-up emails, and rote tasks like data consolidation. The human can then focus on the things that really matter.

This isn’t just fantasy.
Gallup found that the most common tasks people delegate to AI today include brainstorming, admin, and research—things that might once have landed on the plate of a junior colleague. People are even sending their AI assistants to meetings, so that’s one oft-bemoaned office task that AI can take off workers’ plates.

But the line between “assistant” and “replacement” is already blurring. Startups like Artisan and Alta are rolling out AI sales development representatives who don’t merely assist with calls but actively conduct them. These “AI SDRs” boast tireless work ethics: They never sleep, never forget to follow up, and certainly never complain about burnout.

It’s easy to see why companies find this appealing—but what about the humans they’re meant to help? Some people think AI assistants are as likely to be your “office nemesis” as they are to be your buddy. According to Fortune, AI assistants are already “ratting out” their human managers for “badmouthing” coworkers. The Washington Post similarly reported stories of AI transcription services offered by Zoom and Otter.ai “blabbing” embarrassing or confidential information after meeting participants had left the call or while the speaker was on mute. Workplace surveillance is nothing new, but AI introduces a degree of seamlessness and invisibility that could easily lead workers to say too much before they realize that they have. Proponents praise the seamlessness of AI—but maybe a little friction is a good thing.

Your AI coworkers never need a coffee break

Not every vision of workplace AI limits it to the bottom of the org chart. Some companies, like Magic AI, market their products as “AI teammates,” designed to work alongside humans, not beneath them.
The idea is intriguing: an always-available, hyper-efficient partner who can brainstorm, research, and execute without needing a lunch break or PTO.

In some cases—maybe a lot of cases—the distinction between AI “assistant” and “teammate” may be semantic, more for marketing purposes than to delineate a real difference. But for some people, AI has become a trusted sounding board. “I look at AI as my extra co-worker, as my friend,” a senior research recruiter at Amazon Web Services told Worklife. “Instead of pinging someone on my team saying, ‘Hey, can you brainstorm with me on this?’ I just go to AI first and it’s so much faster than setting up a meeting.”

But calling AI a “peer” raises thornier questions. What happens when its outputs are better than yours? Or worse, what happens when it becomes clear your “teammate” isn’t just collaborating with you but actively competing against you? Unlike human colleagues, AI doesn’t have a work-life balance to protect or a sense of fairness to maintain. It can outpace you without effort, subtly creating an expectation of 24/7 productivity that no human can match.

Then there’s the issue of trust. While it’s easy to brainstorm with a colleague, AI’s lack of emotional nuance makes it ill-equipped to navigate the gray areas of decision-making. And when mistakes happen—when AI contributes to a bad call or an ethical oversight—who shoulders the blame? Accountability, once a shared experience, becomes murkier in teams that include machines.

Help! My manager is a robot

McKinsey calls middle managers “the heart” of a company. But what happens when that heart is made up of ones and zeroes?

Some researchers claim that AI managers will be a boon to younger or less experienced workers. AI managers can track progress, send reminders, and provide structured feedback, giving junior employees a reliable and always-available resource as they train up.
As organizations flatten and decentralize, this kind of AI oversight could provide structure and support without the need for constant human supervision, creating a system where employees have more autonomy but still feel connected to the organizational mission.

Others see value accruing to the upper tiers of the organization. “Someone who is already quite advanced in their career and is already fairly self-motivated may not need a human boss anymore,” noted Phoebe Moore, a business management professor at the University of Essex. But the same qualities that make AI an effective taskmaster can make it an alienating one. Empathy and intuition are central to good management—qualities no algorithm can replicate. Imagine an AI boss delivering constructive criticism without understanding the nuances of tone or timing. What happens to team morale when every piece of feedback feels rigid, clinical, and inhuman?

Even more troubling is the risk of bias. While human managers have their own blind spots, algorithms trained on imperfect data sets—say, solely on white male work habits—can amplify inequities rather than mitigate them. If AI managers are rolled out without robust safeguards, they could exacerbate systems of inequality under the guise of “objective decision-making.”

The AI in the corner office

We’ve covered what could happen if AI became your boss, but what about the big boss? Could AI sit in the corner office? This might sound like the most science-fiction idea of all, but with AI increasingly capable of analyzing vast amounts of data, projecting market trends, and even optimizing organizational strategy, some believe AI could take on executive-level responsibilities.

AI executives might excel at certain tasks—data-driven decision-making, efficiency optimization—but their strength could be their greatest weakness. An AI that prioritizes the bottom line over all else risks creating workplaces that are ruthlessly efficient but devoid of compassion.
After all, if even human CEOs struggle to balance profit and humanity, how can we expect better from an algorithm?

The ethical challenges here are impossible to ignore. AI doesn’t have intrinsic values or a sense of right and wrong, which makes it ill-equipped to handle morally messy decisions—like balancing environmental sustainability against profit margins or deciding how to handle layoffs in a way that minimizes harm. An AI might default to chasing the metrics, leaving broader human and societal impacts as an afterthought.

And then there’s accountability. If an AI CEO’s decision blows up—causing a scandal, financial disaster, or worse—who takes the fall? The developers? The board? The company itself? Without a clear chain of responsibility, AI leadership could open up a whole new can of legal and reputational worms.

Corporate governance would also have to adapt. How do you oversee a leader that isn’t human? What does accountability even look like when decisions are made by a system rather than a person? Without meaningful checks and balances, the risks could easily outweigh the rewards.

Is AI the new office politics?

As AI climbs the org chart, we are forced to rethink not just our roles but the very nature of work. Can machines truly “collaborate” with humans, or are we destined to remain locked in a dynamic of augmentation and substitution? Will AI help us build better workplaces, or merely faster, more alienating ones?

The backlash to Lattice’s announcement about AI “employees” was swift and scathing—and potentially premature. CEO Sarah Franklin insisted the intent wasn’t to equate AI tools with human employees, but to ensure transparency and accountability as AI becomes a larger presence in the workplace. By creating a distinct type of employee record for AI tools, coded separately from humans, Lattice sought to make their role in organizations visible and governable. “The big misunderstanding was that AI is human,” Franklin explained.
“This was about putting governance and guidelines in place.”

Perhaps the real lesson of the Lattice controversy is that the discomfort wasn’t about the specifics of the proposal but about AI’s growing role in the workplace. Integrating AI into work won’t be solved by pretending it’s just another tool. It will require systems that safeguard human work, transparency, and accountability. The future of work isn’t about whether AI can be an employee. It’s about whether we, as employees and leaders, are ready to create workplaces where machines and people truly belong.

Katie Parrott is a writer, editor, and content marketer focused on the intersection of technology, work, and culture. You can read more of her work in her newsletter.

To read more essays like this, subscribe to Every, and follow us on X at @every and on LinkedIn.

We also build AI tools for readers like you. Automate repeat writing with Spiral. Organize files automatically with Sparkle. Write something great with Lex.

