Frontiers | Detecting the corruption of online questionnaires by artificial intelligence

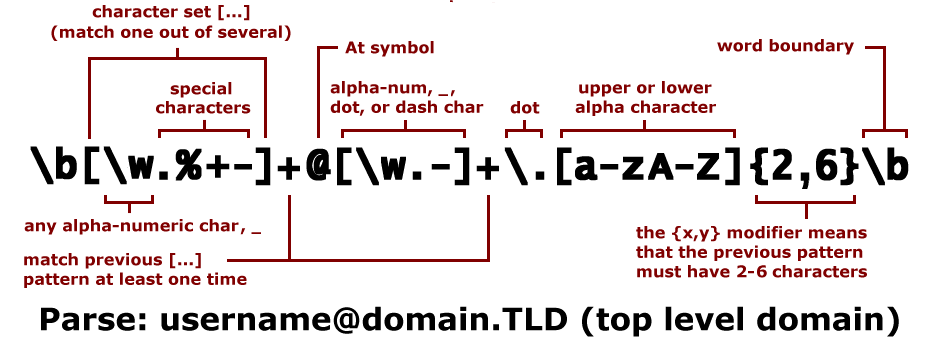

Online questionnaires that use crowdsourcing platforms to recruit participants have become commonplace because of their ease of use and low costs. Artificial intelligence (AI)-based large language models (LLMs) have made it easy for bad actors to fill in online forms automatically, including generating meaningful text for open-ended tasks. These technological advances threaten the data quality of studies that use online questionnaires. This study tested whether text generated by an AI for the purpose of an online study can be detected by humans and by automatic AI detection systems. Although humans identified the authorship of such text above chance level (76% accuracy), their performance was still below what would be required to ensure satisfactory data quality. For open-ended responses to remain a useful tool for ensuring data quality, researchers currently have to rely on bad actors lacking interest in automating them. Automatic AI detection systems are currently completely unusable. If AI-generated submissions become too prevalent, the costs of detecting fraudulent submissions will outweigh the benefits of online questionnaires, and individual attention checks will no longer be sufficient to ensure good data quality. This problem can only be addressed systematically by crowdsourcing platforms. However, they cannot rely on automatic AI detection systems, and it is unclear how they can ensure data quality for their paying clients.

The use of crowdsourcing platforms to recruit participants for online questionnaires has always been susceptible to abuse. Bad actors could click answers at random to earn money quickly, even at scale. Until recently, one solution to this problem was to ask online participants to answer open-ended questions, which cannot be completed through random button-clicking. However, the development of large language models, such as those behind ChatGPT or Bard, threatens the viability of this solution. This threat to data quality has to be understood in the wider context of methodological challenges that together contribute to what is now famously termed the “replication crisis”.