Chronos: Learning the Language of Time Series

Authors: Abdul Fatir Ansari, Lorenzo Stella, Caner Turkmen, Xiyuan Zhang, Pedro Mercado, Huibin Shen, Oleksandr Shchur, Syama Sundar Rangapuram, Sebastian Pineda Arango, Shubham Kapoor, Jasper Zschiegner, Danielle C. Maddix, Michael W. Mahoney, Kari Torkkola, Andrew Gordon Wilson, Michael Bohlke-Schneider, Yuyang Wang

Paper: https://arxiv.org/abs/2403.07815

Code & Models: https://github.com/amazon-science/chronos-forecasting

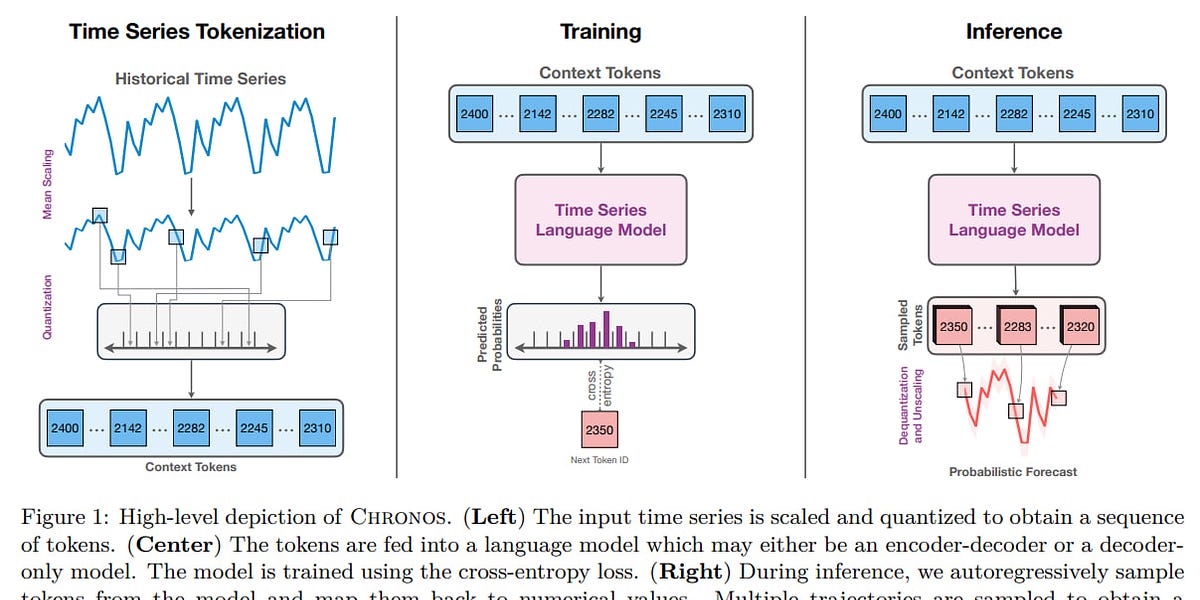

TLDR: Chronos is a pre-trained transformer-based language model for time series, representing the series as a sequence of tokens. It builds on the T5 architecture and varies in size from 20M to 710M parameters.

Time series is a vast and intriguing area. It sees less activity than fields like NLP or CV, but new work appears regularly. RNNs used to be highly popular (and are now seeing a resurgence through SSMs), and before them, classical models like ARIMA were common. Facebook’s Prophet library is another well-known example. There have also been many approaches with specialized architectures, such as Temporal Convolutional Networks (TCNs), used for weather forecasting and beyond, and the transformer-based Informer, among many others.

In the past couple of years, there have been numerous attempts to apply large language models (LLMs) to time series in various forms, from fairly direct applications, such as those based on GPT-3, Time-LLM, and PromptCast, to more specialized models like Lag-Llama or TimesFM, among many others.
