Fine-tuning OpenAI models

The prompt is simplified for this example. In reality, it extracted several different types of entities from the user input and included many more examples.

I then built a test suite covering various edge cases and compared the fine-tuned model's results with those of the non-tuned model.

I used this to quickly sanity-check the fine-tuned model's accuracy against the non-tuned model. Writing the test cases by hand was also useful: it forced me to think through the problem and understand the edge cases.
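A comparison harness of this kind can be quite small. The sketch below is not the suite used here. It assumes each model is wrapped in a hypothetical function that takes the user input and returns extracted entities as a dict, and it scores exact matches. The two stub functions stand in for real API calls so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable

# entity type -> extracted values, e.g. {"person": ["Alice"]}
Entities = dict[str, list[str]]
Model = Callable[[str], Entities]


@dataclass
class EvalCase:
    user_input: str
    expected: Entities


def score(model: Model, cases: list[EvalCase]) -> float:
    """Fraction of cases where the model's output exactly matches the expectation."""
    if not cases:
        raise ValueError("no test cases")
    passed = sum(1 for case in cases if model(case.user_input) == case.expected)
    return passed / len(cases)


def compare(models: dict[str, Model], cases: list[EvalCase]) -> dict[str, float]:
    """Run the same cases against every model and return each model's score."""
    return {name: score(model, cases) for name, model in models.items()}


# Hypothetical hand-written edge cases.
CASES = [
    EvalCase("Meet Alice in Paris", {"person": ["Alice"], "city": ["Paris"]}),
    EvalCase("No entities here", {}),
]


def fine_tuned_stub(text: str) -> Entities:
    # Stand-in for a call to the fine-tuned model.
    return {"person": ["Alice"], "city": ["Paris"]} if "Alice" in text else {}


def base_stub(text: str) -> Entities:
    # Stand-in for the non-tuned model; it misses every entity.
    return {}


results = compare({"fine-tuned": fine_tuned_stub, "base": base_stub}, CASES)
print(results)
```

Exact matching is the strictest choice. For partial credit, `score` could compare per-entity sets instead.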

However, the fine-tuned model still takes anywhere from 300 ms to 500 ms to produce a response. In contrast, we get 100 to 200 ms responses from Groq without fine-tuning.
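Latency figures like these are easiest to compare when each model is timed the same way. The following is a minimal sketch, assuming `call` is a hypothetical zero-argument wrapper around one API request. It measures end-to-end wall-clock time, network included, and reports a few summary statistics rather than a single run.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time `call` `runs` times and summarize wall-clock latency in milliseconds."""
    if runs < 2:
        raise ValueError("need at least two runs for percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    # "inclusive" keeps the percentile within the observed min..max range.
    cut_points = statistics.quantiles(samples, n=20, method="inclusive")
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "p95": cut_points[18],  # 19th of 19 cut points = 95th percentile
        "max": max(samples),
    }


# Usage with a stand-in that sleeps ~10 ms instead of calling a real model.
stats = measure_latency_ms(lambda: time.sleep(0.01), runs=5)
print({name: round(value, 1) for name, value in stats.items()})
```

Reporting the median and p95 rather than a single request makes a "300 to 500 ms" range easier to reproduce and compare across providers.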

Per token, the fine-tuned model costs about 6 times as much as gpt-3.5-turbo. However, the fine-tuned model needs far fewer system-prompt tokens to produce a response. Continuing with our example, the fine-tuned model works with a 300-token system prompt, while gpt-4-turbo needs a system prompt of approximately 1,500 tokens to produce equivalent results. (I was not able to get reliable results from gpt-3.5-turbo without fine-tuning.)
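The trade-off is simple arithmetic: per-request system-prompt cost is tokens times price. The sketch below uses placeholder prices (the gpt-3.5-turbo and gpt-4-turbo figures are hypothetical values, not quotes; check OpenAI's pricing page) and only the "6 times" ratio and the token counts from the text. It ignores user-input and output tokens.

```python
# Placeholder prices in USD per million input tokens. These are NOT quoted
# prices; substitute the current figures from OpenAI's pricing page.
BASE_GPT35_PRICE = 0.50                  # hypothetical gpt-3.5-turbo price
FINE_TUNED_PRICE = 6 * BASE_GPT35_PRICE  # "about 6 times higher", per the text
GPT4_TURBO_PRICE = 10.00                 # hypothetical gpt-4-turbo price


def system_prompt_cost(prompt_tokens: int, usd_per_million: float) -> float:
    """USD cost of sending `prompt_tokens` system-prompt tokens once."""
    return prompt_tokens * usd_per_million / 1_000_000


def break_even_price(ft_tokens: int, ft_price: float, other_tokens: int) -> float:
    """Per-million price at which the other model's prompt costs the same."""
    return ft_tokens * ft_price / other_tokens


fine_tuned = system_prompt_cost(300, FINE_TUNED_PRICE)
gpt4_turbo = system_prompt_cost(1_500, GPT4_TURBO_PRICE)
print(f"fine-tuned:  ${fine_tuned:.6f} per request")
print(f"gpt-4-turbo: ${gpt4_turbo:.6f} per request")
print(f"break-even gpt-4-turbo price: ${break_even_price(300, FINE_TUNED_PRICE, 1_500):.2f}/M")
```

Because 300 tokens is one fifth of 1,500, the fine-tuned model's system prompt is cheaper whenever gpt-4-turbo's per-token price exceeds 6/5 = 1.2 times gpt-3.5-turbo's, whatever the actual prices are.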

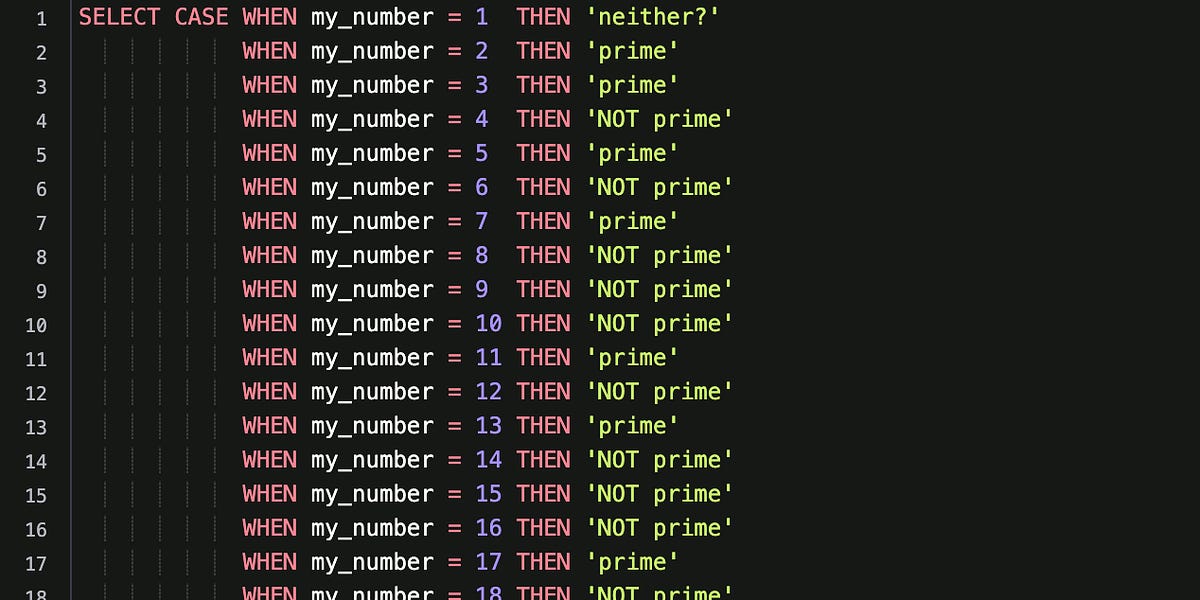

When I started reading about fine-tuning, what threw me off was that many of the examples insisted the training prompt should reflect how the model is going to be used.
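In practice, "reflect how the model is going to be used" means the training examples should contain the same system prompt and message layout that the application later sends. The sketch below builds examples in OpenAI's chat fine-tuning format (one JSON object per line with a `messages` list). The system prompt, entity types, and helper names are hypothetical, chosen only to mirror the entity-extraction task.

```python
import json

# Hypothetical system prompt; the point is to reuse this exact string at inference.
SYSTEM_PROMPT = "Extract people and cities from the user's message as JSON."


def training_example(user_input: str, expected: dict) -> dict:
    """One chat-format fine-tuning example: system, user, and target assistant reply."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_input},
            {"role": "assistant", "content": json.dumps(expected)},
        ]
    }


def inference_messages(user_input: str) -> list[dict]:
    """Messages sent to the fine-tuned model later: same system prompt, same layout."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_input},
    ]


examples = [
    training_example("Meet Alice in Paris", {"person": ["Alice"], "city": ["Paris"]}),
    training_example("No entities here", {}),
]
# JSONL: one JSON object per line, the layout expected for a training file.
jsonl = "\n".join(json.dumps(example) for example in examples)
print(jsonl)
```

Sharing `SYSTEM_PROMPT` between the two helpers keeps the training data and the production requests from drifting apart.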